Chinchilla vs Terracotta

In a face-off between Chinchilla and Terracotta, which AI large language model (LLM) tool comes out ahead? We compare features, alternatives, upvotes, reviews, pricing, and more.

Both Chinchilla and Terracotta are listed as AI-powered large language model (LLM) tools, although they serve different purposes: Chinchilla is a language model, while Terracotta is a platform for fine-tuning and evaluating models. Their upvote counts are currently tied.

Chinchilla

What is Chinchilla?

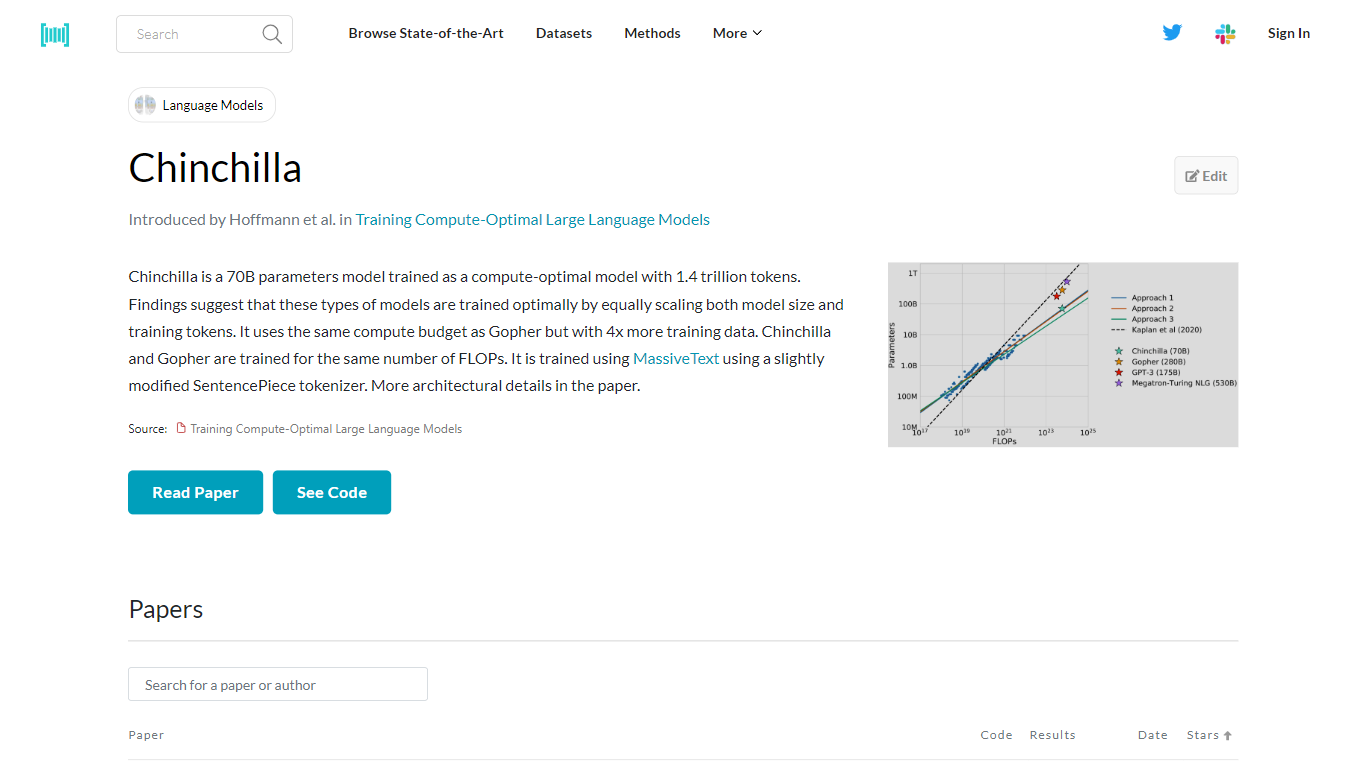

Chinchilla is a 70-billion-parameter language model designed to be compute-optimal: for a fixed compute budget, it balances model size against the amount of training data. It was trained on 1.4 trillion tokens, following research indicating that, for compute-optimal training, model size and the number of training tokens should be scaled in equal proportion (for every doubling of model size, the number of training tokens should also double). Chinchilla uses the same compute budget, measured in training FLOPs, as the earlier Gopher model (280 billion parameters); because it has four times fewer parameters, it can spend that budget on roughly four times more training data. Chinchilla was trained on MassiveText, a large text dataset, using a slightly modified SentencePiece tokenizer. Its architecture and training setup are described in detail in the accompanying paper, "Training Compute-Optimal Large Language Models" (Hoffmann et al., 2022).
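The claim that Chinchilla and Gopher share a compute budget can be sanity-checked with a rough back-of-the-envelope estimate. The sketch below assumes the widely used approximation that training costs about 6 FLOPs per parameter per token (C ≈ 6·N·D), and it uses Gopher's published size of 280B parameters and 300B training tokens, figures this article does not itself state. It is an estimate, not the exact FLOP accounting from the paper.

```python
# Rough training-compute estimate using the common approximation C ≈ 6 * N * D,
# where N is the parameter count and D is the number of training tokens.
# This is an approximation, not an exact FLOP count.

def training_flops(params: float, tokens: float) -> float:
    """Approximate total training FLOPs as 6 * params * tokens."""
    return 6 * params * tokens

# Published sizes (assumed here): Chinchilla 70B params / 1.4T tokens,
# Gopher 280B params / 300B tokens.
chinchilla = training_flops(70e9, 1.4e12)
gopher = training_flops(280e9, 300e9)

print(f"Chinchilla: {chinchilla:.2e} FLOPs")
print(f"Gopher:     {gopher:.2e} FLOPs")
print(f"Ratio (Chinchilla / Gopher): {chinchilla / gopher:.2f}")
print(f"Tokens per parameter: Chinchilla {1.4e12 / 70e9:.0f}, Gopher {300e9 / 280e9:.1f}")
```

Under this approximation both models land in the same ballpark of roughly 5 to 6 × 10^23 FLOPs, while Chinchilla sees about 20 tokens per parameter versus roughly 1 for Gopher.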

Terracotta

What is Terracotta?

Terracotta is a platform designed to streamline the workflow of developers and researchers working with large language models (LLMs). It lets you manage, iterate on, and evaluate your fine-tuned models in one place. With Terracotta, you can securely upload data, fine-tune models for tasks such as classification and text generation, and build evaluations that compare model performance using both qualitative and quantitative metrics. It supports connections to major providers such as OpenAI and Cohere. Terracotta was created by Beri Kohen and Lucas Pauker, Stanford graduates focused on advancing LLM development.

Chinchilla Top Features

Compute-Optimal Training: A 70B-parameter model trained to balance model size against the amount of training data for a fixed compute budget.

Extensive Training Data: Trained on 1.4 trillion tokens, about 20 tokens per parameter.

Balanced Compute Resources: Uses the same compute budget as Gopher while training on 4x as much data.

Efficient Resource Allocation: Spends the same number of training FLOPs as Gopher, but allocates them to a smaller model trained on more data.

Utilization of MassiveText: Trained on the MassiveText dataset, a large text corpus, using a slightly modified SentencePiece tokenizer.
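The idea of scaling model size and data together can be made concrete with a small sketch. It assumes a fixed ratio of about 20 training tokens per parameter (read off Chinchilla's own 1.4T tokens for 70B parameters, a heuristic rather than the paper's fitted scaling law) and the same C ≈ 6·N·D approximation. The function name `compute_optimal_split` is hypothetical.

```python
import math

# Heuristic ratio taken from Chinchilla itself: 1.4e12 tokens / 70e9 params = 20.
TOKENS_PER_PARAM = 20

def compute_optimal_split(flops_budget: float, tokens_per_param: float = TOKENS_PER_PARAM):
    """Hypothetical helper: split a FLOP budget into (params, tokens).

    Assumes D = k * N and C ≈ 6 * N * D, so C = 6k * N^2 and N = sqrt(C / (6k)).
    """
    params = math.sqrt(flops_budget / (6 * tokens_per_param))
    tokens = tokens_per_param * params
    return params, tokens

# Start from Chinchilla's approximate budget (6 * 70e9 * 1.4e12) and scale it up.
budget = 5.88e23
for scale in (1, 4, 16):
    n, d = compute_optimal_split(budget * scale)
    print(f"{scale:>2}x compute -> {n / 1e9:6.1f}B params, {d / 1e12:5.2f}T tokens")
```

Under these assumptions, quadrupling compute doubles both the parameter count and the token count, which is the equal-proportion scaling the Chinchilla description refers to.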

Terracotta Top Features

Manage Many Models: Manage all your fine-tuned models in one place.

Iterate Quickly: Streamline the process of model improvement with fast qualitative and quantitative evaluations.

Multiple Providers: Integrate with services from OpenAI and Cohere.

Upload Your Data: Upload and securely store your datasets for fine-tuning models.

Create Evaluations: Compare the performance of your models using metrics such as accuracy, BLEU, and confusion matrices.
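To illustrate what two of these quantitative metrics measure, here is a plain-Python sketch comparing two fine-tuned classifiers. It is not Terracotta's API: the label set, the gold labels, and both models' predictions are hypothetical, and BLEU (used for text-generation tasks) is left out to keep the example dependency-free.

```python
from collections import Counter

def accuracy(gold, pred):
    """Fraction of examples where the predicted label matches the gold label."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)

def confusion_matrix(gold, pred, labels):
    """Count (gold, predicted) pairs; rows are gold labels, columns are predictions."""
    counts = Counter(zip(gold, pred))
    return [[counts[(g, p)] for p in labels] for g in labels]

# Hypothetical evaluation set and outputs from two fine-tuned classifiers.
labels = ["neg", "pos"]
gold = ["pos", "neg", "pos", "pos", "neg", "neg"]
model_a = ["pos", "neg", "neg", "pos", "neg", "pos"]
model_b = ["pos", "neg", "pos", "pos", "neg", "neg"]

for name, pred in (("model_a", model_a), ("model_b", model_b)):
    print(name, f"accuracy={accuracy(gold, pred):.2f}")
    for label, row in zip(labels, confusion_matrix(gold, pred, labels)):
        print(f"  gold={label}: {row}")
```

Accuracy gives a single score per model, while the confusion matrix shows which labels each model mixes up; here `model_a` makes one error in each direction.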

Chinchilla Category

- Large Language Model (LLM)

Terracotta Category

- Large Language Model (LLM)

Chinchilla Pricing Type

- Freemium

Terracotta Pricing Type

- Freemium