Local AI vs mshumer/gpt-prompt-engineer - GitHub

In the contest of Local AI vs mshumer/gpt-prompt-engineer - GitHub, which AI Model Generation tool is the champion? We evaluate pricing, alternatives, upvotes, features, reviews, and more.

If you had to choose between Local AI and mshumer/gpt-prompt-engineer - GitHub, which one would you go for?

Local AI and mshumer/gpt-prompt-engineer - GitHub are both listed as AI-enabled model generation tools. Neither leads on upvotes: aitools.fyi users have given both tools the same number.

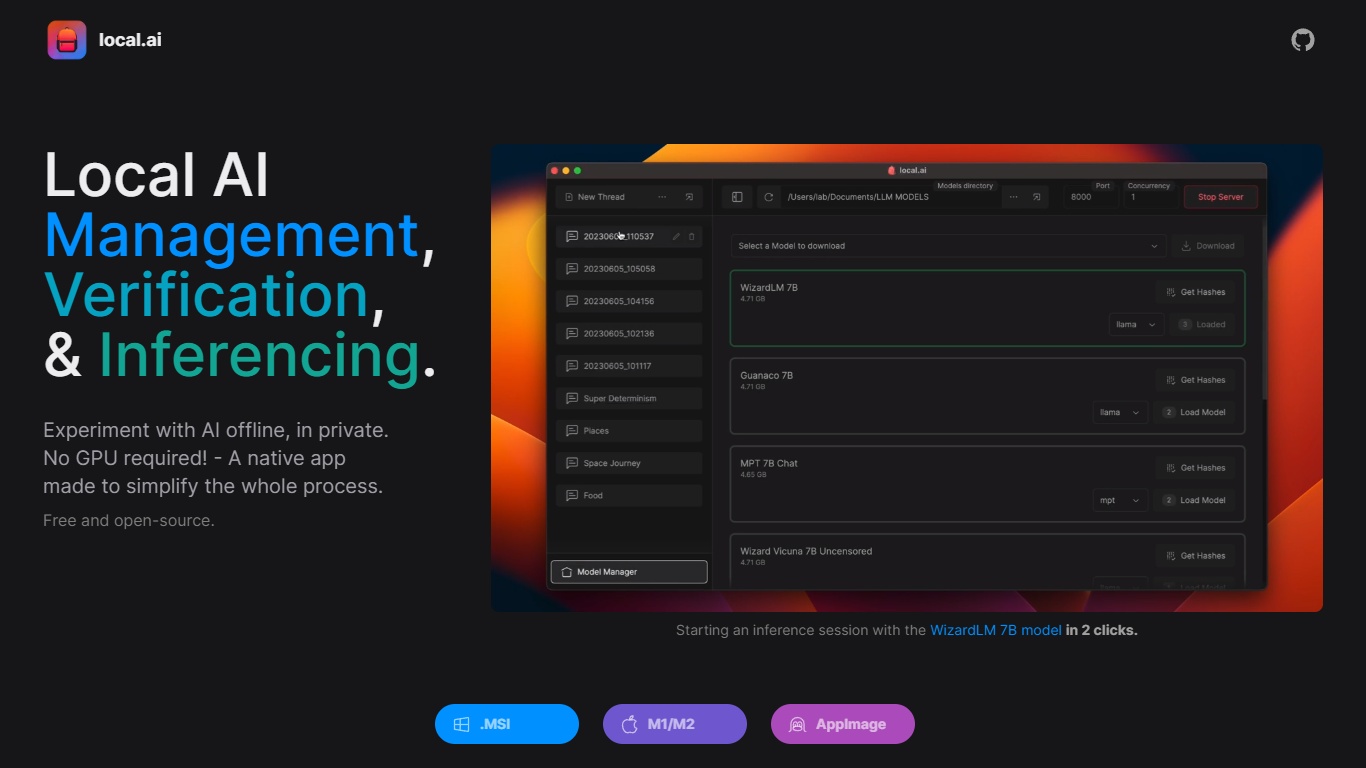

Local AI

What is Local AI?

The Local AI Playground is a native application designed to facilitate experimentation with AI models locally without any technical setup or GPU requirement.

The app covers local AI management, model verification, and inferencing, with an emphasis on privacy and ease of use. Its CPU inferencing adapts to the number of available threads and uses GGML quantization.

It is memory-efficient and compact: with its Rust backend, the app stays under 10MB on Mac M2, Windows, and Linux (.deb).

The application supports model management, enabling users to track their AI models in a centralized location with features like resumable downloads and usage-based sorting.

It also lets users check model integrity by computing BLAKE3 and SHA256 digests. For those who want to run AI models offline, Local AI Playground includes a streaming server, and it is both free and open-source.
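To make the digest check concrete, here is a minimal sketch of how a downloaded model file could be verified against a published SHA256 digest. It is not Local AI's own code (the app's backend is Rust); the function names `sha256_digest` and `verify_model` are hypothetical, and only SHA256 is shown because BLAKE3 is not part of Python's standard library.

```python
import hashlib
import hmac


def sha256_digest(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in 1 MiB chunks.

    Chunked reads keep memory use flat even for multi-gigabyte model files.
    """
    hasher = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def verify_model(path, expected_hex):
    """Return True if the file's digest matches the expected hex string.

    The expected value is normalized (whitespace stripped, lowercased)
    before a constant-time comparison. Hypothetical helper for illustration.
    """
    expected = expected_hex.strip().lower()
    return hmac.compare_digest(sha256_digest(path), expected)
```

A caller would pass the path of the downloaded file and the digest published alongside the model; a `False` result suggests a corrupted or altered download.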

mshumer/gpt-prompt-engineer - GitHub

What is mshumer/gpt-prompt-engineer - GitHub?

The GitHub repository "mshumer/gpt-prompt-engineer" is a tool for streamlining prompt engineering for AI models. Using GPT-4 and GPT-3.5-Turbo, it generates a variety of prompts for a defined use case and tests how they perform. The system ranks prompts with an ELO rating system, so users can identify the most effective ones for their needs. It is aimed at developers and researchers who want better results from AI language models, with applications in content creation, data analysis, and AI-powered applications.
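As an illustration of how an ELO ranking works in general, the sketch below applies the standard ELO update to two prompts after one head-to-head comparison. It is not taken from the repository: the function names, the K-factor of 32, and the starting rating of 1200 are assumptions chosen for the example.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard ELO model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a, rating_b, score_a, k=32):
    """Return the new (rating_a, rating_b) after one comparison.

    score_a is 1.0 if prompt A won, 0.0 if it lost, 0.5 for a draw.
    k (assumed value) controls how far a single result moves a rating.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two prompts start level (assumed starting rating); prompt A wins once.
rating_a, rating_b = update_elo(1200, 1200, score_a=1.0)
print(rating_a, rating_b)
```

Repeating this update over many pairwise comparisons and test cases lets the stronger prompts drift to the top of the ranking, and the total rating across the two prompts stays constant after each update.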

Local AI Top Features

Compact and Efficient: Memory-efficient native app with a Rust backend, under 10MB in size.

CPU Inferencing: Utilizes available CPU threads with GGML quantization for efficient performance.

Model Management: Centralized tracking and management of AI models with an easy-to-use interface.

Digest Verification: Checks the integrity of AI models by computing BLAKE3 and SHA256 digests.

Streaming Server: Simple setup for local AI model inferencing with a streaming server.

mshumer/gpt-prompt-engineer - GitHub Top Features

Prompt Generation: Leverages GPT-4 and GPT-3.5-Turbo to create potential prompts.

Prompt Testing: Evaluates prompt efficacy by testing against set cases and analyzing performance.

ELO Rating System: Ranks prompts based on competitive performance to determine effectiveness.

Classification Version: Specialized for classification tasks, matching outputs against expected results.

Portkey & Weights & Biases Integration: Offers optional logging tools for detailed prompt performance tracking.

Local AI Category

- Model Generation

mshumer/gpt-prompt-engineer - GitHub Category

- Model Generation

Local AI Pricing Type

- Freemium

mshumer/gpt-prompt-engineer - GitHub Pricing Type

- Freemium