Claude 3 \ Anthropic vs Distil*

Explore the showdown between Claude 3 \ Anthropic and Distil* and find out which AI large language model (LLM) tool wins. We analyze upvotes, features, reviews, pricing, alternatives, and more.

When comparing Claude 3 \ Anthropic and Distil*, which one rises above the other?

When we place Claude 3 \ Anthropic and Distil*, both listed as large language model (LLM) tools, side by side, several key similarities and differences stand out. Both tools currently have the same number of upvotes, so the winner is still open: aitools.fyi users decide it, and your vote can break the tie.


Claude 3 \ Anthropic

What is Claude 3 \ Anthropic?

Discover the Claude 3 model family from Anthropic. The family consists of three models, Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus, in ascending order of capability, so applications can choose the trade-off between intelligence, speed, and cost that suits them.

According to Anthropic, the Claude 3 models can respond fast enough for real-time use, process images, and handle nuanced, complex requests; Anthropic describes its most capable model, Opus, as reaching near-human levels of comprehension and fluency on complex tasks.

Balancing speed and accuracy, the models target use cases such as task automation, sales automation, and customer service. Anthropic states that the family was designed with trust and safety in mind, with an emphasis on privacy and bias mitigation.
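As a rough illustration of how an application might choose among the three tiers, the sketch below builds a request body in the shape of Anthropic's Messages API without sending it. The `pick_model` and `build_request` helpers and their priority labels are hypothetical; the model identifiers are the dated Claude 3 snapshot names and may since have been superseded, so check Anthropic's current model list before relying on them.

```python
import json

# Dated Claude 3 model identifiers (assumption: still valid; Anthropic may retire them).
CLAUDE_3_MODELS = {
    "speed": "claude-3-haiku-20240307",         # fastest, lowest-cost tier
    "balanced": "claude-3-sonnet-20240229",     # middle tier
    "intelligence": "claude-3-opus-20240229",   # most capable tier
}


def pick_model(priority: str) -> str:
    """Hypothetical helper: map a coarse priority label to a Claude 3 model ID."""
    try:
        return CLAUDE_3_MODELS[priority]
    except KeyError:
        raise ValueError(f"unknown priority: {priority!r}") from None


def build_request(prompt: str, priority: str = "balanced", max_tokens: int = 512) -> dict:
    """Assemble a Messages API request body; sending it is left to an SDK or HTTP client."""
    return {
        "model": pick_model(priority),
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_request("Summarize this support ticket in one sentence.", priority="speed")
    print(json.dumps(body, indent=2))
```

Keeping tier selection in one lookup table means a customer-service bot could default to Haiku for latency and escalate to Opus only for harder requests, without touching the rest of the request-building code.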

Distil*

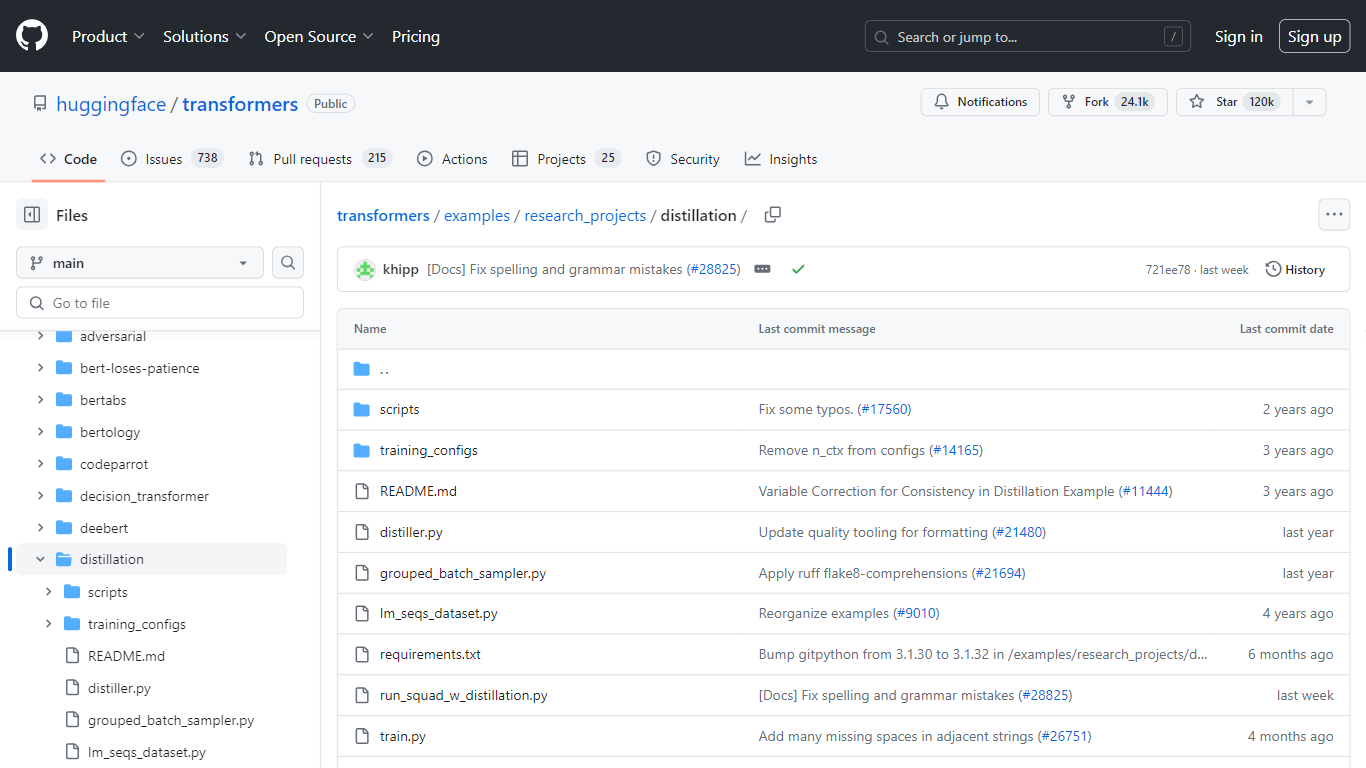

What is Distil*?

Discover cutting-edge machine learning with Hugging Face Transformers, which offers state-of-the-art models for PyTorch, TensorFlow, and JAX. Explore the 'distillation' research project in its GitHub repository to see how knowledge distillation compresses large, complex models into smaller, faster counterparts without significantly sacrificing performance.
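The core idea of knowledge distillation can be sketched as a loss that pulls the student's temperature-softened output distribution toward the teacher's. The pure-Python version below follows the generic soft-target formulation (KL divergence scaled by T²) and is only an illustration, not the repository's code: the actual training scripts work on PyTorch tensors and, for DistilBERT, combine this term with other losses.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Temperature-scaled log-softmax, using the log-sum-exp trick for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_total for x in scaled]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Soft-target loss: KL(teacher || student) on softened distributions, times T**2."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    kl = sum(
        math.exp(lt) * (lt - ls)  # p_teacher * (log p_teacher - log p_student)
        for lt, ls in zip(log_p_teacher, log_p_student)
    )
    # Soft-target gradients shrink roughly as 1/T**2, so scale back up to compensate.
    return kl * temperature ** 2


teacher_logits = [4.0, 1.0, 0.5]  # toy logits for a 3-class problem
student_logits = [3.0, 1.5, 0.2]
print(distillation_loss(student_logits, teacher_logits))
```

A higher temperature flattens the teacher's distribution, exposing how it ranks the wrong classes relative to each other; that "dark knowledge" is what the smaller student learns from.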

This part of the Hugging Face Transformers repository contains example scripts for training and using distilled models such as DistilBERT, DistilRoBERTa, and DistilGPT2. Its documentation and update notes describe ongoing improvements and show how these models can be applied to efficient natural language processing.
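DistilBERT's authors describe initializing the student from the teacher by taking one layer out of two, halving the depth before distillation training starts. The sketch below shows that idea on a toy list of layers; `init_student_from_teacher` is a hypothetical helper, and the repository's own extraction script may select a different set of teacher layers.

```python
import copy


def init_student_from_teacher(teacher_layers, stride=2):
    """Hypothetical helper: copy every `stride`-th teacher layer into the student."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    # Deep-copy so that training the student never mutates the teacher's weights.
    return [copy.deepcopy(layer) for layer in teacher_layers[::stride]]


# Toy 12-layer "teacher": each layer is a dict holding its index and some weights.
teacher = [{"index": i, "weights": [float(i)] * 4} for i in range(12)]
student = init_student_from_teacher(teacher)
print([layer["index"] for layer in student])  # [0, 2, 4, 6, 8, 10]
```

Starting from teacher weights rather than random ones gives the 6-layer student a useful initialization, which the distillation loss then refines.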


Claude 3 \ Anthropic Top Features

Next-Generation AI Models: Introducing the state-of-the-art Claude 3 model family, including Haiku, Sonnet, and Opus.

Advanced Performance: Each model in the family is designed with increasing capabilities, offering a balance of intelligence, speed, and cost.

State-Of-The-Art Vision: The Claude 3 models can process a wide range of visual formats, including photos, charts, graphs, and technical diagrams.

Enhanced Recall and Accuracy: Near-perfect recall on long-context retrieval tests (reported by Anthropic for Claude 3 Opus) and improved accuracy over previous Claude models.

Responsible and Safe Design: Commitment to safety standards, including reduced biases and comprehensive risk mitigation approaches.
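The vision capability listed above is reached through the same Messages API by mixing image and text content blocks in one user turn. The sketch below only assembles the request body from raw image bytes; `build_vision_request` and its default model are assumptions, and supported media types and size limits should be checked against Anthropic's documentation.

```python
import base64


def build_vision_request(image_bytes: bytes, media_type: str, question: str,
                         model: str = "claude-3-sonnet-20240229",
                         max_tokens: int = 512) -> dict:
    """Hypothetical helper: pair a base64-encoded image with a text question."""
    image_block = {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,  # e.g. "image/png" or "image/jpeg"
            "data": base64.b64encode(image_bytes).decode("ascii"),
        },
    }
    text_block = {"type": "text", "text": question}
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": [image_block, text_block]}],
    }


# Placeholder bytes stand in for a real chart or diagram read from disk.
request = build_vision_request(b"\x89PNG...", "image/png", "What trend does this chart show?")
print(request["messages"][0]["content"][0]["source"]["media_type"])  # image/png
```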

Distil* Top Features

Scripts and Configurations: Examples and necessary scripts for training distilled models.

Updates and Bug Fixes: Regular, documented updates and bug fixes that improve the scripts over time.

Detailed Documentation: In-depth explanations and usage instructions for each model.

State-of-the-art Models: Access to high-performance models that are optimized for speed and size.

Multilingual Support: A multilingual DistilBERT checkpoint (distilbert-base-multilingual-cased) supports many languages, increasing the versatility of applications.

Claude 3 \ Anthropic Category

- Large Language Model (LLM)

Distil* Category

- Large Language Model (LLM)

Claude 3 \ Anthropic Pricing Type

- Freemium

Distil* Pricing Type

- Free