Megatron-LM vs GPT-4

When comparing Megatron-LM vs GPT-4, which AI Large Language Model (LLM) tool shines brighter? We look at pricing, alternatives, upvotes, features, reviews, and more.

Between Megatron-LM and GPT-4, which one is superior?

Megatron-LM and GPT-4 are both AI-powered large language model (LLM) tools. Side by side, GPT-4 is the clear winner in terms of upvotes, with 9 upvotes to Megatron-LM's 6.


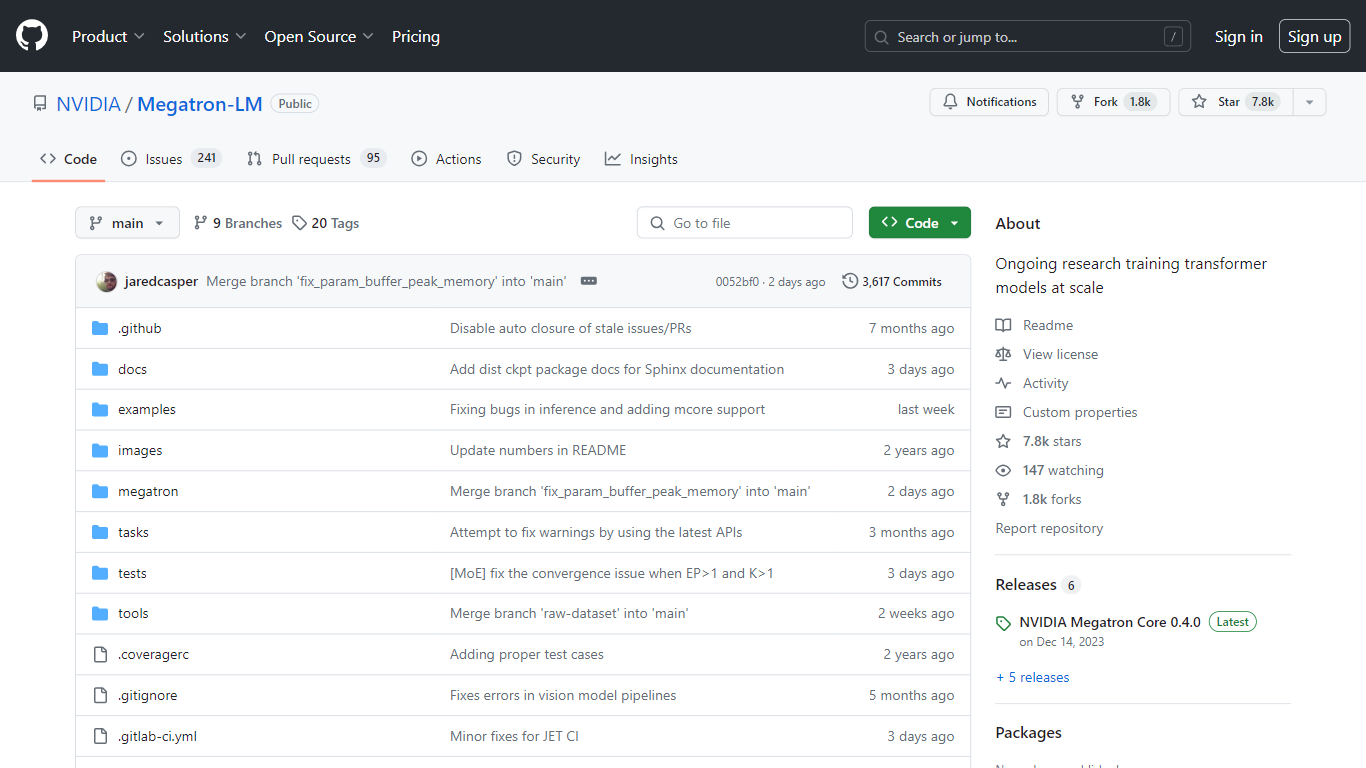

Megatron-LM

What is Megatron-LM?

NVIDIA's Megatron-LM repository on GitHub hosts cutting-edge research and development code for training transformer models at massive scale. It represents the forefront of NVIDIA's work on training large language models, focusing on efficient model-parallel and multi-node pre-training with mixed precision for models such as GPT, BERT, and T5. The public repository serves as a hub for sharing the advances made by NVIDIA's Applied Deep Learning Research team and for collaborating on large-scale language model training.
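To illustrate why mixed precision needs care, here is a generic sketch (not code from the Megatron-LM repository) of static loss scaling, a technique commonly paired with fp16 training. A very small gradient rounds to zero in half precision unless it is multiplied by a scale factor first and divided back out in full precision. The scale of 1024 and the helper names `to_fp16` and `fp16_grad` are hypothetical choices for this example. Python's `struct` `"e"` format is used to round values to IEEE 754 binary16.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (binary16) value."""
    # struct's "e" format packs binary16; unpacking converts it back to a Python float.
    # (Values beyond the fp16 range, about 65504, raise OverflowError here.)
    return struct.unpack("<e", struct.pack("<e", x))[0]

# Hypothetical static loss scale; production trainers often adjust it dynamically.
LOSS_SCALE = 1024.0

def fp16_grad(true_grad: float, scale: float = 1.0) -> float:
    """Simulate one gradient value stored in fp16, then unscaled in full precision.

    Scaling the loss by `scale` scales every gradient by the same factor (chain rule),
    so multiplying the gradient directly is equivalent for this illustration.
    """
    stored = to_fp16(true_grad * scale)  # what an fp16 gradient tensor would hold
    return stored / scale                # unscale in float64 before the optimizer step

tiny = 1e-8  # below half the smallest positive fp16 subnormal (about 6e-8)
print(fp16_grad(tiny))              # 0.0: the gradient underflows and is lost
print(fp16_grad(tiny, LOSS_SCALE))  # close to 1e-08: the scaled value survives
```

The second call recovers roughly 1.001e-08 rather than exactly 1e-08, because the scaled value still lands in fp16's coarse subnormal range. A larger scale keeps more precision but raises the risk of overflow, which is why dynamic scaling schemes exist.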

With the tools in this repository, developers and researchers can train transformer models ranging from billions to trillions of parameters while maximizing both model FLOPs and hardware FLOPs utilization. Megatron-LM's training techniques have been used in a broad range of projects, from biomedical language models to large-scale generative dialog modeling, which shows how widely they apply across AI and machine learning.
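To put "billions to trillions of parameters" in perspective, here is a back-of-the-envelope estimate of why such models must be split across many GPUs. It assumes a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (fp16 weights and gradients, plus fp32 master weights and two Adam moments) and an 80 GB GPU. Both figures are assumptions, not numbers taken from Megatron-LM. Activation memory is ignored, so the result is only a lower bound.

```python
import math

# Assumed per-parameter training state for mixed-precision Adam (rule of thumb).
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}

def training_state_bytes(num_params: int) -> int:
    """Bytes needed for weights, gradients, and optimizer state (activations excluded)."""
    return num_params * sum(BYTES_PER_PARAM.values())

def min_gpus_for_state(num_params: int, gpu_mem_gb: float = 80.0) -> int:
    """Lower bound on GPUs needed just to hold the training state, assuming perfect sharding."""
    total_gb = training_state_bytes(num_params) / 1e9
    return math.ceil(total_gb / gpu_mem_gb)

for n in (175 * 10**9, 10**12):
    tb = training_state_bytes(n) / 1e12
    print(f"{n / 1e9:,.0f}B params: {tb:.1f} TB of training state, at least {min_gpus_for_state(n)} GPUs")
```

For a 1-trillion-parameter model, this gives 16 TB of state, so at least 200 GPUs of 80 GB each before activations are even counted. A single device cannot hold such a model, which is why model-parallel training is needed.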

GPT-4

What is GPT-4?

GPT-4 is the latest milestone in OpenAI's effort to scale up deep learning.

GPT-4 is a large multimodal model (it accepts image and text inputs and emits text outputs). While it is less capable than humans in many real-world scenarios, it exhibits human-level performance on various professional and academic benchmarks. For example, it passes a simulated bar exam with a score around the top 10% of test takers, whereas GPT-3.5 scored around the bottom 10%. OpenAI reports spending 6 months iteratively aligning GPT-4 using lessons from its adversarial testing program and from ChatGPT. According to OpenAI, this produced its best-ever results (though far from perfect) on factuality, steerability, and refusing to go outside of guardrails.

OpenAI describes GPT-4 as more creative and collaborative than its predecessors. It can generate, edit, and iterate with users on creative and technical writing tasks, such as composing songs, writing screenplays, or learning a user's writing style.


Megatron-LM Top Features

Large-Scale Training: Efficient model training for large transformer models, including GPT, BERT, and T5.

Model Parallelism: Model-parallel training methods such as tensor, sequence, and pipeline parallelism.

Mixed Precision: Use of mixed precision for efficient training and maximized utilization of computational resources.

Versatile Application: Demonstrated use in a wide range of projects and research advancements in natural language processing.

Benchmark Scaling Studies: Performance scaling results up to 1 trillion parameters, utilizing NVIDIA's Selene supercomputer and A100 GPUs for training.
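Tensor parallelism, one of the model-parallel methods listed above, is easiest to see on a single linear layer Y = XW. The Megatron-LM paper describes splitting transformer weight matrices across GPUs so that each GPU holds only a shard. The pure-Python sketch below simulates that idea on one machine, with each list entry standing in for a GPU. Splitting W by columns lets each shard produce a slice of Y, and the slices are reassembled by concatenation, which stands in for an all-gather. Splitting W by rows, and X by the matching columns, gives partial products that are summed, which stands in for an all-reduce. All function names are hypothetical. Real implementations run on GPU tensors with collective communication rather than on Python lists.

```python
from typing import List

Matrix = List[List[int]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain dense matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def split_columns(m: Matrix, parts: int) -> List[Matrix]:
    """Split a matrix into `parts` equal column blocks."""
    assert len(m[0]) % parts == 0, "column count must divide evenly"
    step = len(m[0]) // parts
    return [[row[p * step:(p + 1) * step] for row in m] for p in range(parts)]

def split_rows(m: Matrix, parts: int) -> List[Matrix]:
    """Split a matrix into `parts` equal row blocks."""
    assert len(m) % parts == 0, "row count must divide evenly"
    step = len(m) // parts
    return [m[p * step:(p + 1) * step] for p in range(parts)]

def column_parallel_linear(x: Matrix, w: Matrix, world_size: int) -> Matrix:
    """Each simulated GPU multiplies X by its column shard of W; outputs are concatenated."""
    partial = [matmul(x, shard) for shard in split_columns(w, world_size)]
    # Concatenating along the feature axis stands in for an all-gather.
    return [sum((p[i] for p in partial), []) for i in range(len(x))]

def row_parallel_linear(x: Matrix, w: Matrix, world_size: int) -> Matrix:
    """Each simulated GPU multiplies its slice of X by its row shard of W; outputs are summed."""
    x_shards = split_columns(x, world_size)  # input features matching each row shard
    w_shards = split_rows(w, world_size)
    partial = [matmul(xs, ws) for xs, ws in zip(x_shards, w_shards)]
    # Element-wise summation stands in for an all-reduce.
    return [[sum(p[i][j] for p in partial) for j in range(len(w[0]))] for i in range(len(x))]

x = [[1, 2, 3, 4], [5, 6, 7, 8]]
w = [[1, 0, 2, -1], [0, 1, 1, 3], [2, 1, 0, 1], [-1, 2, 1, 0]]
reference = matmul(x, w)
print(column_parallel_linear(x, w, world_size=2) == reference)  # True
print(row_parallel_linear(x, w, world_size=2) == reference)     # True
```

In the Megatron-LM paper, a transformer MLP block pairs the two patterns. The first linear layer is column-parallel and the second is row-parallel, so the nonlinearity can be applied to each shard locally and the forward pass needs only one all-reduce.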

GPT-4 Top Features

No top features listed.

Megatron-LM Category

- Large Language Model (LLM)

GPT-4 Category

- Large Language Model (LLM)

Megatron-LM Pricing Type

- Freemium

GPT-4 Pricing Type

- Freemium