Last updated 10-23-2025

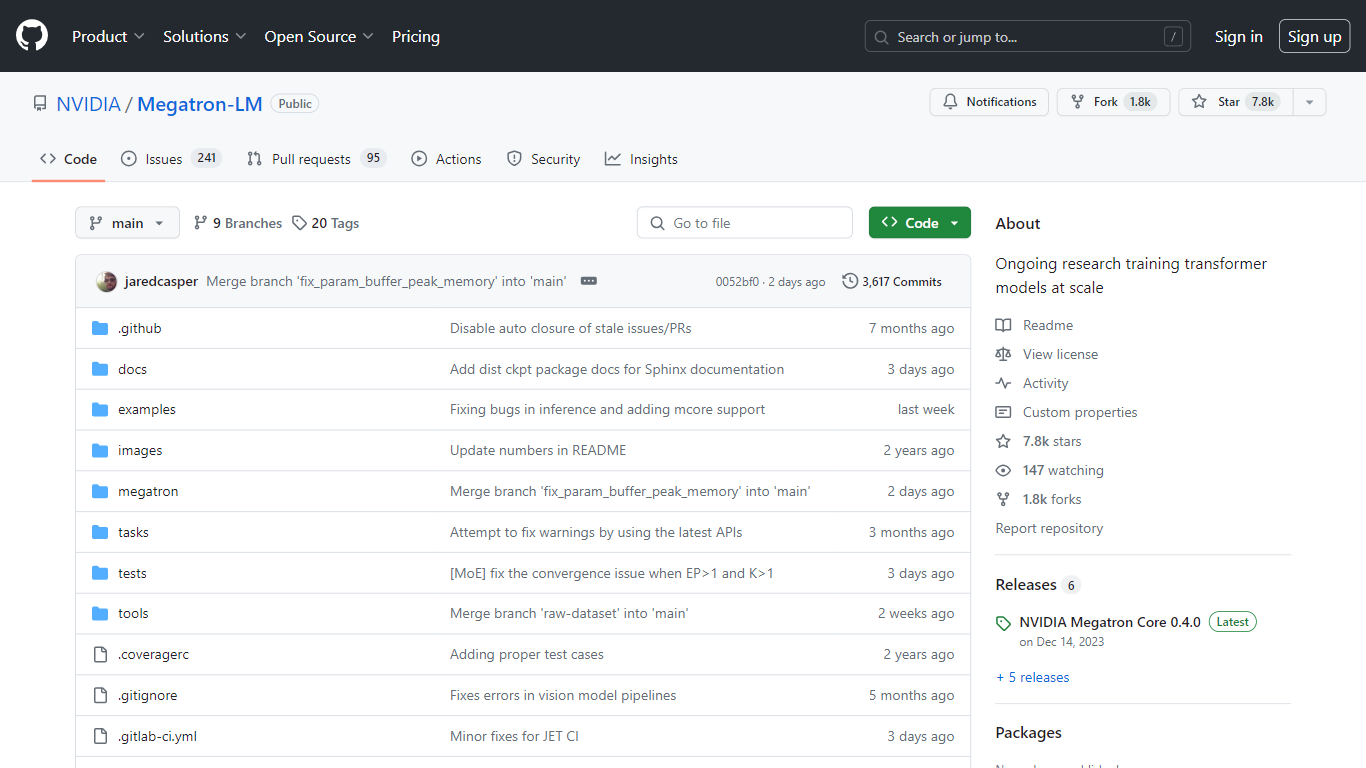

Megatron-LM

NVIDIA's Megatron-LM repository on GitHub hosts ongoing research and development on training transformer models at massive scale. It reflects the work of NVIDIA's Applied Deep Learning Research team on efficient, model-parallel, multi-node pre-training of language models such as GPT, BERT, and T5, using mixed precision. Because the repository is public, it records the team's advances and gives others a shared base for large-scale language model training.

With the tools in this repository, developers and researchers can train transformer models ranging from billions of parameters up to the trillion-parameter scale, while maximizing both model FLOPs utilization (MFU) and hardware FLOPs utilization (HFU). Megatron-LM's training techniques have been used in a broad range of projects, from biomedical language models to large-scale generative dialog modeling.
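MFU and HFU both compare the FLOPs a training run actually achieves against the hardware's theoretical peak. The following is a minimal sketch that assumes the common approximation of about 6 FLOPs per parameter per token for a dense transformer (about 2N forward and 4N backward). It also assumes about 8N when full activation recomputation reruns the forward pass, which is the extra work HFU counts. Attention-score FLOPs are ignored. The function names and the throughput figures are hypothetical illustrations, not Megatron-LM code or benchmark results. The 312 TFLOP/s value is the A100's published dense BF16 peak.

```python
def train_flops_per_token(num_params: float, full_recompute: bool = False) -> float:
    """Approximate training FLOPs per token for a dense transformer.

    Forward pass ~2N FLOPs, backward ~4N, so ~6N in total. With full
    activation recomputation the forward pass runs twice (~8N), which is
    what hardware FLOPs utilization (HFU) counts. Attention-score FLOPs
    are ignored in this approximation.
    """
    return (8.0 if full_recompute else 6.0) * num_params


def flops_utilization(num_params: float, tokens_per_sec: float, num_gpus: int,
                      peak_flops_per_gpu: float, full_recompute: bool = False) -> float:
    """Achieved FLOP/s divided by the cluster's theoretical peak FLOP/s."""
    achieved = train_flops_per_token(num_params, full_recompute) * tokens_per_sec
    return achieved / (num_gpus * peak_flops_per_gpu)


# Hypothetical run: 10B-parameter model, 50k tokens/s, 16 A100s (312 TFLOP/s BF16 peak).
mfu = flops_utilization(10e9, 50_000, 16, 312e12)
hfu = flops_utilization(10e9, 50_000, 16, 312e12, full_recompute=True)
print(f"MFU = {mfu:.1%}, HFU = {hfu:.1%}")  # prints: MFU = 60.1%, HFU = 80.1%
```

HFU exceeds MFU here only because recomputed forward passes are counted as useful hardware work. MFU is the stricter measure of end-to-end training efficiency.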

Large-Scale Training: Efficient model training for large transformer models, including GPT, BERT, and T5.

Model Parallelism: Model-parallel training methods such as tensor, sequence, and pipeline parallelism.

Mixed Precision: Training in reduced precision (FP16 or BF16) to speed up computation and reduce memory use, making fuller use of GPU hardware.

Versatile Application: Demonstrated use in a wide range of projects and research advancements in natural language processing.

Benchmark Scaling Studies: Performance scaling results up to 1 trillion parameters, utilizing NVIDIA's Selene supercomputer and A100 GPUs for training.
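With FP16 mixed precision, small gradient values can underflow to zero. A standard remedy is loss scaling: multiply the loss by a scale factor before backpropagation, divide the gradients by the same factor, and skip any step whose gradients overflow. The sketch below shows generic dynamic loss scaling, which backs the scale off on overflow and grows it after a run of clean steps. The class name and its parameters are hypothetical and are not Megatron-LM's own implementation. Gradients are modeled as a plain list of floats for illustration. BF16 shares FP32's exponent range, so it is usually trained without loss scaling.

```python
import math


class DynamicLossScaler:
    """Hypothetical sketch of dynamic loss scaling for FP16 training."""

    def __init__(self, init_scale=2.0**16, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=1000, min_scale=1.0):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self.min_scale = min_scale
        self._good_steps = 0  # consecutive steps without inf/NaN gradients

    def unscale(self, scaled_grads):
        """Divide gradients by the current scale; report any inf/NaN."""
        found_inf = any(math.isinf(g) or math.isnan(g) for g in scaled_grads)
        return [g / self.scale for g in scaled_grads], found_inf

    def update(self, found_inf):
        """Adjust the scale and return True if the optimizer step should run."""
        if found_inf:
            # Overflow: shrink the scale and skip this step.
            self.scale = max(self.scale * self.backoff_factor, self.min_scale)
            self._good_steps = 0
            return False
        self._good_steps += 1
        if self._good_steps % self.growth_interval == 0:
            # A run of clean steps: try a larger scale to preserve small gradients.
            self.scale *= self.growth_factor
        return True


scaler = DynamicLossScaler(init_scale=1024.0, growth_interval=2)

# Step 1: finite gradients are unscaled and the step is applied.
grads, found_inf = scaler.unscale([2048.0, -512.0])   # grads == [2.0, -0.5]
step_ok = scaler.update(found_inf)                    # True; scale stays 1024.0

# Step 2: an overflow skips the step and halves the scale to 512.0.
grads, found_inf = scaler.unscale([float("inf"), 1.0])
step_ok = scaler.update(found_inf)                    # False
```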

What is Megatron-LM?

Megatron-LM is an open-source codebase for training large transformer language models at scale. NVIDIA's Applied Deep Learning Research team develops it as part of its ongoing research in this area. It is a training framework rather than a single pretrained model.

What can be found in the Megatron-LM repository?

The Megatron-LM repository includes code for training language models at various scales, benchmark scaling results, and measurements of model and hardware FLOPs utilization.

How does Megatron-LM achieve model parallelism?

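Megatron-LM combines three model-parallel techniques. Tensor parallelism splits individual weight matrices within a layer across GPUs. Pipeline parallelism assigns groups of consecutive layers to different GPUs. Sequence parallelism splits activations along the sequence dimension for operations, such as layer normalization and dropout, that tensor parallelism would otherwise leave replicated.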
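Tensor parallelism can be illustrated on a transformer MLP, Z = GeLU(X A) B, following the scheme described for Megatron-LM. The first weight matrix A is split by columns and the second, B, by rows. Because GeLU is elementwise, each GPU can apply it to its own slice without communicating, and one all-reduce at the end sums the partial outputs. The single-process simulation below is written in plain Python. "Ranks" are list entries, and summing the partial results stands in for the all-reduce. The function names are illustrative and are not Megatron-LM APIs.

```python
import math


def matmul(a, b):
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gelu(x):
    """Exact GeLU via the error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def mlp(x, a, b):
    """Reference, unsharded MLP: Z = GeLU(X A) B."""
    hidden = [[gelu(v) for v in row] for row in matmul(x, a)]
    return matmul(hidden, b)


def split_columns(m, parts):
    if len(m[0]) % parts:
        raise ValueError("column count must be divisible by the number of ranks")
    width = len(m[0]) // parts
    return [[row[r * width:(r + 1) * width] for row in m] for r in range(parts)]


def split_rows(m, parts):
    if len(m) % parts:
        raise ValueError("row count must be divisible by the number of ranks")
    height = len(m) // parts
    return [m[r * height:(r + 1) * height] for r in range(parts)]


def tensor_parallel_mlp(x, a, b, tp_size):
    """Simulate tp_size ranks: rank r holds column block r of A and row block r of B."""
    partials = [mlp(x, a_r, b_r)  # each rank: GeLU(X A_r) B_r, no communication
                for a_r, b_r in zip(split_columns(a, tp_size), split_rows(b, tp_size))]
    # Summing the partial outputs plays the role of the all-reduce across ranks.
    return [[sum(p[i][j] for p in partials) for j in range(len(partials[0][0]))]
            for i in range(len(x))]


x = [[1.0, -2.0], [0.5, 3.0]]                              # 2 tokens, hidden size 2
a = [[0.1, -0.3, 0.5, 0.2], [0.4, 0.0, -0.1, 0.3]]         # 2 x 4 (FFN size 4)
b = [[0.2, -0.5], [0.3, 0.1], [-0.4, 0.6], [0.7, 0.2]]     # 4 x 2
full = mlp(x, a, b)
sharded = tensor_parallel_mlp(x, a, b, tp_size=2)          # matches full up to rounding
```

Splitting A by columns rather than rows is what lets GeLU run locally. A row split would require summing partial products before the nonlinearity, which would add a second communication step.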

What are the use cases of Megatron-LM?

Megatron-LM is used to train large-scale transformer language models, which have been applied in areas such as dialogue modeling and question answering.

What computational resources are used by Megatron-LM for training models?

Megatron-LM's scaling studies were run on NVIDIA's Selene supercomputer using A100 GPUs, training models with up to 1 trillion parameters.
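On a multi-GPU cluster, each GPU belongs to one tensor-parallel group, one pipeline stage, and one data-parallel replica. In Megatron-LM, the data-parallel size is the total GPU count divided by the product of the tensor- and pipeline-parallel sizes, which are set with launch arguments such as `--tensor-model-parallel-size` and `--pipeline-model-parallel-size`. This ignores newer options such as context parallelism. The global batch is then processed as a number of micro-batches per replica. The sketch below does this arithmetic and also estimates the pipeline "bubble", the ratio of idle time to ideal compute time, using the (p - 1) / m approximation for a non-interleaved schedule with p stages and m micro-batches. The `ParallelLayout` class and the numbers used are hypothetical and are not the configurations from the Selene scaling studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelLayout:
    """Hypothetical helper for splitting GPUs across parallelism dimensions."""
    world_size: int         # total number of GPUs
    tensor_parallel: int    # GPUs sharing each layer's weight matrices
    pipeline_parallel: int  # number of pipeline stages
    global_batch: int       # sequences per optimizer step
    micro_batch: int        # sequences per micro-batch on each data-parallel replica

    @property
    def data_parallel(self) -> int:
        model_parallel = self.tensor_parallel * self.pipeline_parallel
        if self.world_size % model_parallel:
            raise ValueError("world_size must be divisible by TP * PP")
        return self.world_size // model_parallel

    @property
    def num_microbatches(self) -> int:
        per_round = self.micro_batch * self.data_parallel
        if self.global_batch % per_round:
            raise ValueError("global_batch must be divisible by micro_batch * DP")
        return self.global_batch // per_round

    @property
    def bubble_fraction(self) -> float:
        """Idle time relative to ideal compute time: (p - 1) / m."""
        return (self.pipeline_parallel - 1) / self.num_microbatches


layout = ParallelLayout(world_size=512, tensor_parallel=8, pipeline_parallel=8,
                        global_batch=1024, micro_batch=1)
print(layout.data_parallel, layout.num_microbatches, layout.bubble_fraction)
# prints: 8 128 0.0546875
```

More micro-batches per step shrink the bubble, which is one reason large global batch sizes pair well with deep pipelines.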