Megatron-LM vs Terracotta

In the battle of Megatron-LM vs Terracotta, which AI Large Language Model (LLM) tool comes out on top? We compare reviews, pricing, alternatives, upvotes, features, and more.

Between Megatron-LM and Terracotta, which one is superior?

Megatron-LM and Terracotta are both AI-powered large language model (LLM) tools. There is no clear winner in terms of upvotes, as both tools have received the same number. Join the aitools.fyi users in deciding the winner by casting your vote.

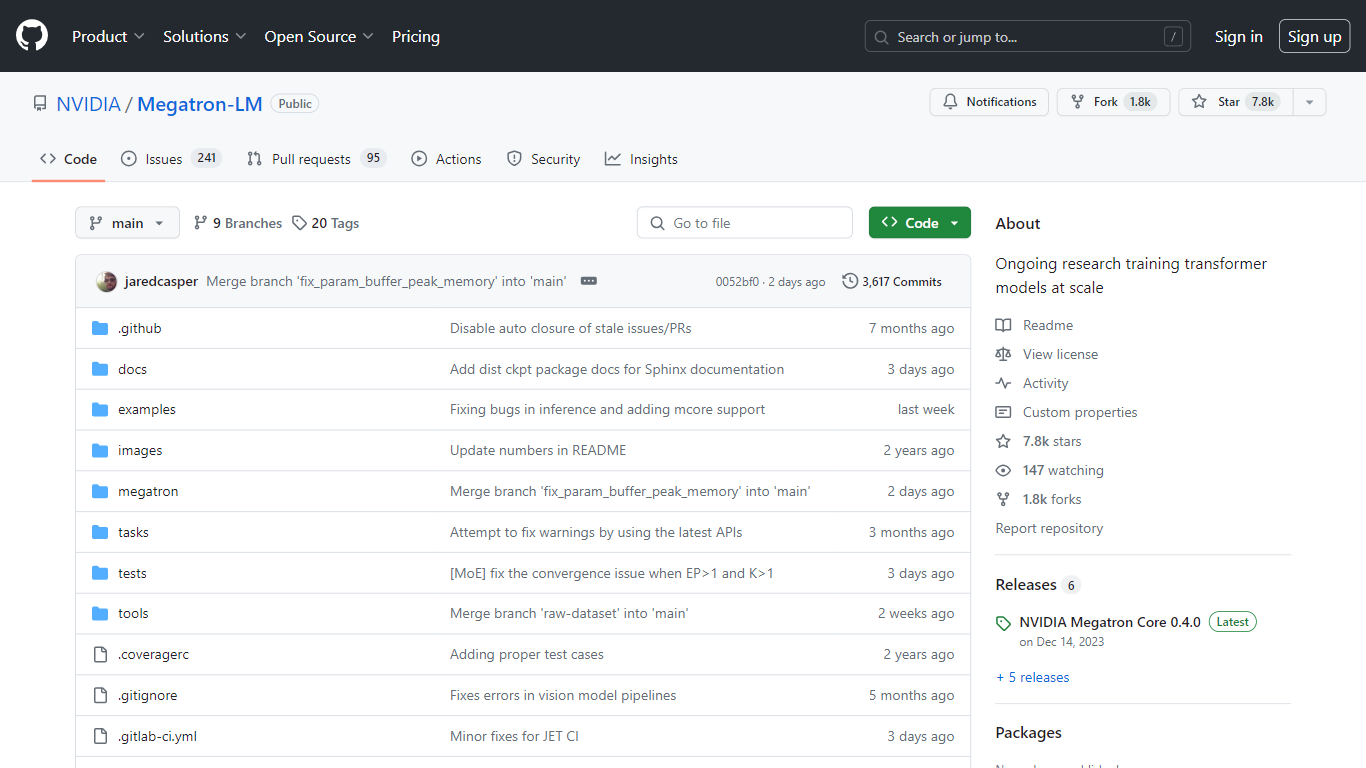

Megatron-LM

What is Megatron-LM?

NVIDIA's Megatron-LM repository on GitHub hosts research code for training transformer models at massive scale. It focuses on efficient, model-parallel, multi-node pre-training with mixed precision for models such as GPT, BERT, and T5. The public repository shares the advancements made by NVIDIA's Applied Deep Learning Research team and facilitates collaboration on large language model training.

With the tools in this repository, developers and researchers can train transformer models ranging from billions to trillions of parameters while maximizing both model and hardware FLOPs utilization. Megatron-LM's training techniques have been used in a broad range of projects, from biomedical language models to large-scale generative dialog modeling, which highlights its versatility in AI and machine learning.

Terracotta

What is Terracotta?

Terracotta is a platform designed to streamline the workflow of developers and researchers working with large language models (LLMs). It lets you manage, iterate on, and evaluate your fine-tuned models in one place. With Terracotta, you can securely upload data, fine-tune models for tasks such as classification and text generation, and create evaluations that compare model performance using both qualitative and quantitative metrics. The tool connects to major providers such as OpenAI and Cohere, giving access to a broad range of LLM capabilities. Terracotta was created by Beri Kohen and Lucas Pauker, AI enthusiasts and Stanford graduates. An email list is available for updates on new features.


Megatron-LM Top Features

Large-Scale Training: Efficient model training for large transformer models, including GPT, BERT, and T5.

Model Parallelism: Model-parallel training methods such as tensor, sequence, and pipeline parallelism.

Mixed Precision: Use of mixed precision for efficient training and maximized utilization of computational resources.

Versatile Application: Demonstrated use in a wide range of projects and research advancements in natural language processing.

Benchmark Scaling Studies: Performance scaling results up to 1 trillion parameters, utilizing NVIDIA's Selene supercomputer and A100 GPUs for training.
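To make the model parallelism feature above more concrete, here is a minimal sketch of the idea behind tensor parallelism: a weight matrix is split column-wise, each shard is multiplied separately, and the partial outputs are concatenated. This is an illustration on plain Python lists with simulated "devices"; the function names are hypothetical and it does not use or represent Megatron-LM's actual API.

```python
# Illustrative only: column-parallel matrix multiply on plain Python lists.
# The "devices" are simulated; nothing here calls Megatron-LM.

def matmul(x, w):
    """Multiply matrix x (n x k) by matrix w (k x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w, num_shards):
    """Split w column-wise into num_shards equally sized shards."""
    cols = len(w[0])
    if cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]


def column_parallel_matmul(x, w, num_shards):
    """Each simulated device multiplies x by its own column shard;
    the partial outputs are then concatenated row by row."""
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]

full = matmul(x, w)                                   # single-device result
sharded = column_parallel_matmul(x, w, num_shards=2)  # two simulated devices
print(full == sharded)
```

In a real multi-GPU setting each shard would live on a different device and the concatenation would be a collective communication step; the sketch only shows that the sharded computation reproduces the unsharded result.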

Terracotta Top Features

Manage Many Models: Centrally handle all your fine-tuned models in one convenient place.

Iterate Quickly: Streamline the process of model improvement with fast qualitative and quantitative evaluations.

Multiple Providers: Seamlessly integrate with services from OpenAI and Cohere to supercharge your development process.

Upload Your Data: Upload and securely store your datasets for the fine-tuning of models.

Create Evaluations: Conduct in-depth comparative assessments of model performance using metrics such as accuracy, BLEU, and confusion matrices.
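As a rough illustration of the kind of quantitative comparison described above, the following sketch computes accuracy and a confusion matrix for two classifiers on the same labeled examples. The data, the model names, and the helper functions are made up for this example; this is not Terracotta's API, and BLEU is left out for brevity.

```python
from collections import Counter

# Illustrative only: hypothetical helpers and toy data, not Terracotta's API.

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the gold labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true labels, columns are predicted labels, in `labels` order."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


gold = ["pos", "neg", "pos", "neg", "pos"]
model_a = ["pos", "neg", "neg", "neg", "pos"]  # hypothetical fine-tuned model A
model_b = ["pos", "pos", "neg", "neg", "neg"]  # hypothetical fine-tuned model B

for name, preds in [("model_a", model_a), ("model_b", model_b)]:
    print(name, accuracy(gold, preds), confusion_matrix(gold, preds, ["pos", "neg"]))
```

Accuracy gives a single number for ranking models, while the confusion matrix shows which classes a model confuses, which is often more useful when deciding what fine-tuning data to add.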

Megatron-LM Category

- Large Language Model (LLM)

Terracotta Category

- Large Language Model (LLM)

Megatron-LM Pricing Type

- Freemium

Terracotta Pricing Type

- Freemium