StabilityAI's Stable Diffusion v2-1 vs Drag Your GAN

Explore how StabilityAI's Stable Diffusion v2-1 compares with Drag Your GAN and find out which AI image generation model stands out. We examine alternatives, upvotes, features, reviews, pricing, and more.

When comparing StabilityAI's Stable Diffusion v2-1 and Drag Your GAN, which one rises above the other?

Placing StabilityAI's Stable Diffusion v2-1 and Drag Your GAN, two AI-powered image generation models, side by side brings out several similarities and differences. On upvotes, Drag Your GAN comes out ahead: it has received 8 upvotes from aitools.fyi users, while StabilityAI's Stable Diffusion v2-1 has received 6.

StabilityAI's Stable Diffusion v2-1

What is StabilityAI's Stable Diffusion v2-1?

Embark on a groundbreaking journey with StabilityAI, where the fusion of open source and open science propels the democratization of artificial intelligence. Discover the Stable Diffusion v2-1 model, rigorously fine-tuned and ready to empower your creative and research endeavors in unprecedented ways. Immerse yourself in the world of text-to-image generation, where your prompts come to life through vivid images synthesized by advanced diffusion models.

Delve into the intricacies of Stable Diffusion v2-1, crafted by a team of experts including Robin Rombach and Patrick Esser. Leveraging a Latent Diffusion Model equipped with a pretrained text encoder, this AI marvel enables you to explore the realms of artistic creation, safe content deployment, and the understanding of AI biases and limitations. Ready to engage with the transformative power of AI? Connect with StabilityAI's Stable Diffusion v2-1 model today.

Drag Your GAN

What is Drag Your GAN?

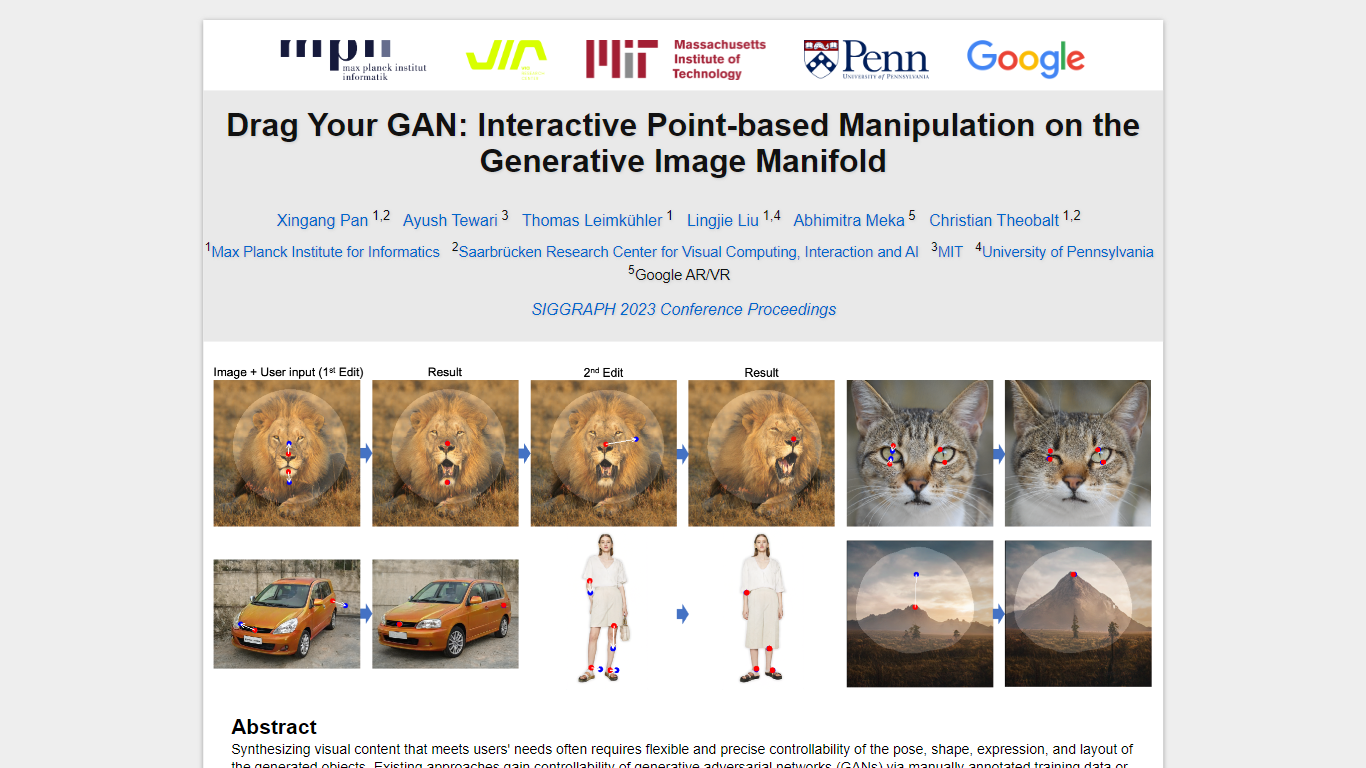

In the realm of synthesizing visual content to meet users' needs, achieving precise control over pose, shape, expression, and layout of generated objects is essential. Traditional approaches to controlling generative adversarial networks (GANs) have relied on manual annotations during training or prior 3D models, often lacking the flexibility, precision, and versatility required for diverse applications.

In our research, we explore an innovative and relatively uncharted method for GAN control: the ability to "drag" specific image points so that they precisely reach user-defined target points in an interactive manner (as illustrated in Fig. 1). This approach has led to the development of DragGAN, a novel framework comprising two core components:

Feature-Based Motion Supervision: This component uses the generator's features to drive handle points within the image toward their intended target positions.

Point Tracking: Leveraging discriminative GAN features, our new point tracking technique continuously localizes the position of handle points.
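The point tracking component described above can be illustrated with a toy sketch. It assumes tracking is done as a nearest-neighbor search: the feature vector recorded at the original handle point is compared (by L1 distance) against the features in a small square patch around the current handle position, and the best match becomes the new position. The function names, the list-based feature map, and the `radius` parameter are hypothetical choices for illustration; DragGAN itself works on GAN feature maps and may differ in its details.

```python
from typing import List, Sequence, Tuple

# Toy feature map: feature_map[row][col] is a feature vector (assumed layout).
FeatureMap = List[List[Sequence[float]]]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of absolute differences between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def track_handle_point(
    feature_map: FeatureMap,
    reference_feature: Sequence[float],
    current: Tuple[int, int],
    radius: int,
) -> Tuple[int, int]:
    """Return the (row, col) inside a square patch around `current` whose
    feature is closest to `reference_feature`. Ties keep the current point."""
    rows, cols = len(feature_map), len(feature_map[0])
    r0, c0 = current
    best_point = current
    best_dist = l1_distance(feature_map[r0][c0], reference_feature)
    # Scan the patch, clipped to the borders of the feature map.
    for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1)):
        for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1)):
            dist = l1_distance(feature_map[r][c], reference_feature)
            if dist < best_dist:
                best_point, best_dist = (r, c), dist
    return best_point


if __name__ == "__main__":
    # 5x5 map of zero features with one distinctive feature at (2, 3).
    fmap = [[[0.0, 0.0] for _ in range(5)] for _ in range(5)]
    fmap[2][3] = [1.0, 2.0]
    print(track_handle_point(fmap, [1.0, 2.0], (2, 2), radius=1))
```

In an interactive editing loop, a step like this would run after each motion supervision update so that the next update starts from the handle point's refreshed location.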

DragGAN empowers users to deform images with remarkable precision, enabling manipulation of the pose, shape, expression, and layout across diverse categories such as animals, cars, humans, landscapes, and more. These manipulations take place within the learned generative image manifold of a GAN, resulting in realistic outputs, even in complex scenarios like generating occluded content and deforming shapes while adhering to the object's rigidity.

Our comprehensive evaluations, encompassing both qualitative and quantitative comparisons, highlight DragGAN's superiority over existing methods in tasks related to image manipulation and point tracking. Additionally, we demonstrate its capabilities in manipulating real-world images through GAN inversion, showcasing its potential for various practical applications in the realm of visual content synthesis and control.

StabilityAI's Stable Diffusion v2-1 Upvotes

- 6

Drag Your GAN Upvotes

- 8

StabilityAI's Stable Diffusion v2-1 Top Features

High-Resolution Image Synthesis: Generate exquisite images using the advanced text-to-image model Stable Diffusion v2-1.

Research-Oriented: The model targets research applications, including probing the limitations and biases of AI and the safe deployment of content-generating models.

Artistic and Educational Applications: Harness AI to generate artworks, or use it as a tool in design and other creative processes.

Advanced Training Techniques: Trained on LAION datasets, with text conditioning supplied through cross-attention and a v-objective loss used during training.

Open Source Licensing: Ensures the freedom to use, modify, and distribute the model under the CreativeML Open RAIL++-M License.
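The v-objective named among the training techniques above can be sketched in a few lines. This is a minimal illustration that assumes the common v-parameterization, in which a noisy sample is x_t = alpha * x0 + sigma * noise with alpha² + sigma² = 1, and the network is trained to predict v = alpha * noise - sigma * x0. The listing does not spell out the exact formulation used for Stable Diffusion v2-1, so the function names and the scalar-list data layout here are hypothetical.

```python
import math
from typing import List


def noisy_sample(x0: List[float], noise: List[float], alpha: float, sigma: float) -> List[float]:
    """Forward diffusion step: x_t = alpha * x0 + sigma * noise."""
    return [alpha * x + sigma * e for x, e in zip(x0, noise)]


def v_target(x0: List[float], noise: List[float], alpha: float, sigma: float) -> List[float]:
    """Training target under the assumed v-parameterization."""
    return [alpha * e - sigma * x for x, e in zip(x0, noise)]


def recover_x0(x_t: List[float], v: List[float], alpha: float, sigma: float) -> List[float]:
    """Invert the parameterization: x0 = alpha * x_t - sigma * v."""
    return [alpha * xt - sigma * vi for xt, vi in zip(x_t, v)]


def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between a predicted v and the target v."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


if __name__ == "__main__":
    # Pick alpha and sigma on the unit circle so alpha^2 + sigma^2 = 1.
    alpha, sigma = math.cos(0.3), math.sin(0.3)
    x0, noise = [0.5, -1.0], [0.2, 0.7]
    x_t = noisy_sample(x0, noise, alpha, sigma)
    v = v_target(x0, noise, alpha, sigma)
    # A perfect v prediction lets the clean sample be recovered from x_t.
    print(recover_x0(x_t, v, alpha, sigma))
    print(mse(v, v))
```

Predicting v rather than the raw noise means a single network output carries information about both the clean sample and the noise at every noise level.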

Drag Your GAN Top Features

No top features listed

StabilityAI's Stable Diffusion v2-1 Category

- Image Generation Model

Drag Your GAN Category

- Image Generation Model

StabilityAI's Stable Diffusion v2-1 Pricing Type

- Freemium

Drag Your GAN Pricing Type

- Free