AIML API vs ggml.ai

Compare AIML API vs ggml.ai and see which AI Large Language Model (LLM) tool is better when we compare features, reviews, pricing, alternatives, upvotes, etc.

Which one is better? AIML API or ggml.ai?

When we compare AIML API with ggml.ai, both AI-powered large language model (LLM) tools, AIML API leads narrowly in upvotes: aitools.fyi users have upvoted AIML API 7 times and ggml.ai 6 times.


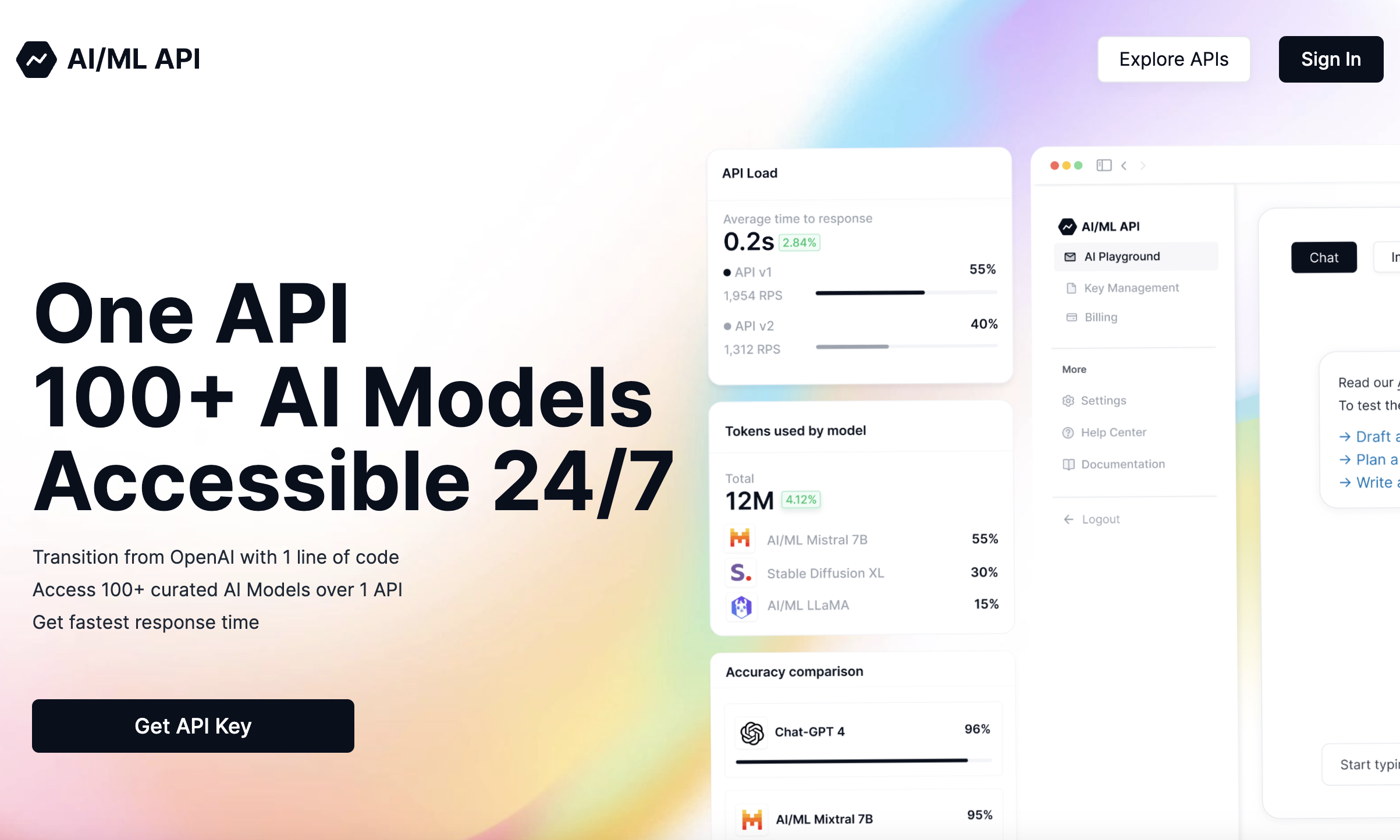

AIML API

What is AIML API?

aimlapi.com provides access to more than 100 AI models through a single API, available around the clock. The platform positions itself as an alternative to OpenAI and says that switching requires changing just one line of code. It promises low-latency responses and cost savings of up to 80% compared with OpenAI's services.

Aimed at developers, aimlapi.com is designed to be both developer-friendly and budget-conscious, offering an OpenAI-compatible API structure and serverless inference to reduce deployment and maintenance costs. The available models cover Chat, Language, Image, Code, and Embedding use cases, with the platform promising high uptime, fast response times, and reliable performance.
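To illustrate what an OpenAI-compatible API structure can mean in practice, here is a minimal sketch that builds (but does not send) a chat completion request using only the Python standard library. The base URL for aimlapi.com, the model name, and the helper `build_chat_request` are assumptions made for illustration; check the provider's documentation for the real values.

```python
import json
import urllib.request

# Assumed base URLs; confirm the provider's value in its own documentation.
OPENAI_BASE_URL = "https://api.openai.com/v1"
AIMLAPI_BASE_URL = "https://api.aimlapi.com/v1"  # assumption, not stated on this page


def build_chat_request(base_url, api_key, model, messages):
    """Build, without sending, an OpenAI-style chat completion request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": "Bearer " + api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching providers is the single change: pass a different base URL.
request = build_chat_request(
    AIMLAPI_BASE_URL,
    api_key="YOUR_API_KEY",      # placeholder, not a real key
    model="example-model-name",  # hypothetical model identifier
    messages=[{"role": "user", "content": "Hello"}],
)
print(request.full_url)
```

Because the request shape stays the same, the rest of an application would not need to change when the base URL does, which is the idea behind the one-line migration claim.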

Additionally, the API's pricing model is straightforward and predictable, with the monthly subscription covering 10M tokens, comparable to processing the entire Harry Potter book series ten times. aimlapi.com is dedicated to transparency and advanced tracking, allowing for precise monitoring of API calls and token expenditure. Whether it's for prototyping or full-scale production, aimlapi.com ensures accessible and powerful AI solutions for every developer.
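As a rough illustration of tracking token expenditure against a 10M-token monthly allowance, the sketch below keeps a running total on the client side. The `TokenBudget` class is hypothetical, and it assumes each response carries an OpenAI-style `usage` object with a `total_tokens` field; it is not the platform's own tracking feature.

```python
class TokenBudget:
    """Client-side running total of tokens used against a monthly limit."""

    def __init__(self, monthly_limit=10_000_000):
        self.monthly_limit = monthly_limit
        self.used = 0
        self.calls = 0

    def record(self, usage):
        """Add one API call's usage (assumed OpenAI-style dict) to the total."""
        self.used += usage["total_tokens"]
        self.calls += 1

    @property
    def remaining(self):
        # Never report a negative balance, even if the limit was exceeded.
        return max(0, self.monthly_limit - self.used)


budget = TokenBudget()
# Example usage payloads; the numbers are made up for illustration.
budget.record({"prompt_tokens": 120, "completion_tokens": 380, "total_tokens": 500})
budget.record({"prompt_tokens": 900, "completion_tokens": 600, "total_tokens": 1500})
print(budget.calls, budget.used, budget.remaining)
```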

ggml.ai

What is ggml.ai?

ggml.ai is at the forefront of AI technology, bringing powerful machine learning capabilities directly to the edge with its innovative tensor library. Built for large model support and high performance on common hardware platforms, ggml.ai enables developers to implement advanced AI algorithms without the need for specialized equipment. The platform, written in the efficient C programming language, offers 16-bit float and integer quantization support, along with automatic differentiation and various built-in optimization algorithms like ADAM and L-BFGS. It boasts optimized performance for Apple Silicon and leverages AVX/AVX2 intrinsics on x86 architectures. Web-based applications can also exploit its capabilities via WebAssembly and WASM SIMD support. With its zero runtime memory allocations and absence of third-party dependencies, ggml.ai presents a minimal and efficient solution for on-device inference.
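To give a feel for what integer quantization does, here is a minimal sketch of symmetric 8-bit quantization of one block of values, written in Python for readability. It is a generic illustration only: the function names are hypothetical, and it does not reproduce ggml's actual C implementation or its storage formats.

```python
def quantize_block(values):
    """Symmetric 8-bit quantization of one block: returns (scale, integer codes)."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 0.0, [0] * len(values)
    # One shared scale per block maps the largest magnitude to 127.
    scale = max_abs / 127.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floating-point values from the integer codes."""
    return [scale * c for c in codes]


block = [0.5, -1.0, 0.25, 0.0, 0.75, -0.125, 1.0, -0.6]
scale, codes = quantize_block(block)
restored = dequantize_block(scale, codes)
max_error = max(abs(a - b) for a, b in zip(block, restored))
print(codes, max_error)
```

Each value is stored as a small integer plus one shared scale per block, so memory use drops while the rounding error per value stays within half of the scale.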

Projects like whisper.cpp and llama.cpp demonstrate the high-performance inference capabilities of ggml.ai, with whisper.cpp providing speech-to-text solutions and llama.cpp focusing on efficient inference of Meta's LLaMA large language model. Moreover, the company welcomes contributions to its codebase and supports an open-core development model through the MIT license. As ggml.ai continues to expand, it seeks talented full-time developers with a shared vision for on-device inference to join its team.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

AIML API Upvotes

- 7

ggml.ai Upvotes

- 6

AIML API Top Features

Serverless Inference: Eliminate deployment and maintenance hassles, focusing solely on innovation.

Swift Transition from OpenAI: Easily migrate from OpenAI with minimal changes, making the switch highly convenient.

100+ AI Models: Access a diverse range of AI models through one API, ensuring versatility and richness in applications.

Cost Savings: Enjoy significant cost reductions, with the platform claiming to be up to 80% cheaper than OpenAI at similar levels of accuracy and speed.

Predictable Pricing: Benefit from straightforward, flat-rate pricing that the platform describes as among the most competitive in the market.

Uptime and Speed: Rely on a 99% uptime guarantee with rapid response times, even under heavy loads.

Reliability & Accessibility: Count on consistent performance from the platform, whether for prototyping or full-scale production.

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Lets web applications use machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Enhances human-computer interaction with more intuitive AI-generated responses.

AIML API Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

AIML API Pricing Type

- Freemium

ggml.ai Pricing Type

- Freemium