fastchat vs ggml.ai

When comparing fastchat vs ggml.ai, which AI Large Language Model (LLM) tool shines brighter? We look at pricing, alternatives, upvotes, features, reviews, and more.

In a comparison between fastchat and ggml.ai, which one comes out on top?

When we put fastchat and ggml.ai side by side, both being AI-powered large language model (LLM) tools, the upvote count reveals a draw: both tools have earned the same number of upvotes. Other aitools.fyi users can still decide the winner, so the ball is in your court: cast your vote and help us settle it.

Think we got it wrong? Cast your vote and show us who's boss!

fastchat

What is fastchat?

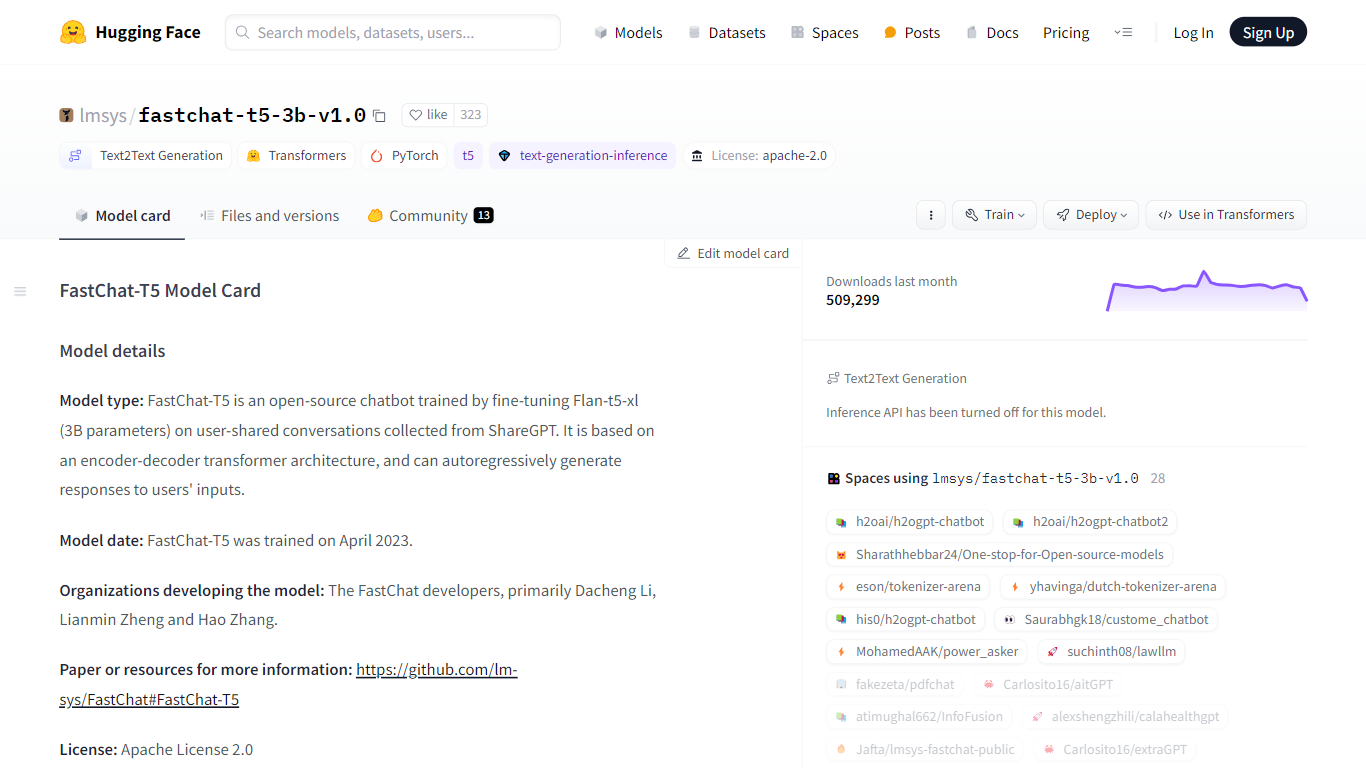

The lmsys/fastchat-t5-3b-v1.0 model, hosted on the Hugging Face platform, is an open-source chatbot model designed for fluent, coherent conversation. It was created by fine-tuning Flan-T5-XL, a model with 3 billion parameters, on conversations sourced from ShareGPT. It provides a foundation for developing responsive chatbots for commercial applications, and it is also a useful resource for researchers working on natural language processing and machine learning. Developed in April 2023 by the FastChat team, led by Dacheng Li, Lianmin Zheng, and Hao Zhang, the model uses an encoder-decoder transformer architecture for language understanding and generation.

It was fine-tuned on a dataset of 70,000 conversations, giving it broad coverage of prompts and queries. The model has been tested, including a preliminary evaluation with GPT-4, which points to its potential to provide informative and conversationally relevant responses. Because it is open source under the Apache 2.0 License, the model invites collaboration and innovation, supporting open science and broader access to AI.
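As an illustration of how a model hosted on Hugging Face is typically used, here is a minimal sketch that loads lmsys/fastchat-t5-3b-v1.0 with the `transformers` library and generates one reply. It assumes `transformers`, `torch`, and `sentencepiece` are installed and that several gigabytes of weights can be downloaded. The helper names `load_model` and `generate_reply` are hypothetical, and passing a raw prompt string is a simplification: FastChat's own tooling may wrap prompts in a conversation template.

```python
MODEL_ID = "lmsys/fastchat-t5-3b-v1.0"


def load_model(model_id=MODEL_ID):
    """Download and load the tokenizer and model (hypothetical helper)."""
    # Imported lazily so the module can be imported without the heavy dependencies.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    # Assumption: the slow (SentencePiece) tokenizer is requested explicitly.
    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_id)
    return tokenizer, model


def generate_reply(tokenizer, model, prompt, max_new_tokens=64):
    """Encode the prompt, run encoder-decoder generation, decode the first result."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example usage (downloads the model on first run):
#   tokenizer, model = load_model()
#   print(generate_reply(tokenizer, model, "What is an encoder-decoder transformer?"))
```

Keeping loading and generation in separate functions means the model is loaded once and reused across prompts.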

ggml.ai

What is ggml.ai?

ggml.ai brings machine learning capabilities directly to the edge with its tensor library. Built for large model support and high performance on common hardware platforms, ggml.ai lets developers run advanced AI algorithms without specialized equipment. The library, written in C, offers 16-bit float and integer quantization support, along with automatic differentiation and built-in optimization algorithms such as ADAM and L-BFGS. It is optimized for Apple Silicon and uses AVX/AVX2 intrinsics on x86 architectures. Web-based applications can also use it via WebAssembly and WASM SIMD support. With zero memory allocations at runtime and no third-party dependencies, ggml.ai offers a minimal and efficient solution for on-device inference.
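To make the idea of integer quantization concrete, the sketch below shows a simple block-wise symmetric scheme in plain Python: each block of weights is stored as small integers plus one floating-point scale. This is only an illustration of the general technique, not ggml's actual storage format or API; the block size of 32, the 4-bit range, and all function names are assumptions chosen for the example.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # assumption for illustration, not taken from ggml

Block = Tuple[float, List[int]]  # (scale, quantized integers)


def quantize_block(values: List[float], bits: int = 4) -> Block:
    """Map one block of floats to signed integers sharing a single scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit symmetric quantization
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quants = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale: float, quants: List[int]) -> List[float]:
    """Recover approximate floats from one quantized block."""
    return [scale * q for q in quants]


def quantize(values: List[float], block_size: int = BLOCK_SIZE, bits: int = 4) -> List[Block]:
    """Split the weights into fixed-size blocks and quantize each independently."""
    return [
        quantize_block(values[i:i + block_size], bits)
        for i in range(0, len(values), block_size)
    ]


def dequantize(blocks: List[Block]) -> List[float]:
    """Concatenate the dequantized blocks back into one list of floats."""
    out: List[float] = []
    for scale, quants in blocks:
        out.extend(dequantize_block(scale, quants))
    return out
```

In this scheme each restored weight differs from the original by at most half a quantization step (`scale / 2`) within its block. The payoff is memory: a 3-billion-parameter model needs roughly 6 GB for its weights at 16 bits each, and roughly 1.5 GB at 4 bits each, plus a small overhead for the per-block scales.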

Projects like whisper.cpp and llama.cpp demonstrate the high-performance inference capabilities of ggml.ai, with whisper.cpp providing speech-to-text and llama.cpp focusing on efficient inference of Meta's LLaMA large language model. The company also welcomes contributions to its codebase and supports an open-core development model through the MIT license. As ggml.ai continues to expand, it is looking for talented full-time developers who share its vision for on-device inference to join its team.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

fastchat Top Features

Model Architecture: Open-source chatbot using the encoder-decoder transformer architecture of Flan-T5-XL.

Training Data: Fine-tuned on 70K conversations collected from ShareGPT for diversified interactions.

Development Team: Brought to life by FastChat developers Dacheng Li, Lianmin Zheng, and Hao Zhang for state-of-the-art language processing.

Commercial and Research Application: Ideal for entrepreneurs and researchers with interests in NLP, ML, and AI.

License and Access: Accessibility and innovation promoted through the Apache License 2.0 for open-source development.

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Lets web applications use machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Enhances human-computer interaction with more intuitive AI-generated responses.

fastchat Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

fastchat Pricing Type

- Freemium

ggml.ai Pricing Type

- Freemium