GLM-130B vs Stellaris AI

When comparing GLM-130B vs Stellaris AI, which AI Large Language Model (LLM) tool shines brighter? We look at pricing, alternatives, upvotes, features, reviews, and more.

In a comparison between GLM-130B and Stellaris AI, which one comes out on top?

GLM-130B and Stellaris AI are both AI-powered large language model (LLM) tools. Among aitools.fyi users, GLM-130B leads narrowly, with 7 upvotes to Stellaris AI's 6.


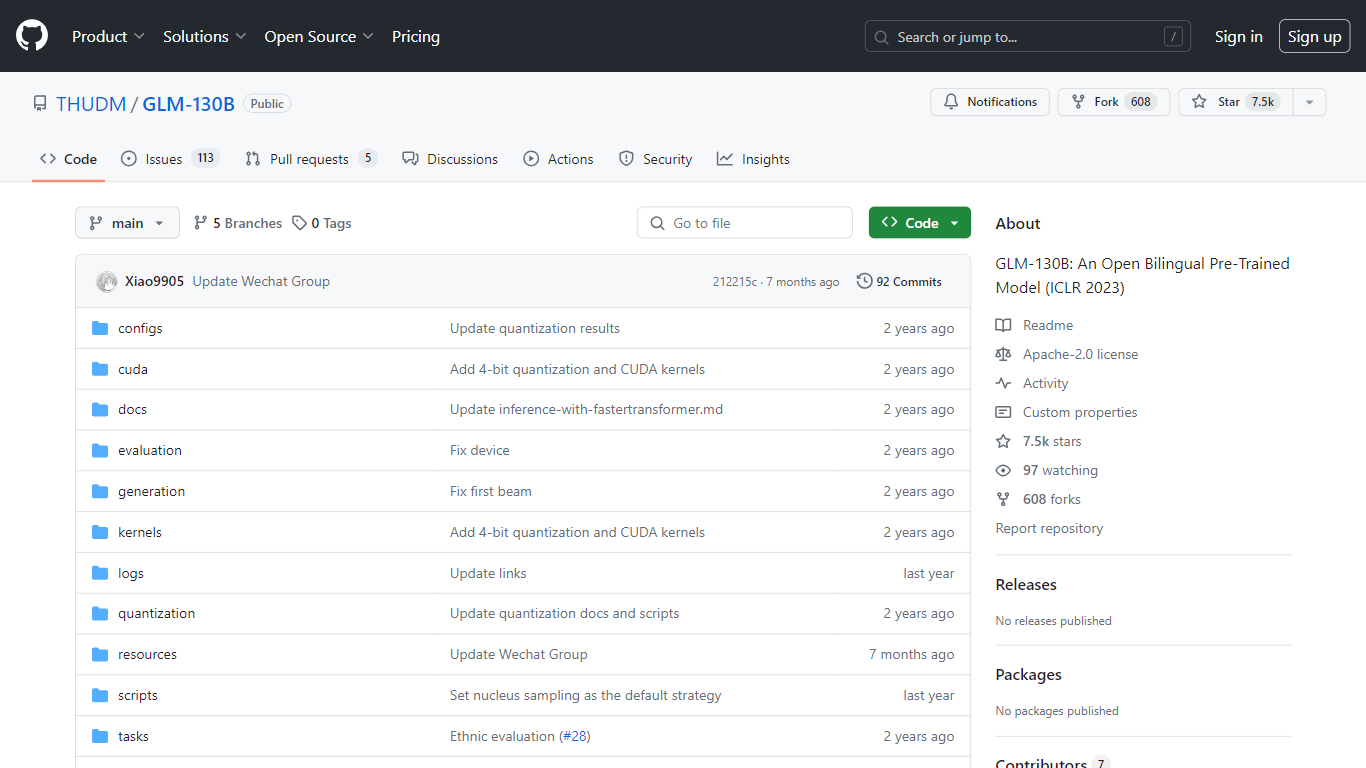

GLM-130B

What is GLM-130B?

GLM-130B, presented at ICLR 2023, is an open bilingual pre-trained model with 130 billion parameters. Built for bidirectional dense modeling in both English and Chinese, it is pre-trained with the General Language Model (GLM) algorithm and can run inference on a single server, either an A100 (40G * 8) or a V100 (32G * 8). With INT4 quantization the hardware requirements drop further: a server with 4 * RTX 3090 (24G) can host the model with minimal performance degradation.
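The hardware figures above can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below is only an illustration and is not taken from the GLM-130B project: it counts weight storage alone (no activations, caches, or framework overhead), treats "G" as 10^9 bytes, and the helper names `weight_gb` and `fits` are hypothetical.

```python
# Rough weight-memory estimate for a 130-billion-parameter model.
# Assumptions (not from the source): weights only, 1 GB = 10^9 bytes.
PARAMS = 130e9  # parameter count stated in the text


def weight_gb(bits_per_param: int, params: float = PARAMS) -> float:
    """Approximate size of the weights in GB at a given precision."""
    return params * bits_per_param / 8 / 1e9


def fits(bits_per_param: int, gpus: int, gb_per_gpu: int) -> bool:
    """True if the weights alone fit in the combined GPU memory."""
    return weight_gb(bits_per_param) <= gpus * gb_per_gpu


for name, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    size = weight_gb(bits)
    print(f"{name}: {size:.0f} GB of weights, "
          f"fits on 4 x 24 GB: {fits(bits, 4, 24)}, "
          f"fits on 8 x 40 GB: {fits(bits, 8, 40)}")
```

Under these assumptions, INT4 weights come to about 65 GB, which is within the 96 GB offered by four 24G cards, while 16-bit weights (about 260 GB) need something closer to the eight-GPU A100 server. Real deployments need additional headroom beyond the weights, so treat these numbers as a lower bound.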

During training, GLM-130B processed over 400 billion text tokens, split equally between Chinese and English. It offers strong bilingual support, outperforms comparable models on a range of datasets, and provides fast inference. The project also supports reproducibility by releasing open-source code and model checkpoints, with results covering more than 30 tasks.

Stellaris AI

What is Stellaris AI?

Join the forefront of AI technology with Stellaris AI's mission to create groundbreaking Native-Safe Large Language Models. At Stellaris AI, we prioritize safety and utility in our advanced SGPT-2.5 models, designed for general-purpose applications. We invite you to be part of this innovative journey by joining our waitlist. Our commitment to cutting-edge AI development is reflected in our dedication to native safety, ensuring our models provide reliable and secure performance across various domains. Stellaris AI is shaping the future of digital intelligence, and by joining us, you'll have early access to the SGPT-2.5, a product that promises to revolutionize the way we interact with technology. Don't miss the chance to collaborate with a community of forward-thinkers — submit your interest, and become a part of AI's evolution today.

GLM-130B Upvotes

- 7

Stellaris AI Upvotes

- 6

GLM-130B Top Features

Bilingual Support: GLM-130B supports both English and Chinese.

High Performance: Comprehensive benchmarks show GLM-130B outperforming rival models across diverse datasets.

Fast Inference: Supports rapid inference with SAT (SwissArmyTransformer) and FasterTransformer on a single A100 server.

Reproducibility: Results on more than 30 tasks can be reproduced using the open-source code and model checkpoints.

Cross-Platform Compatibility: Accommodates a range of platforms including NVIDIA, Hygon DCU, Ascend 910, and Sunway.

Stellaris AI Top Features

Native Safety: Provides reliable and secure performance for AI applications.

General Purpose: Designed to be versatile across a wide range of domains.

Innovation: At the cutting edge of Large Language Model development.

Community: Join a forward-thinking community invested in AI progress.

Early Access: Opportunity to access the advanced SGPT-2.5 model before general release.

GLM-130B Category

- Large Language Model (LLM)

Stellaris AI Category

- Large Language Model (LLM)

GLM-130B Pricing Type

- Free

Stellaris AI Pricing Type

- Freemium