Last updated 10-23-2025

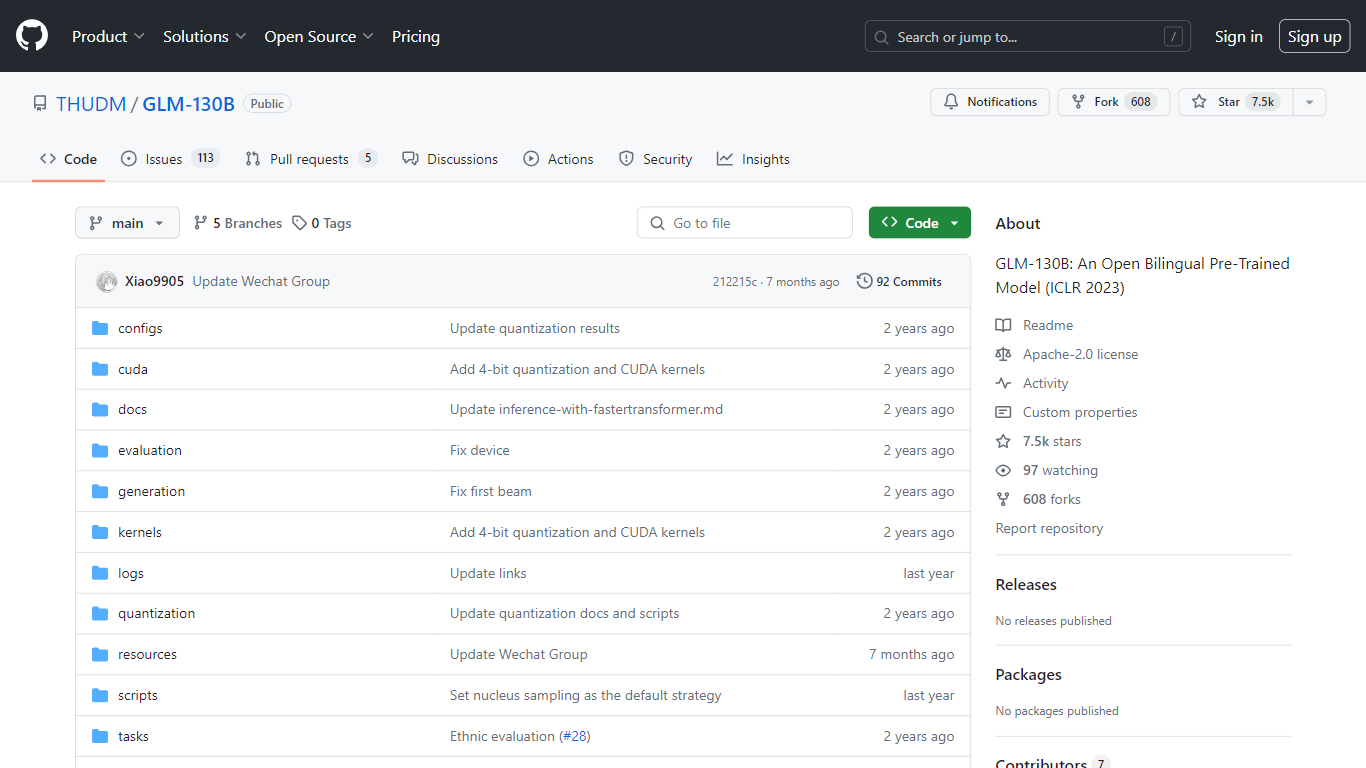

GLM-130B

GLM-130B, presented at ICLR 2023, is an open bilingual pre-trained model with 130 billion parameters. It is a bidirectional dense model for English and Chinese, pre-trained with the General Language Model (GLM) algorithm. Inference runs on a single server with either A100 (40G * 8) or V100 (32G * 8) GPUs. With INT4 quantization the hardware requirements drop further: a server with 4 * RTX 3090 (24G) can run the model with minimal performance degradation.
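The hardware figures above can be sanity-checked with some rough arithmetic. The sketch below is a weights-only estimate, not a measurement: it assumes a 16-bit (FP16) baseline precision, reads the "G" in the hardware labels as GB (10^9 bytes), and ignores activations, caches, and framework overhead. The function names are hypothetical and are not part of the GLM-130B code.

```python
# Rough, weights-only memory estimate for a 130B-parameter model.
# Assumptions (not from the source): FP16 baseline, 1 GB = 10^9 bytes,
# no allowance for activations, caches, or framework overhead.

PARAMS = 130e9  # parameter count stated in the text

# Bits used to store one weight at each precision.
BITS_PER_WEIGHT = {"FP16": 16, "INT8": 8, "INT4": 4}

# Server configurations named in the text: (number of GPUs, GB per GPU).
SERVERS = {
    "A100 (40G * 8)": (8, 40),
    "V100 (32G * 8)": (8, 32),
    "4 * RTX 3090 (24G)": (4, 24),
}


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Storage needed for the weights alone, in GB."""
    return num_params * bits / 8 / 1e9


def total_gpu_memory_gb(num_gpus: int, gb_per_gpu: int) -> int:
    """Aggregate GPU memory of one server, in GB."""
    return num_gpus * gb_per_gpu


if __name__ == "__main__":
    for precision, bits in BITS_PER_WEIGHT.items():
        needed = weight_memory_gb(PARAMS, bits)
        print(f"{precision}: about {needed:.0f} GB of weights")
        for name, (n, gb) in SERVERS.items():
            available = total_gpu_memory_gb(n, gb)
            verdict = "fit" if needed <= available else "do not fit"
            print(f"  {name}: {available} GB total, weights {verdict}")
```

Under these assumptions, INT4 weights take a quarter of the space of FP16 weights, which is why a four-GPU consumer server becomes a plausible target. A real deployment needs headroom beyond the weights, so treat the output as a lower bound.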

GLM-130B was trained on over 400 billion text tokens, split equally between Chinese and English. It offers bilingual support, reports better performance than comparable models on a range of datasets, and supports fast inference. The repository also supports reproducibility by providing open-source code and model checkpoints for more than 30 tasks.

Bilingual Support: GLM-130B supports both English and Chinese.

High Performance: The project's reported benchmarks show GLM-130B outperforming comparable models across a range of datasets.

Fast Inference: Supports inference with both SAT (SwissArmyTransformer) and FasterTransformer on a single A100 server.

Reproducibility: Results on more than 30 tasks can be reproduced with the open-source code and model checkpoints.

Cross-Platform Compatibility: Supports training and inference on NVIDIA, Hygon DCU, and Ascend 910, with Sunway support planned.

What is GLM-130B?

GLM-130B is a bilingual, bidirectional dense model with 130 billion parameters, pre-trained using the General Language Model (GLM) algorithm.

How much data was GLM-130B trained on?

The model was trained on over 400 billion text tokens, with 200 billion each for Chinese and English text.

Can the results produced by GLM-130B be reproduced?

Yes. Results on more than 30 tasks can be reproduced using the provided open-source code and model checkpoints.

Does GLM-130B support multiple hardware platforms?

Yes. GLM-130B supports training and inference on NVIDIA, Hygon DCU, and Ascend 910 platforms, and Sunway support is expected soon.

What is the main focus of the GLM-130B repository?

The repository focuses mainly on evaluating GLM-130B, with support for fast model inference and reproducible results.