GLM-130B vs ggml.ai

When comparing GLM-130B vs ggml.ai, which AI Large Language Model (LLM) tool shines brighter? We look at pricing, alternatives, upvotes, features, reviews, and more.

Between GLM-130B and ggml.ai, which one is superior?

Comparing GLM-130B and ggml.ai side by side, both AI-powered large language model (LLM) tools, GLM-130B holds a narrow lead in upvotes: aitools.fyi users have upvoted GLM-130B 7 times and ggml.ai 6 times.

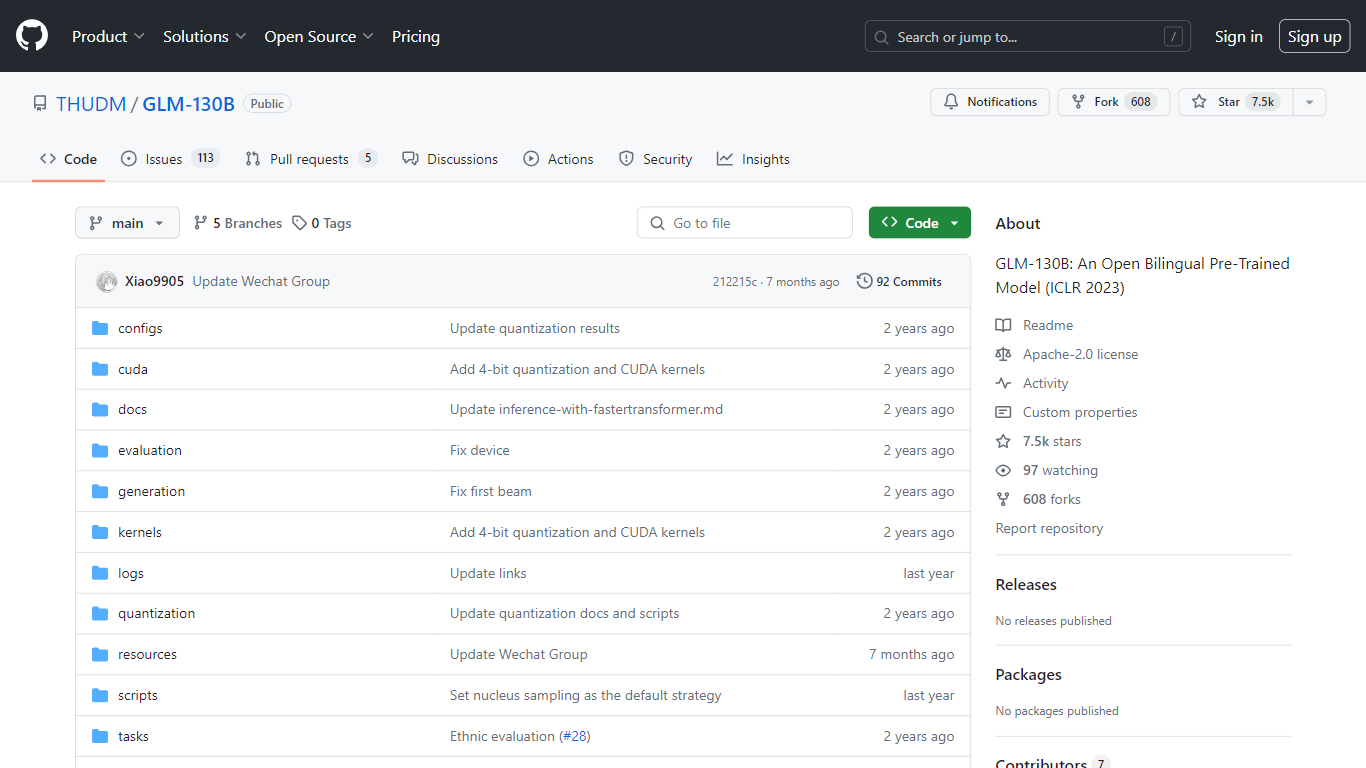

GLM-130B

What is GLM-130B?

GLM-130B, presented at ICLR 2023, is an open bilingual pre-trained model with 130 billion parameters. Built for bidirectional dense modeling in English and Chinese, it is pre-trained with the General Language Model (GLM) algorithm and can run inference on a single server, either an A100 (40G * 8) or a V100 (32G * 8). It also supports INT4 quantization, which lowers the hardware requirements further: a server with 4 * RTX 3090 (24G) can run the model with minimal performance degradation.
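The hardware figures above can be sanity-checked with a rough calculation. The sketch below estimates the size of the weights alone at a few bit widths and compares it with the combined GPU memory of each server. It assumes 1 GB = 1e9 bytes and ignores activations, caches, and framework overhead, so it is a lower bound rather than a real sizing guide. The function names `weight_gb` and `fits` are illustrative and not part of any GLM-130B tooling.

```python
# Back-of-the-envelope estimate of weight memory (weights only).
# Assumptions: 1 GB = 1e9 bytes; activations and runtime overhead are ignored.

PARAMS = 130e9  # parameter count stated in the description above


def weight_gb(params: float, bits_per_weight: int) -> float:
    """Return the size of the weights in gigabytes."""
    return params * bits_per_weight / 8 / 1e9


def fits(params: float, bits_per_weight: int, gpus: int, gb_per_gpu: int) -> bool:
    """True if the weights alone fit in the combined GPU memory."""
    return weight_gb(params, bits_per_weight) <= gpus * gb_per_gpu


if __name__ == "__main__":
    servers = [("A100", 8, 40), ("V100", 8, 32), ("RTX 3090", 4, 24)]
    for bits in (16, 8, 4):
        size = weight_gb(PARAMS, bits)
        for name, gpus, gb in servers:
            total = gpus * gb
            verdict = "fits" if fits(PARAMS, bits, gpus, gb) else "does not fit"
            print(f"{bits}-bit weights: {size:.0f} GB, "
                  f"{gpus} x {name} = {total} GB, {verdict}")
```

Under these assumptions, 16-bit weights need about 260 GB and 4-bit weights about 65 GB, which is consistent with the claim that INT4 brings the model within reach of four 24 GB cards. By the same arithmetic, 16-bit weights slightly exceed 8 x 32 GB, which suggests the V100 setup relies on a lower precision; that is an inference from the numbers, not something the description states.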

GLM-130B was trained on over 400 billion text tokens, split evenly between Chinese and English. It offers strong bilingual support, outperforms comparable models across various datasets, and delivers fast inference. Its repository also supports reproducibility by providing open-source code and model checkpoints that cover more than 30 tasks.

ggml.ai

What is ggml.ai?

ggml.ai brings machine learning capabilities to the edge with its tensor library. Built for large model support and high performance on common hardware platforms, ggml.ai lets developers run advanced AI algorithms without specialized equipment. The library is written in C and offers 16-bit float and integer quantization support, along with automatic differentiation and built-in optimization algorithms such as Adam and L-BFGS. It is optimized for Apple Silicon and uses AVX/AVX2 intrinsics on x86 architectures. Web-based applications can also use it via WebAssembly and WASM SIMD support. With zero memory allocations at runtime and no third-party dependencies, ggml.ai offers a minimal and efficient solution for on-device inference.
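To make the idea of integer quantization concrete, here is a minimal sketch of block-wise symmetric 4-bit quantization in plain Python. It is a generic illustration of the technique, not ggml's actual storage format or API: the block size of 32, the integer range of -7 to 7, and every function name are assumptions chosen for the example.

```python
# Illustrative block-wise symmetric 4-bit quantization.
# NOTE: a generic sketch; it does not reproduce ggml's formats or functions.

BLOCK_SIZE = 32  # hypothetical block length chosen for illustration


def quantize_block(values):
    """Map floats to integers in [-7, 7] plus one float scale for the block."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quants = [max(-7, min(7, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the stored integers and scale."""
    return [q * scale for q in quants]


def quantize(weights, block_size=BLOCK_SIZE):
    """Split the weights into blocks and quantize each block independently."""
    return [
        quantize_block(weights[i:i + block_size])
        for i in range(0, len(weights), block_size)
    ]


def dequantize(blocks):
    """Concatenate the dequantized blocks back into one list."""
    restored = []
    for scale, quants in blocks:
        restored.extend(dequantize_block(scale, quants))
    return restored
```

Each block stores one scale plus small integers instead of full-precision floats, so memory drops sharply while the rounding error per value stays within half a scale step. Using a separate scale per block keeps a single large weight from degrading precision everywhere else.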

Projects like whisper.cpp and llama.cpp demonstrate the high-performance inference capabilities of ggml.ai: whisper.cpp provides speech-to-text, while llama.cpp focuses on efficient inference of Meta's LLaMA large language model. The company welcomes contributions to its codebase and supports an open-core development model through the MIT license. As ggml.ai continues to expand, it is looking for full-time developers who share its vision for on-device inference to join its team.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

GLM-130B Upvotes

- 7

ggml.ai Upvotes

- 6

GLM-130B Top Features

Bilingual Support: GLM-130B supports both English and Chinese.

High Performance: Comprehensive benchmarks show GLM-130B outperforming rival models across diverse datasets.

Fast Inference: Uses SAT (SwissArmyTransformer) and FasterTransformer for rapid inference on a single A100 server.

Reproducibility: Results on more than 30 tasks can be reproduced with the open-source code and model checkpoints.

Cross-Platform Compatibility: Accommodates a range of platforms including NVIDIA, Hygon DCU, Ascend 910, and Sunway.

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Lets web applications use machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Enhances human-computer interaction with more intuitive AI-generated responses.

GLM-130B Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

GLM-130B Pricing Type

- Free

ggml.ai Pricing Type

- Freemium