GLM-130B vs Terracotta

In the clash of GLM-130B vs Terracotta, which AI Large Language Model (LLM) tool emerges victorious? We assess reviews, pricing, alternatives, features, upvotes, and more.

When we put GLM-130B and Terracotta head to head, which one emerges as the victor?

Let's take a closer look at GLM-130B and Terracotta, both AI-driven large language model (LLM) tools, and see what sets them apart. GLM-130B leads narrowly in upvotes: it has received 7 upvotes from aitools.fyi users, while Terracotta has received 6.


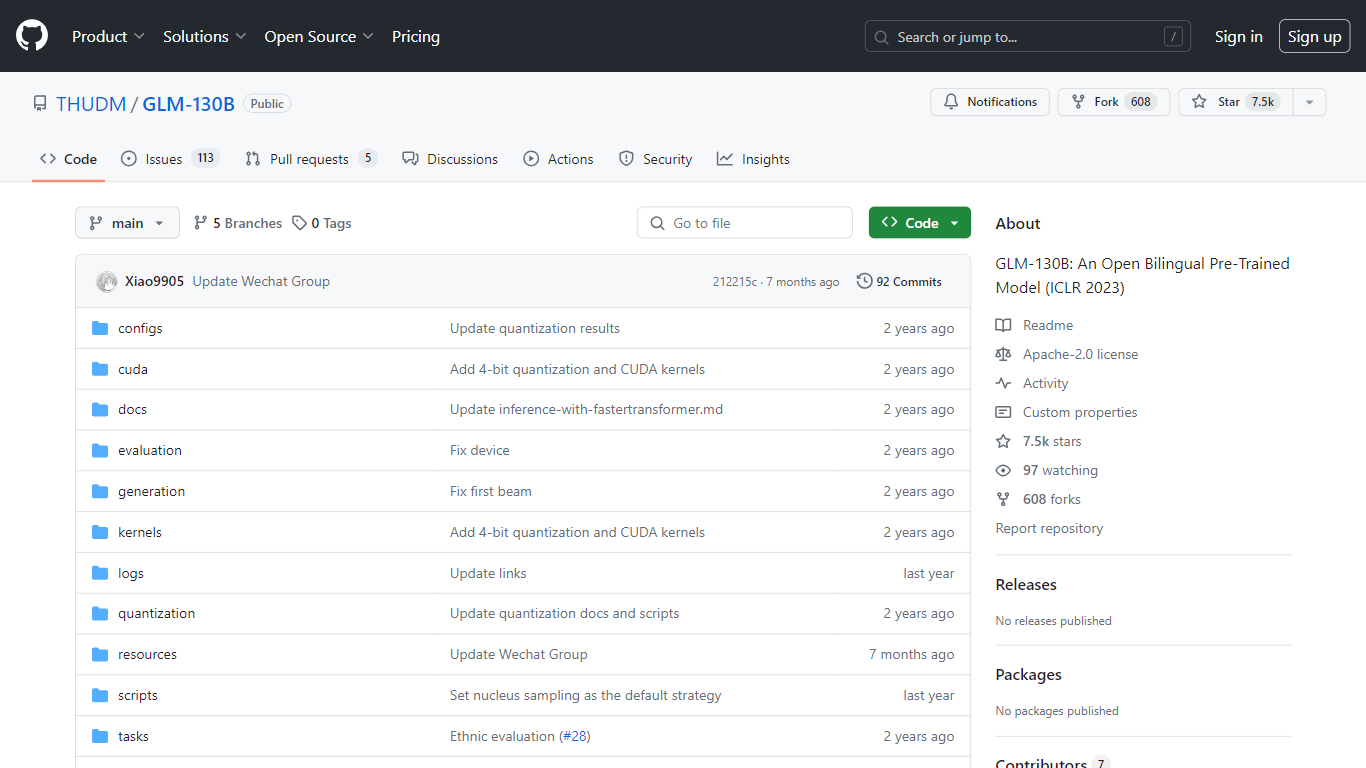

GLM-130B

What is GLM-130B?

GLM-130B, presented at ICLR 2023, is an open bilingual pre-trained model with 130 billion parameters. Built for bidirectional dense modeling in both English and Chinese, it is pre-trained with the General Language Model (GLM) algorithm and can run inference on a single server, either an A100 (40G * 8) or a V100 (32G * 8). With INT4 quantization, the hardware requirements drop further: a server with RTX 3090 (24G * 4) can run the model with minimal performance degradation.
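To make the hardware figures above more concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. It is an illustration only, not a calculation from the GLM-130B authors: it assumes "G" means GiB, counts only the weights (no activations, caches, or framework overhead), and the helper names `weight_gib` and `headroom_gib` are hypothetical.

```python
# Weights-only memory estimate for a 130-billion-parameter model.
# Assumptions: "G" is read as GiB, and activations, caches and framework
# overhead are ignored, so real requirements are higher than shown here.
PARAMS = 130e9  # parameter count quoted in the description

def weight_gib(params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights, in GiB."""
    return params * bits_per_param / 8 / 2**30

# (GPU count, memory per GPU in GiB) for the servers named in the description
SERVERS = {
    "8 x A100 40G": (8, 40),
    "8 x V100 32G": (8, 32),
    "4 x RTX 3090 24G": (4, 24),
}

def headroom_gib(server: str, bits_per_param: int) -> float:
    """Total GPU memory minus weight memory (negative means the weights cannot fit)."""
    count, per_gpu = SERVERS[server]
    return count * per_gpu - weight_gib(PARAMS, bits_per_param)

if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_gib(PARAMS, bits):6.1f} GiB")
    for server in SERVERS:
        for bits in (16, 8, 4):
            room = headroom_gib(server, bits)
            print(f"{server:<18} {bits:>2}-bit headroom: {room:7.1f} GiB")
```

Under these assumptions, 4-bit weights take a quarter of the 16-bit footprint, which is why a four-GPU RTX 3090 server becomes plausible once INT4 quantization is applied.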

During training, GLM-130B processed over 400 billion text tokens, split equally between Chinese and English. It offers strong bilingual support, outperforms comparable models across various datasets, and delivers fast inference. The repository also supports reproducibility by providing open-source code and model checkpoints for over 30 tasks.

Terracotta

What is Terracotta?

Terracotta is a platform designed to streamline the workflow of developers and researchers working with large language models (LLMs). It lets you manage, iterate on, and evaluate your fine-tuned models in one place. With Terracotta, you can securely upload data, fine-tune models for tasks such as classification and text generation, and create evaluations that compare model performance using both qualitative and quantitative metrics. It connects to major providers such as OpenAI and Cohere, giving you access to a broad range of LLM capabilities. Terracotta was created by Beri Kohen and Lucas Pauker, AI enthusiasts and Stanford graduates dedicated to advancing LLM development. An email list is available for updates on new features.

GLM-130B Upvotes

- 7

Terracotta Upvotes

- 6

GLM-130B Top Features

Bilingual Support: GLM-130B supports both English and Chinese.

High Performance: Comprehensive benchmarks show GLM-130B outperforming rival models across diverse datasets.

Fast Inference: Uses SwissArmyTransformer (SAT) and FasterTransformer for rapid inference on a single A100 server.

Reproducibility: Results on more than 30 tasks can be reproduced with the open-source code and model checkpoints.

Cross-Platform Compatibility: Accommodates a range of platforms including NVIDIA, Hygon DCU, Ascend 910, and Sunway.

Terracotta Top Features

Manage Many Models: Centrally handle all your fine-tuned models in one convenient place.

Iterate Quickly: Streamline the process of model improvement with fast qualitative and quantitative evaluations.

Multiple Providers: Seamlessly integrate with services from OpenAI and Cohere to supercharge your development process.

Upload Your Data: Upload and securely store your datasets for the fine-tuning of models.

Create Evaluations: Conduct in-depth comparative assessments of model performance using metrics like accuracy, BLEU, and confusion matrices.
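As a rough illustration of the kind of quantitative comparison described in the features above, the sketch below computes accuracy and a confusion matrix for two classification models. It does not use Terracotta's API, which is not documented here; the function names, labels, and sample predictions are all hypothetical, and BLEU is left out to keep the example short.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the gold labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def confusion_matrix(y_true, y_pred, labels):
    """Rows are gold labels, columns are predicted labels, in `labels` order."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]

if __name__ == "__main__":
    # Hypothetical gold labels and outputs from two fine-tuned models.
    labels = ["negative", "positive"]
    gold = ["positive", "negative", "positive", "negative"]
    predictions = {
        "model_a": ["positive", "negative", "negative", "negative"],
        "model_b": ["positive", "positive", "positive", "positive"],
    }
    for name, pred in predictions.items():
        print(name, "accuracy:", accuracy(gold, pred))
        for label, row in zip(labels, confusion_matrix(gold, pred, labels)):
            print(f"  gold={label:<8} predicted counts: {row}")
```

Reading the confusion matrix alongside accuracy shows which classes a model confuses, not just how often it is wrong, which is useful when two fine-tuned models have similar overall accuracy.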

GLM-130B Category

- Large Language Model (LLM)

Terracotta Category

- Large Language Model (LLM)

GLM-130B Pricing Type

- Free

Terracotta Pricing Type

- Freemium