Inferkit AI vs ggml.ai

In the battle of Inferkit AI vs ggml.ai, which AI Large Language Model (LLM) tool comes out on top? We compare reviews, pricing, alternatives, upvotes, features, and more.

Between Inferkit AI and ggml.ai, which one is superior?

Inferkit AI and ggml.ai are both AI-powered large language model (LLM) tools. Measured by upvotes, Inferkit AI holds a narrow lead, with 7 upvotes to ggml.ai's 6.

Not your cup of tea? Upvote your preferred tool and stir things up!

Inferkit AI

What is Inferkit AI?

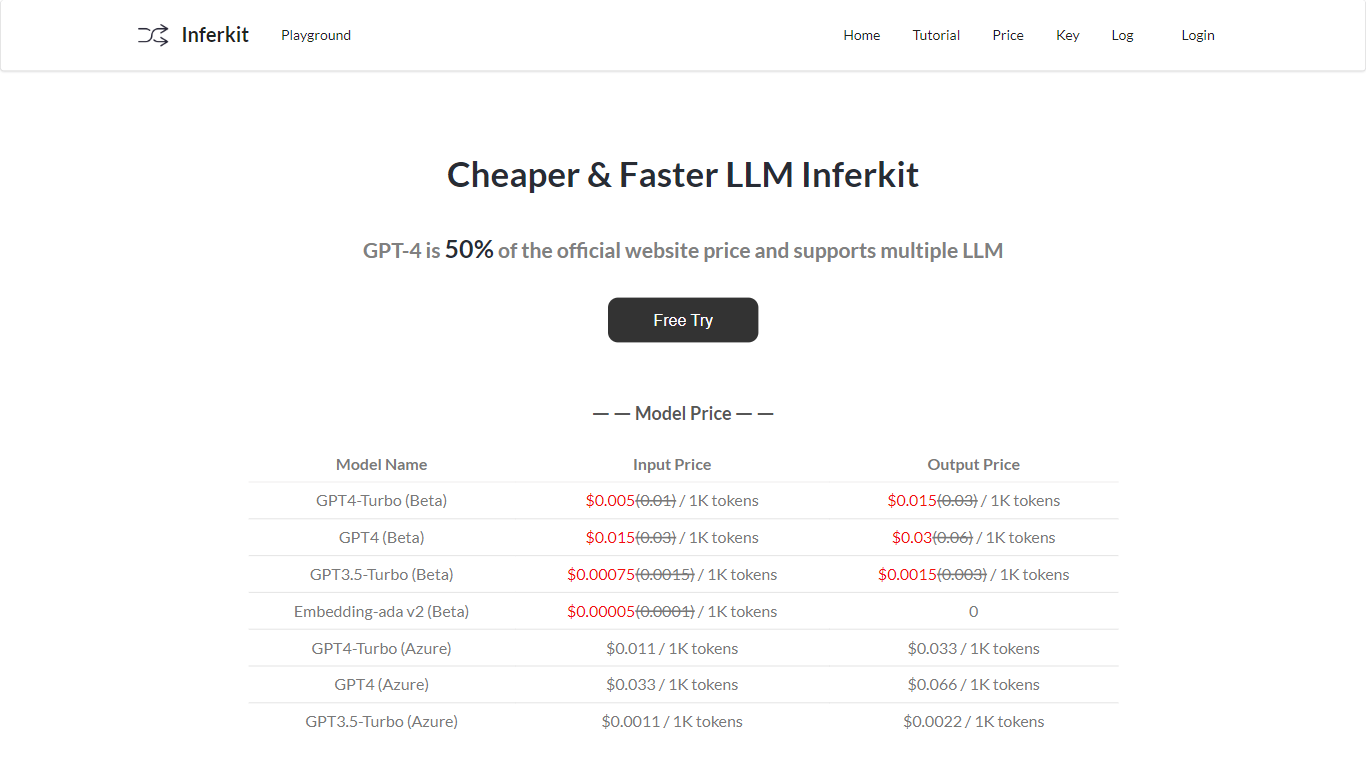

Inferkit AI offers what it calls a "Cheaper & Faster LLM router" for developers who want to integrate AI capabilities into their products. The platform provides a suite of APIs compatible with major models, such as those from OpenAI. Its large-scale model routing component is designed to improve the reliability of AI-based applications and to reduce the cost of developing them.
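How Inferkit AI's router works internally is not described here. As a generic illustration of how an LLM router can lower cost and improve reliability, the sketch below tries the cheapest model first and falls back to the next one when a call fails. The model names, prices, and the `call_model` stub are hypothetical and are not part of Inferkit AI's API.

```python
# Hypothetical sketch of cost-aware routing with fallback.
# Model names, prices, and call_model are invented for illustration only.

class ProviderError(Exception):
    """Raised when a model call fails."""


MODELS = [
    {"name": "small-model", "cost_per_1k_tokens": 0.5},
    {"name": "large-model", "cost_per_1k_tokens": 3.0},
]


def call_model(name, prompt, unavailable=()):
    """Stand-in for a real API call; fails if the model is marked unavailable."""
    if name in unavailable:
        raise ProviderError(f"{name} is unavailable")
    return f"[{name}] reply to: {prompt}"


def route(prompt, unavailable=()):
    """Try models from cheapest to most expensive; return the first success."""
    errors = []
    for model in sorted(MODELS, key=lambda m: m["cost_per_1k_tokens"]):
        try:
            return model["name"], call_model(model["name"], prompt, unavailable)
        except ProviderError as exc:
            errors.append(str(exc))
    raise ProviderError("; ".join(errors))


used, reply = route("Summarize this text.")
fallback_used, _ = route("Summarize this text.", unavailable=("small-model",))
```

In this sketch the first request is served by the cheaper model, and the second falls back to the more expensive one only because the cheaper model is marked as unavailable.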

The platform is currently in beta, and early adopters can get a 50% discount through Inferkit AI's official website.

Simple to use yet powerful in performance, Inferkit AI is tailored to streamline the development process, offering an efficient and scalable solution for businesses and independent developers alike. With Inferkit AI, the promise of accessible, cost-effective AI technology becomes a tangible reality, empowering innovation and creativity in the realm of AI application development.

ggml.ai

What is ggml.ai?

ggml.ai is at the forefront of AI technology, bringing powerful machine learning capabilities directly to the edge with its innovative tensor library. Built for large model support and high performance on common hardware platforms, ggml.ai enables developers to implement advanced AI algorithms without the need for specialized equipment. The platform, written in the efficient C programming language, offers 16-bit float and integer quantization support, along with automatic differentiation and various built-in optimization algorithms like ADAM and L-BFGS. It boasts optimized performance for Apple Silicon and leverages AVX/AVX2 intrinsics on x86 architectures. Web-based applications can also exploit its capabilities via WebAssembly and WASM SIMD support. With its zero runtime memory allocations and absence of third-party dependencies, ggml.ai presents a minimal and efficient solution for on-device inference.
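To make the idea of integer quantization concrete, here is a minimal sketch of a generic block-wise 8-bit scheme: each block of weights is stored as small integer codes plus one floating-point scale. It is an illustration of the general technique only. It does not reproduce ggml's actual storage formats or API, and the function names are hypothetical.

```python
# Illustrative only: generic symmetric 8-bit block quantization,
# not ggml's actual formats or functions.

def quantize_block(values):
    """Map a block of floats to integer codes in [-127, 127] plus one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [round(v / scale) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from the integer codes and the scale."""
    return [c * scale for c in codes]


weights = [0.12, -0.50, 0.33, 0.01, -0.27, 0.44, -0.08, 0.19]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)

# The rounding step bounds the per-value error by half of one scale step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight then needs one byte instead of four, at the price of a small rounding error. That trade-off is what lets large models fit in memory on common hardware.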

Projects like whisper.cpp and llama.cpp demonstrate the high-performance inference capabilities of ggml.ai, with whisper.cpp providing speech-to-text solutions and llama.cpp focusing on efficient inference of Meta's LLaMA large language model. The company welcomes contributions to its codebase and follows an open-core development model, with the code released under the MIT license. As ggml.ai continues to expand, it is looking for talented full-time developers who share its vision for on-device inference to join its team.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

Inferkit AI Upvotes

- 7

ggml.ai Upvotes

- 6

Inferkit AI Top Features

Cost-Effective Solution: Attractive pricing with a 50% discount during the beta phase, reducing financial barriers to advanced AI integration.

Reliable Platform: Designed for stability and dependability, ensuring smooth operation of AI functionalities within applications.

Large-Scale Model Routing: Facilitates the integration of various AI models, including those from noted providers like OpenAI.

Comprehensive API Collection: Offers a wide range of APIs to cater to diverse development needs and use cases.

Beta Phase Advantage: Early adopters get the chance to experience the platform's capabilities at a discounted rate.

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Lets web applications use machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Enhances human-computer interaction with more intuitive AI-generated responses.

Inferkit AI Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

Inferkit AI Pricing Type

- Freemium

ggml.ai Pricing Type

- Freemium