Neuralangelo Research Reconstructs 3D Scenes | NVIDIA

Neuralangelo is an AI model developed by NVIDIA Research that transforms 2D video clips into detailed 3D structures using neural networks. It creates lifelike virtual replicas of objects such as buildings, sculptures, and complex scenes by capturing intricate details and textures from multiple viewpoints. This technology is designed for creative professionals and developers working in virtual reality, digital twins, robotics, and game development, enabling them to import high-fidelity 3D models into their design workflows.

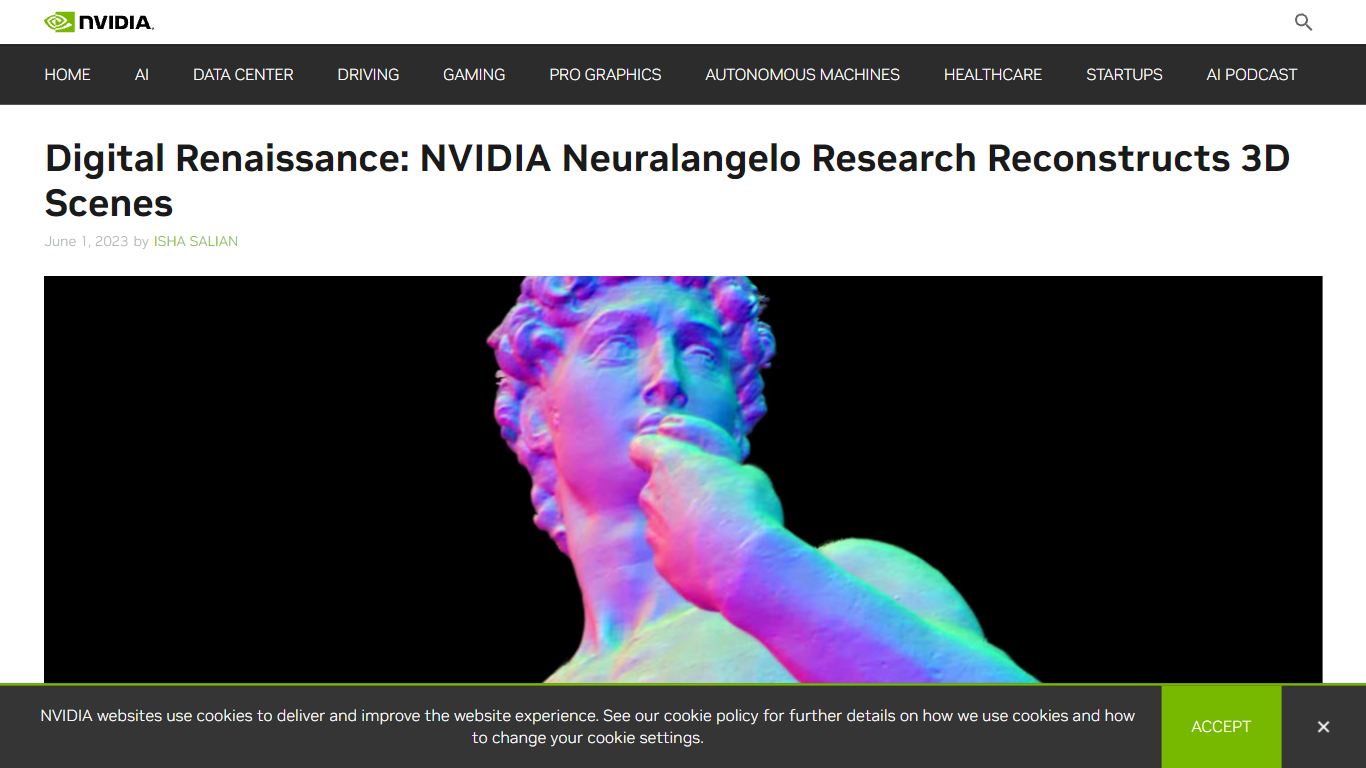

Unlike earlier methods, Neuralangelo reproduces complex materials such as roof shingles, glass panes, and smooth marble surfaces with high accuracy. It builds on instant neural graphics primitives, the technology behind NVIDIA Instant NeRF, to capture fine details and repetitive texture patterns that earlier methods struggled to reproduce. The model works from selected video frames that show the subject from different viewpoints. Once the camera position of each frame has been determined, it builds an initial 3D representation and then refines it, much like a sculptor shaping a subject from multiple angles.
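As an illustration of the frame-selection step, the sketch below picks evenly spaced frame indices from a clip. The source does not say how Neuralangelo chooses its frames, so uniform sampling and the function name `select_frame_indices` are assumptions made for this example only.

```python
def select_frame_indices(total_frames, num_selected):
    """Return evenly spaced frame indices spanning the whole clip.

    Hypothetical helper: uniform sampling is an assumption, not the
    documented Neuralangelo selection strategy.
    """
    if total_frames <= 0 or num_selected <= 0:
        return []
    if num_selected >= total_frames:
        # Fewer frames available than requested: keep them all.
        return list(range(total_frames))
    if num_selected == 1:
        # A single frame: take the middle of the clip.
        return [total_frames // 2]
    # Spread the picks so the first and last frames are both included.
    step = (total_frames - 1) / (num_selected - 1)
    return [round(i * step) for i in range(num_selected)]


if __name__ == "__main__":
    # A 101-frame clip reduced to 5 frames.
    print(select_frame_indices(101, 5))
```

In practice the selected frames would then be passed to a structure-from-motion tool to recover camera positions before reconstruction starts.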

Neuralangelo reconstructs both individual objects and large-scale scenes, including building interiors and exteriors. Demonstrations include detailed models of Michelangelo’s David and of the park at NVIDIA’s Bay Area campus. This makes it a versatile tool for industries that require realistic digital replicas of real-world environments and objects.

The model simplifies the creation of usable virtual assets from footage captured even by smartphones, speeding up workflows for artists, designers, and engineers. By bridging the gap between physical and digital worlds, Neuralangelo enhances the realism and efficiency of projects in entertainment, industrial design, and robotics.

NVIDIA has made Neuralangelo available on GitHub, encouraging developers and researchers to explore and build upon this technology. It represents a significant step forward in 3D reconstruction, combining advanced neural rendering with practical usability for a broad range of applications.

🎥 Converts 2D video clips into detailed 3D models for easy use

🏛️ Captures complex textures like glass and marble with high accuracy

🖼️ Supports reconstruction of both small objects and large scenes

📱 Works with smartphone footage, simplifying the capture process

💻 Open-source availability on GitHub for developer access

Pros:

Produces high-fidelity 3D reconstructions with fine texture details

Handles a wide range of objects from sculptures to building interiors

Accessible for creative professionals using common video footage

Leverages advanced neural rendering for improved accuracy

Available as open-source, encouraging community development

Cons:

Requires multiple video angles for best results, limiting single-view use

May need technical expertise to integrate into complex workflows

What types of objects can Neuralangelo reconstruct?

Neuralangelo can reconstruct a wide range of subjects, from individual objects such as Michelangelo’s David to large-scale scenes such as building interiors and exteriors.

What input does Neuralangelo require to create 3D models?

It requires 2D video clips taken from multiple angles to capture different viewpoints of the object or scene.

Can Neuralangelo handle complex textures and materials?

Yes, it accurately reproduces complex materials like roof shingles, glass panes, and smooth marble surfaces.

Is Neuralangelo available for public use?

Yes, Neuralangelo is available on GitHub, allowing developers and researchers to access and use the model.

What industries benefit most from Neuralangelo?

Industries such as virtual reality, digital twins, robotics, game development, and industrial design benefit from its realistic 3D reconstructions.

Does Neuralangelo require specialized hardware?

Capturing the footage does not require specialized hardware: video from standard devices such as smartphones is sufficient. Processing that footage into a 3D model benefits from NVIDIA GPUs.

How does Neuralangelo improve over previous 3D reconstruction methods?

It uses instant neural graphics primitives to better capture fine details and repetitive textures that prior methods struggled with.
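To make the term concrete: instant neural graphics primitives store learned features in hash tables indexed by grid position, at several grid resolutions at once. The sketch below shows only the indexing part of that idea, using the spatial hash and geometric resolution schedule described in the Instant NGP paper. The function names and the example parameters are illustrative assumptions, and this is not Neuralangelo's own code.

```python
import math

# Multipliers of the spatial hash described in the Instant NGP paper.
PRIMES = (1, 2654435761, 805459861)


def level_resolutions(n_min, n_max, num_levels):
    """Grid resolutions growing geometrically from n_min to n_max."""
    if num_levels == 1:
        return [n_min]
    growth = math.exp((math.log(n_max) - math.log(n_min)) / (num_levels - 1))
    # The small epsilon guards against floating-point error before flooring.
    return [int(math.floor(n_min * growth ** level + 1e-9))
            for level in range(num_levels)]


def hash_index(ix, iy, iz, table_size):
    """Map integer grid coordinates to a slot in a fixed-size table."""
    h = (ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])
    return h % table_size


def corner_indices(point, resolution, table_size):
    """Table slots for the 8 grid corners around a point in [0, 1]^3."""
    base = [int(math.floor(c * resolution)) for c in point]
    slots = []
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                slots.append(hash_index(base[0] + dx, base[1] + dy,
                                        base[2] + dz, table_size))
    return slots


if __name__ == "__main__":
    # Example parameters chosen for illustration only.
    for res in level_resolutions(16, 512, 6):
        print(res, corner_indices((0.3, 0.6, 0.9), res, 2 ** 14))
```

In a full model, the feature vectors stored at those eight slots would be interpolated at each level and concatenated across levels. The fine levels are what let such a model represent small details and repeated patterns.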