Last updated 02-10-2024

AI21Labs

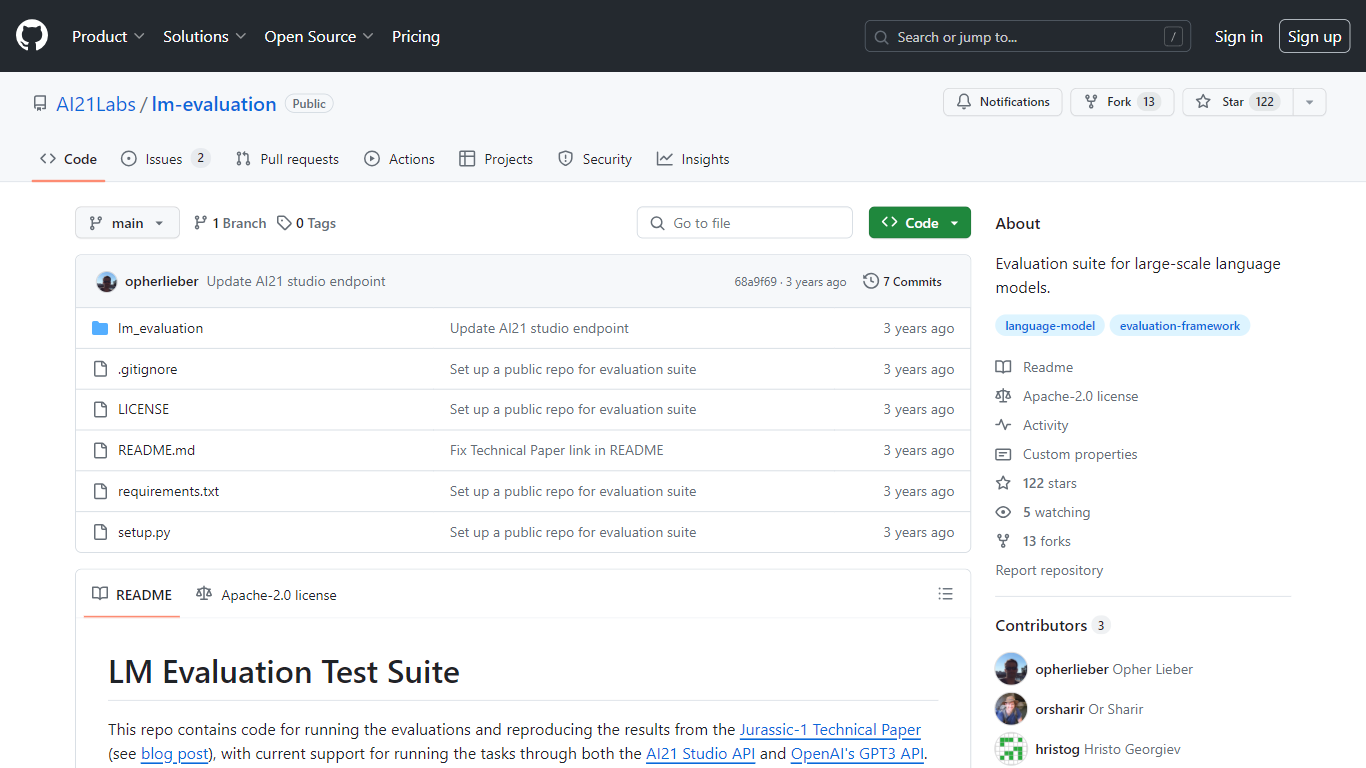

AI21 Labs' lm-evaluation is an evaluation suite for measuring the performance of large language models. Developers and researchers can use it to run a battery of benchmark tasks against a model and compare the results. The suite can run its tasks through either the AI21 Studio API or OpenAI's GPT-3 API.

The project is open source, and anyone can contribute to it and interact with its community on GitHub. Setup is straightforward, and the suite covers several task types, including multiple-choice and document probability tasks, along with others described in the Jurassic-1 Technical Paper. The repository documents installation and usage, including how to run the suite through each supported provider, which makes it a practical starting point for comparing and improving language models.
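Multiple-choice tasks are commonly scored by asking the model how likely each answer option is as a continuation of the question and picking the most likely one. The Python sketch below illustrates that general technique; it is not lm-evaluation's code, and the `logprob` callable, the function names, and the per-character length normalization are assumptions made for illustration.

```python
import math
from typing import Callable, Sequence

# Hypothetical callable: returns the total natural-log probability a model
# assigns to `continuation` when it follows `context`.
LogProbFn = Callable[[str, str], float]


def score_multiple_choice(
    context: str,
    options: Sequence[str],
    logprob: LogProbFn,
    normalize: bool = True,
) -> int:
    """Return the index of the option the model rates most likely.

    When `normalize` is True, each option's log-probability is divided by its
    length in characters, so longer options are not penalized simply for
    containing more text. (One common choice, assumed here.)
    """
    if not options:
        raise ValueError("options must not be empty")
    best_index, best_score = 0, -math.inf
    for i, option in enumerate(options):
        score = logprob(context, option)
        if normalize:
            score /= max(len(option), 1)
        if score > best_score:
            best_index, best_score = i, score
    return best_index


def accuracy(predictions: Sequence[int], gold: Sequence[int]) -> float:
    """Fraction of items whose predicted option index matches the gold index."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("predictions and gold must be non-empty and equal length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


if __name__ == "__main__":
    # Toy stand-in for a real model: fixed log-probabilities per option.
    toy = {"London": -6.0, "Paris": -1.0, "Berlin": -7.5}
    pred = score_multiple_choice(
        "The capital of France is",
        ["London", "Paris", "Berlin"],
        lambda ctx, opt: toy[opt],
    )
    print(pred)  # 1 ("Paris")
```

Whether a benchmark normalizes by characters, by tokens, or not at all can change which option wins, so it is worth checking how each task defines its metric before comparing numbers.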

Versatile Testing: Supports a variety of tasks, including multiple-choice and document probability tasks.

Multiple Providers: Works with both the AI21 Studio API and OpenAI's GPT-3 API, so the same tasks can be run against models from either provider.

Open Source: Accepts contributions and community collaboration on GitHub.

Detailed Documentation: Provides clear installation and usage guidelines.

Accessibility: Licensing and repository details are published alongside the code, so users can see how the project may be used and how it is organized.
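Document probability tasks measure how much probability a model assigns to a whole piece of text. Given the per-token log-probabilities a model returns for a document, the usual summary figures can be computed as in the Python sketch below. The function name and its inputs are hypothetical and this is not lm-evaluation's implementation; it only shows the arithmetic. Reporting bits per character alongside perplexity is useful because models with different tokenizers split the same text into different numbers of tokens, which makes per-token figures hard to compare across models.

```python
import math
from typing import Dict, Sequence


def document_metrics(token_logprobs: Sequence[float], num_chars: int) -> Dict[str, float]:
    """Summarize a document's likelihood from per-token log-probabilities.

    token_logprobs: natural-log probability the model assigned to each token
        of the document (assumed input; how a provider exposes these varies).
    num_chars: document length in characters, used for a
        tokenizer-independent bits-per-character figure.
    """
    if not token_logprobs or num_chars <= 0:
        raise ValueError("need at least one token and a positive character count")
    total = sum(token_logprobs)          # log P(document), natural log
    mean = total / len(token_logprobs)   # average log-prob per token
    return {
        "total_logprob": total,
        "mean_logprob_per_token": mean,
        "perplexity": math.exp(-mean),   # exp of mean negative log-likelihood
        "bits_per_char": -total / (num_chars * math.log(2)),  # nats -> bits, per char
    }


if __name__ == "__main__":
    # Four tokens, each assigned probability 0.5, covering 8 characters.
    print(document_metrics([math.log(0.5)] * 4, num_chars=8))
```

In the toy input each token has probability 0.5, so the perplexity works out to 2, and the document costs 4 bits spread over 8 characters, or 0.5 bits per character.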

1) What is lm-evaluation?

lm-evaluation is a suite designed to evaluate the performance of large-scale language models.

2) How can I contribute to the lm-evaluation project?

lm-evaluation is developed in the open on GitHub. With a GitHub account, you can report issues or submit pull requests to the project's repository.

3) Which providers' APIs are supported in lm-evaluation?

lm-evaluation can run its tasks through the AI21 Studio API and OpenAI's GPT-3 API.
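Supporting more than one API usually means hiding each provider behind a common interface, so task code only asks for log-probabilities and never deals with a specific client. The Python sketch below shows that pattern with an offline fake provider. The interface, the registry, and the `"ai21"` and `"openai"` keys are hypothetical and do not describe how lm-evaluation itself is organized.

```python
from dataclasses import dataclass, field
from typing import Dict, Protocol


class LogProbProvider(Protocol):
    """Hypothetical interface each provider adapter would implement."""

    def continuation_logprob(self, context: str, continuation: str) -> float:
        ...


@dataclass
class FakeProvider:
    """Offline stand-in for a real API client; looks up fixed scores."""

    table: Dict[str, float] = field(default_factory=dict)
    default: float = -100.0  # score for continuations not in the table

    def continuation_logprob(self, context: str, continuation: str) -> float:
        return self.table.get(continuation, self.default)


_REGISTRY: Dict[str, LogProbProvider] = {}


def register_provider(name: str, provider: LogProbProvider) -> None:
    """Make a provider available under a short name."""
    _REGISTRY[name] = provider


def get_provider(name: str) -> LogProbProvider:
    """Look up a registered provider, with a helpful error for unknown names."""
    try:
        return _REGISTRY[name]
    except KeyError:
        known = ", ".join(sorted(_REGISTRY)) or "none"
        raise ValueError(f"unknown provider {name!r} (registered: {known})") from None


if __name__ == "__main__":
    # In a real setup these would wrap the AI21 Studio and OpenAI clients.
    register_provider("ai21", FakeProvider({"yes": -0.2}))
    register_provider("openai", FakeProvider({"yes": -0.5}))
    for name in ("ai21", "openai"):
        print(name, get_provider(name).continuation_logprob("Is water wet?", "yes"))
```

Because tasks depend only on `continuation_logprob`, adding another provider under this design would mean writing one more adapter rather than changing every task.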

4) How do I set up lm-evaluation?

To set up the evaluation suite, clone the repository, change into the lm-evaluation directory, and install the dependencies with pip.

5) What license does lm-evaluation use?

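lm-evaluation is licensed under the Apache-2.0 license, a permissive open-source license that allows use, modification, and redistribution, provided the license text and required notices are retained.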