ELECTRA vs ggml.ai

Explore the showdown between ELECTRA and ggml.ai and find out which AI large language model (LLM) tool wins. We analyze upvotes, features, reviews, pricing, alternatives, and more.

In a face-off between ELECTRA and ggml.ai, which one takes the crown?

Placing ELECTRA and ggml.ai, two AI-powered large language model (LLM) tools, side by side reveals several important similarities and differences. On upvotes, the result is a draw: both tools have earned the same number.

ELECTRA

What is ELECTRA?

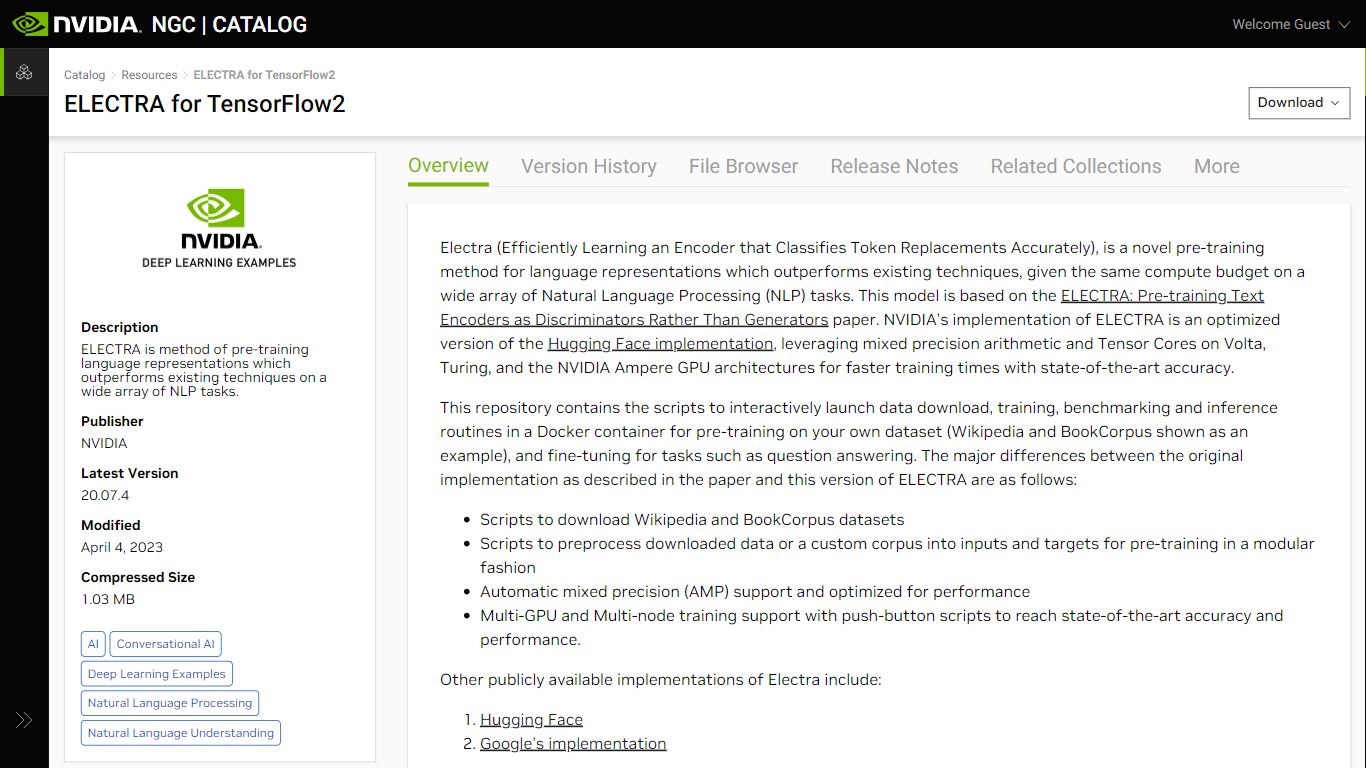

ELECTRA for TensorFlow2, available on NVIDIA NGC, represents a breakthrough in pre-training language representations for Natural Language Processing (NLP) tasks. ELECTRA trains an encoder to classify which input tokens have been replaced, and within the same computational budget it outperforms existing pre-training methods across a range of NLP applications. The model is based on the research paper "ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators" (Clark et al., 2020), and NVIDIA's implementation adds optimizations such as mixed precision arithmetic and Tensor Core utilization on Volta, Turing, and NVIDIA Ampere GPU architectures. These optimizations shorten training time while maintaining state-of-the-art accuracy.

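Architecturally, ELECTRA differs from conventional models such as BERT by using a generator-discriminator framework: a small generator replaces some input tokens with plausible alternatives, and the discriminator learns to identify, for every token, whether it was replaced. The setup is inspired by generative adversarial networks (GANs), although the generator is trained with maximum likelihood rather than adversarially. Because the discriminator learns from every input position rather than only a masked subset, training is more sample-efficient. This implementation is user-friendly, offering scripts for data download, preprocessing, training, benchmarking, and inference, which make it easier for researchers to work with custom datasets and to fine-tune on tasks such as question answering.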
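To make the replaced-token-detection idea concrete, here is a minimal pure-Python sketch of how the discriminator's training targets and loss can be formed. It is not code from NVIDIA's implementation: the helper names `corrupt`, `rtd_labels`, and `rtd_loss` are hypothetical, the uniform random "generator" stands in for ELECTRA's small masked language model, and the discriminator probabilities are made-up inputs rather than model outputs.

```python
import math
import random


def corrupt(tokens, vocab, replace_prob, rng):
    """Hypothetical stand-in for ELECTRA's generator.

    The real generator is a small masked language model that proposes
    plausible replacements; here each token is swapped, with probability
    `replace_prob`, for a token drawn uniformly from `vocab`.
    """
    return [rng.choice(vocab) if rng.random() < replace_prob else tok
            for tok in tokens]


def rtd_labels(original, corrupted):
    """Replaced-token-detection targets: 1 where the token changed, else 0.

    A sampled token that happens to equal the original is labeled 0.
    """
    return [int(o != c) for o, c in zip(original, corrupted)]


def rtd_loss(p_replaced, labels):
    """Mean binary cross-entropy over every position, not only masked ones."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for p, y in zip(p_replaced, labels):
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(labels)


if __name__ == "__main__":
    original = ["the", "chef", "cooked", "the", "meal"]
    corrupted = ["the", "chef", "ate", "the", "meal"]
    labels = rtd_labels(original, corrupted)
    print(labels)  # [0, 0, 1, 0, 0]
    # Made-up discriminator outputs: P(token was replaced) at each position.
    print(round(rtd_loss([0.1, 0.1, 0.9, 0.1, 0.1], labels), 4))  # 0.1054
    # Random corruption with the toy generator (output depends on the seed).
    sample = corrupt(original, ["ate", "ran", "dish"], 0.3, random.Random(0))
    print(sample, rtd_labels(original, sample))
```

Because `rtd_loss` averages over every position, each token contributes a training signal, which is the efficiency argument for ELECTRA; a BERT-style masked-LM loss would cover only the masked subset.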

ggml.ai

What is ggml.ai?

ggml.ai is at the forefront of AI technology, bringing machine learning capabilities directly to the edge with its tensor library. Built to support large models and deliver high performance on common hardware, ggml.ai lets developers run advanced AI algorithms without specialized equipment. The library is written in C and supports 16-bit floats, integer quantization, automatic differentiation, and built-in optimization algorithms such as ADAM and L-BFGS. It is optimized for Apple Silicon and uses AVX/AVX2 intrinsics on x86 architectures, and web applications can use it through WebAssembly and WASM SIMD. With zero memory allocations during runtime and no third-party dependencies, ggml.ai offers a minimal, efficient solution for on-device inference.
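Integer quantization is what lets large models fit on ordinary hardware. The following sketch shows the general idea of block-wise 4-bit quantization: each block of values shares one floating-point scale and stores small integers. It is an illustration under simplifying assumptions, not ggml's actual storage format; the block size of 32, the symmetric [-8, 7] range, and the function names are choices made for this example only.

```python
def quantize_block(values):
    """Symmetric 4-bit quantization of one block (illustrative, not ggml's format).

    Returns one float scale plus integers in [-8, 7]; the largest-magnitude
    value maps to +/-7.
    """
    amax = max(abs(v) for v in values)
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, q


def quantize(values, block_size=32):
    """Split `values` into blocks, each with its own scale."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize(blocks):
    """Reconstruct approximate floats: value = scale * integer."""
    out = []
    for scale, q in blocks:
        out.extend(scale * x for x in q)
    return out


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0, 0.41, -0.88]
    blocks = quantize(weights, block_size=4)
    restored = dequantize(blocks)
    for w, r in zip(weights, restored):
        print(f"{w:+.3f} -> {r:+.3f}")
```

With 32 values per block and a 16-bit scale, a block in this sketch would need 16 bytes of packed 4-bit integers plus 2 bytes for the scale, 18 bytes in total, versus 64 bytes for the same values in fp16. The price is a reconstruction error of at most half a scale step per value.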

Projects such as whisper.cpp and llama.cpp demonstrate ggml.ai's high-performance inference: whisper.cpp provides speech-to-text, and llama.cpp focuses on efficient inference of Meta's LLaMA large language model. The company welcomes contributions to its codebase and follows an open-core development model, releasing its code under the MIT license. As ggml.ai expands, it is looking for talented full-time developers who share its vision for on-device inference.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

ELECTRA Top Features

Mixed Precision Support: Enhanced training speed using mixed precision arithmetic on compatible NVIDIA GPU architectures.

Multi-GPU and Multi-Node Training: Supports distributed training across multiple GPUs and nodes, facilitating faster model development.

Pre-training and Fine-tuning Scripts: Includes scripts to download and preprocess datasets, enabling easy setup for pre-training and fine-tuning.

Advanced Model Architecture: Integrates a generator-discriminator scheme for more effective learning of language representations.

Optimized Performance: Leverages Tensor Core optimizations and Automatic Mixed Precision (AMP) to accelerate model training.
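Mixed precision training usually relies on loss scaling, because small gradients can underflow to zero in 16-bit floats. The sketch below simulates this with Python's standard `struct` module, whose `'e'` format converts to and from IEEE 754 half precision. It is a simplified model of the idea behind AMP, not NVIDIA's or TensorFlow's implementation: `to_fp16` and `scaled_step` are hypothetical helpers, and halving the scale on overflow is one common dynamic-scaling rule assumed here.

```python
import math
import struct


def to_fp16(x):
    """Round a Python float to IEEE 754 half precision and back."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:  # too large for fp16: mimic fp16 overflow to inf
        return math.copysign(math.inf, x)


def scaled_step(grads_fp32, scale):
    """One simplified mixed-precision step with loss scaling.

    Multiplying the loss by `scale` multiplies every gradient by `scale`,
    so the fp16 gradient is modeled as to_fp16(g * scale). Gradients are
    then unscaled in full precision. Returns (grads or None, new_scale).
    """
    fp16 = [to_fp16(g * scale) for g in grads_fp32]
    if any(math.isinf(g) or math.isnan(g) for g in fp16):
        return None, scale / 2  # overflow: skip this update, back off the scale
    return [g / scale for g in fp16], scale


if __name__ == "__main__":
    print(to_fp16(1e-8))  # 0.0: without scaling, the gradient underflows
    grads, scale = scaled_step([1e-8], 2.0 ** 16)
    print(grads, scale)  # a value close to 1e-8 survives after unscaling
    print(scaled_step([1.0], 2.0 ** 16))  # (None, 32768.0): overflow, step skipped
```

Real dynamic loss scalers also raise the scale again after a run of overflow-free steps; that growth rule is omitted here to keep the sketch short.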

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Lets web applications use its machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Steers AI-generated output toward a desired form, making human-computer interaction more intuitive.
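Assuming "guided language output" refers to constraining generation to a required format (llama.cpp, for example, can restrict sampling with a grammar), the core mechanism can be sketched as masking: at each step, tokens the constraint forbids are excluded before the next token is chosen. The toy model, the `constrained_greedy` and `allowed_next` functions, and the yes/no format below are all hypothetical; ggml's own implementation is not shown here.

```python
def constrained_greedy(score_fn, allowed_next, max_len=10):
    """Greedy decoding where a constraint masks out forbidden tokens.

    score_fn(prefix) -> dict of token -> score (stands in for the model)
    allowed_next(prefix) -> set of tokens permitted next; empty means done
    """
    prefix = []
    for _ in range(max_len):
        allowed = allowed_next(prefix)
        if not allowed:
            break
        scores = score_fn(prefix)
        # Only permitted tokens compete; sorting makes ties deterministic.
        best = max(sorted(allowed), key=lambda t: scores.get(t, float("-inf")))
        prefix.append(best)
    return prefix


# Hypothetical format: the answer must be exactly "yes" or "no", then "<end>".
SEQUENCES = [("yes", "<end>"), ("no", "<end>")]


def allowed_next(prefix):
    """Next tokens that keep `prefix` on a path to some permitted sequence."""
    n = len(prefix)
    return {s[n] for s in SEQUENCES if len(s) > n and list(s[:n]) == prefix}


def toy_model(prefix):
    """Fixed scores; left unconstrained, the model would always pick "maybe"."""
    return {"maybe": 3.0, "no": 2.0, "yes": 1.0, "<end>": 0.5}


if __name__ == "__main__":
    print(constrained_greedy(toy_model, allowed_next))  # ['no', '<end>']
```

Unconstrained, the toy model would emit "maybe"; the mask forces one of the permitted answers instead, which is the practical benefit of guided output for applications that parse model responses.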

ELECTRA Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

ELECTRA Pricing Type

- Freemium

ggml.ai Pricing Type

- Freemium