Last updated 10-23-2025

ELECTRA

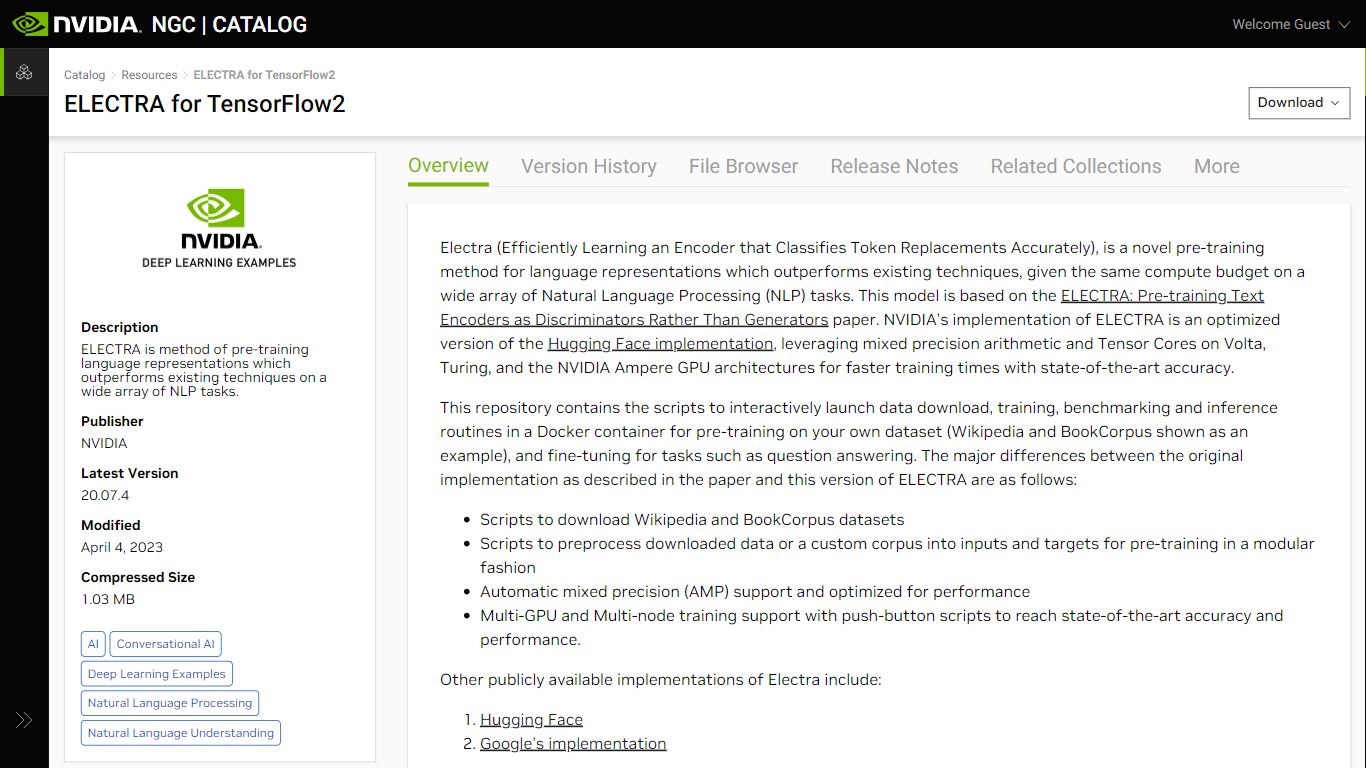

ELECTRA for TensorFlow2, available on NVIDIA NGC, is an implementation of a pre-training method for language representations in Natural Language Processing (NLP). The name stands for Efficiently Learning an Encoder that Classifies Token Replacements Accurately, and the method is reported to outperform existing pre-training techniques at the same compute budget across a wide range of NLP tasks. The model is based on the ELECTRA research paper, and NVIDIA's version adds optimizations such as mixed precision arithmetic and Tensor Core utilization on Volta, Turing, and NVIDIA Ampere GPU architectures. These optimizations shorten training time while aiming to preserve state-of-the-art accuracy.

Architecturally, ELECTRA differs from conventional models like BERT by using a generator-discriminator framework, an approach inspired by generative adversarial networks (GANs). A generator proposes replacements for some of the input tokens, and a discriminator learns to tell, for every token, whether it is original or replaced. The implementation offers scripts for data download, preprocessing, training, benchmarking, and inference, making it easier for researchers to work with custom datasets and fine-tune on tasks including question answering.
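To make the replaced-token-detection idea concrete, here is a minimal sketch of how training examples for the discriminator could be built. It is not NVIDIA's code: the function name `corrupt_and_label`, the toy vocabulary, and the random "generator" are all assumptions for illustration. In the real model the generator is a small masked language model rather than a random draw.

```python
import random


def corrupt_and_label(tokens, vocab, mask_prob=0.15, seed=0):
    """Build a toy replaced-token-detection example.

    Returns (corrupted_tokens, labels), where labels[i] is 1 if the
    token at position i was replaced and 0 if it is the original.
    A random draw from `vocab` stands in for the generator network
    (an assumption made to keep the sketch self-contained).
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            proposal = rng.choice(vocab)  # stand-in for a generator sample
            corrupted.append(proposal)
            # If the proposal happens to equal the original token,
            # the position still counts as "original".
            labels.append(int(proposal != tok))
        else:
            corrupted.append(tok)
            labels.append(0)
    return corrupted, labels


tokens = "the chef cooked the meal".split()
vocab = ["the", "chef", "ate", "cooked", "meal", "a"]
corrupted, labels = corrupt_and_label(tokens, vocab, mask_prob=0.3, seed=1)
```

The discriminator then receives `corrupted` as input and is trained to predict `labels`, so every position contributes to the loss rather than only the masked ones.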

Mixed Precision Support: Enhanced training speed using mixed precision arithmetic on compatible NVIDIA GPU architectures.

Multi-GPU and Multi-Node Training: Supports distributed training across multiple GPUs and nodes, facilitating faster model development.

Pre-training and Fine-tuning Scripts: Includes scripts to download and preprocess datasets, enabling easy setup for pre-training and fine-tuning processes.

Advanced Model Architecture: Integrates a generator-discriminator scheme for more effective learning of language representations.

Optimized Performance: Leverages optimizations for the Tensor Cores and Automatic Mixed Precision (AMP) for accelerated model training.

What is ELECTRA in the context of NLP?

ELECTRA is a pre-training method for language representations that uses a generator-discriminator framework. The discriminator learns to distinguish original tokens from replaced ones in each input sequence, which improves accuracy on downstream NLP tasks.

Why is NVIDIA's version of ELECTRA beneficial for training?

NVIDIA's optimized version of ELECTRA is specially designed to operate on Volta, Turing, and NVIDIA Ampere GPU architectures, utilizing their mixed precision and Tensor Core capabilities for accelerated training.

How do you enable Automatic Mixed Precision in ELECTRA's implementation?

To enable AMP, add the --amp flag to the training script you are running. This activates TensorFlow's Automatic Mixed Precision feature, which runs computation in half precision where it is safe to do so and keeps weights in full precision to preserve critical information.
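As a rough illustration of what a flag like this typically does, the sketch below parses `--amp` and maps it to a precision policy name. The parser and the helper `precision_policy` are hypothetical and are not taken from NVIDIA's scripts. The strings "mixed_float16" and "float32" are standard Keras policy names, but how the actual ELECTRA scripts wire the flag internally is not described here.

```python
import argparse


def build_parser():
    # Hypothetical entry point; only the --amp flag comes from the text above.
    parser = argparse.ArgumentParser(description="Toy training entry point")
    parser.add_argument("--amp", action="store_true",
                        help="Enable Automatic Mixed Precision")
    return parser


def precision_policy(use_amp):
    # Half-precision compute with full-precision variables when AMP is on,
    # plain full precision otherwise.
    return "mixed_float16" if use_amp else "float32"


args = build_parser().parse_args(["--amp"])
policy = precision_policy(args.amp)

# In a TensorFlow 2.4+ script, one way to apply the policy would be:
#   tf.keras.mixed_precision.set_global_policy(policy)
```

Omitting the flag leaves `args.amp` as `False`, so the same script falls back to full precision.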

What is mixed precision training?

Mixed precision training combines different numerical precisions within a single training run: FP16 for the bulk of the computation, where speed matters most, and FP32 for the parts that are sensitive to rounding, to avoid information loss. The result is faster training without giving up accuracy.
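The short sketch below shows why the FP32 parts matter, using only Python's standard library to round values to half precision. It is an illustration, not the library's implementation: the helper `to_fp16` and the chosen numbers are assumptions, Python floats stand in for FP32, and loss scaling is a standard companion technique that the text above does not mention.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


# 1) A small weight update vanishes if the weight itself is stored in FP16,
#    because FP16 cannot represent values between 1.0 and about 1.001.
weight, update = 1.0, 1e-4
fp16_weight = to_fp16(to_fp16(weight) + to_fp16(update))  # update is lost
master_weight = weight + update                           # update survives

# 2) A tiny gradient underflows to zero in FP16 ...
tiny_grad = 1e-8
unscaled = to_fp16(tiny_grad)

# ... but survives if the loss (and hence the gradient) is scaled up before
# the FP16 step and scaled back down in higher precision afterwards.
loss_scale = 1024.0
recovered = to_fp16(tiny_grad * loss_scale) / loss_scale
```

Here `fp16_weight` stays at exactly 1.0 while `master_weight` moves, and `unscaled` is zero while `recovered` is close to the original gradient. This is the motivation for keeping a full-precision copy of the weights.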

What support comes with NVIDIA's ELECTRA for TensorFlow2?

Scripts for data download and preprocessing are included, as well as support for multi-GPU and multi-node training, and utilities for pre-training and fine-tuning using a Docker container, among others.