XLM vs ggml.ai

In the contest of XLM vs ggml.ai, which AI Large Language Model (LLM) tool is the champion? We evaluate pricing, alternatives, upvotes, features, reviews, and more.

If you had to choose between XLM and ggml.ai, which one would you go for?

When we examine XLM and ggml.ai, both of which are AI-enabled large language model (LLM) tools, what unique characteristics do we discover? Neither tool takes the lead, as both have the same upvote count. Since aitools.fyi users decide the winner, the ball is in your court: cast your vote and help us break the tie.

Not your cup of tea? Upvote your preferred tool and stir things up!

XLM

What is XLM?

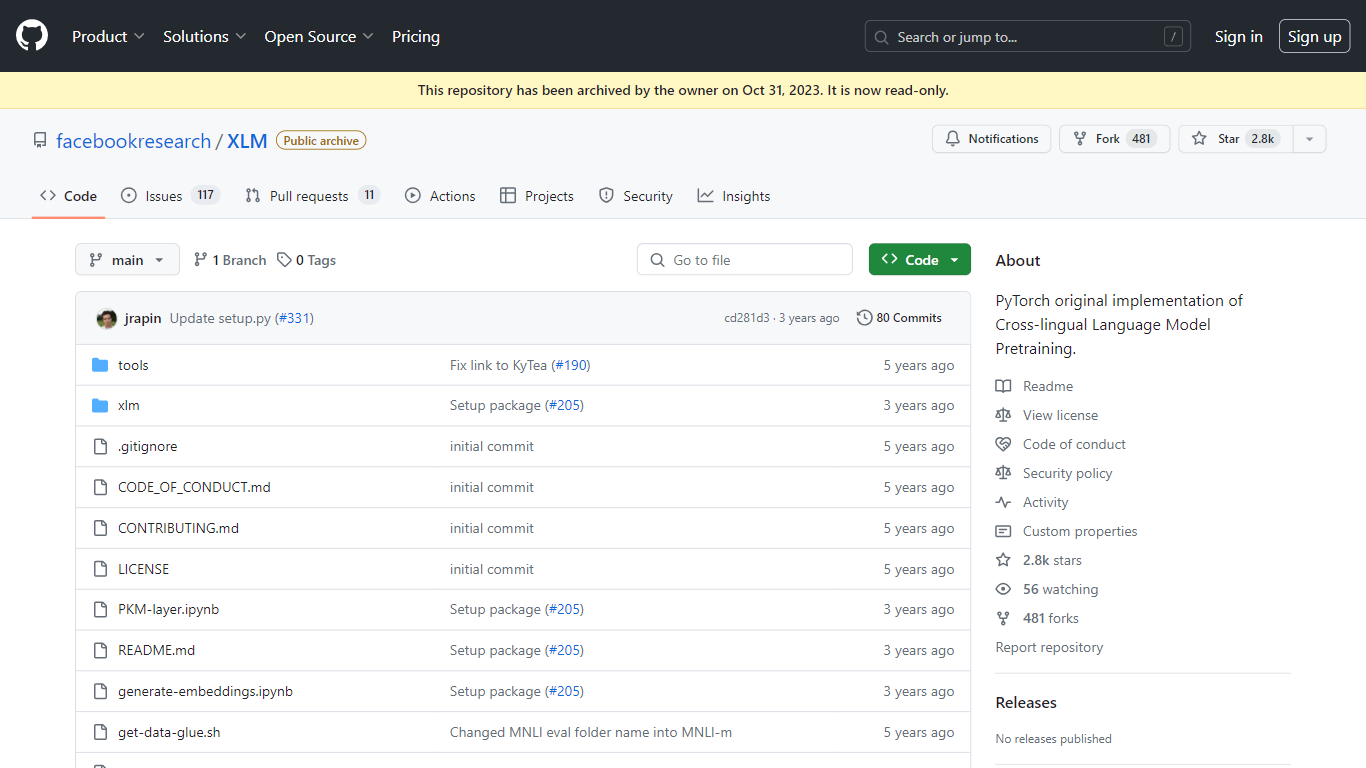

Discover the power of Cross-lingual Language Modeling with XLM, the original PyTorch implementation designed for pretraining language models that operate across multiple languages. XLM is a repository that provides the foundation for creating multilingual NLP systems using techniques such as Masked Language Modeling (MLM) and Translation Language Modeling (TLM). These methods facilitate the learning of language representations that generalize well to various linguistic tasks, enabling better understanding and processing of text in a variety of languages.
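To make the two objectives named above more concrete, here is a minimal Python sketch of how MLM-style masking and a TLM-style input could be built. It is an illustration, not the repository's code: the names `mask_tokens`, `tlm_input`, `MASK`, and the `</s>` separator are hypothetical, and the 15% masking rate with an 80/10/10 replacement split is the commonly described BERT-style recipe, assumed here. The real implementation also handles language embeddings and position resets, which this sketch omits.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, chosen for illustration


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Corrupt a token list for an MLM-style objective.

    Returns (corrupted, labels). labels[i] holds the original token at
    positions the model should predict, and None everywhere else.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            r = rng.random()
            if r < 0.8:
                corrupted.append(MASK)               # replace with the mask symbol
            elif r < 0.9:
                corrupted.append(rng.choice(vocab))  # replace with a random token
            else:
                corrupted.append(tok)                # keep the original token
        else:
            labels.append(None)
            corrupted.append(tok)
    return corrupted, labels


def tlm_input(src_tokens, tgt_tokens, sep="</s>"):
    """Concatenate a sentence and its translation into one TLM-style stream.

    Masking the combined stream lets the model use context from either
    language to recover a masked token.
    """
    return src_tokens + [sep] + tgt_tokens


if __name__ == "__main__":
    en = ["the", "cat", "sleeps"]
    fr = ["le", "chat", "dort"]
    stream = tlm_input(en, fr)
    corrupted, labels = mask_tokens(stream, vocab=en + fr, mask_prob=0.3, seed=1)
    print(corrupted)
    print(labels)
```

Applying `mask_tokens` to the output of `tlm_input` is what distinguishes the translation objective in this sketch: a masked English word can be predicted from its French counterpart, and vice versa.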

With XLM, researchers and developers can apply transfer learning in Natural Language Processing (NLP) to languages with limited training data by leveraging existing data from high-resource languages. The repository was archived on October 31, 2023, and is now read-only, so it serves mainly as a resource for historical reference and study in the field of cross-lingual language model pretraining.

ggml.ai

What is ggml.ai?

ggml.ai is at the forefront of AI technology, bringing powerful machine learning capabilities directly to the edge with its innovative tensor library. Built for large model support and high performance on common hardware platforms, ggml.ai enables developers to implement advanced AI algorithms without the need for specialized equipment. The platform, written in the efficient C programming language, offers 16-bit float and integer quantization support, along with automatic differentiation and various built-in optimization algorithms like ADAM and L-BFGS. It boasts optimized performance for Apple Silicon and leverages AVX/AVX2 intrinsics on x86 architectures. Web-based applications can also exploit its capabilities via WebAssembly and WASM SIMD support. With its zero runtime memory allocations and absence of third-party dependencies, ggml.ai presents a minimal and efficient solution for on-device inference.
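As a rough illustration of what integer quantization means for on-device inference, the Python sketch below stores each block of weights as small integers plus one floating-point scale. It shows the general technique only: the function names, the block size of 32, and the symmetric rounding scheme are assumptions made for this example and do not reproduce ggml's actual C implementation or file layout.

```python
def quantize_block(values, bits=8):
    """Symmetric quantization of one non-empty block: returns (scale, ints)."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8 bits, 7 for 4 bits
    amax = max(abs(v) for v in values)
    scale = amax / qmax if amax > 0 else 1.0    # avoid dividing by zero
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, ints


def dequantize_block(scale, ints):
    """Recover approximate floats from one quantized block."""
    return [scale * q for q in ints]


def quantize(values, block_size=32, bits=8):
    """Split values into blocks and quantize each block independently."""
    return [
        quantize_block(values[i:i + block_size], bits)
        for i in range(0, len(values), block_size)
    ]


def dequantize(blocks):
    """Concatenate the dequantized blocks back into one flat list."""
    out = []
    for scale, ints in blocks:
        out.extend(dequantize_block(scale, ints))
    return out


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.75, -0.125]
    blocks = quantize(weights, block_size=4, bits=8)
    restored = dequantize(blocks)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("max reconstruction error:", worst)
```

Using one scale per block rather than one per tensor keeps the rounding error tied to the largest value in each small group, which is the usual motivation for block-wise schemes. Fewer bits shrink the model further at the cost of larger error.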

Projects like whisper.cpp and llama.cpp demonstrate the high-performance inference capabilities of ggml.ai, with whisper.cpp providing speech-to-text solutions and llama.cpp focusing on efficient inference of Meta's LLaMA large language model. Moreover, the company welcomes contributions to its codebase and supports an open-core development model through the MIT license. As ggml.ai continues to expand, it seeks talented full-time developers with a shared vision for on-device inference to join its team.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

XLM Top Features

Cross-lingual Model Pretraining: Implementation of pretraining methods such as Masked Language Modeling (MLM) and Translation Language Modeling (TLM) for multilingual NLP.

Machine Translation: Tools and scripts to train Supervised and Unsupervised Machine Translation models.

Fine-tuning Capabilities: Support for fine-tuning on GLUE and XNLI benchmarks for enhanced model performance.

Multi-GPU and Multi-node Training: Capable of scaling up the training process across multiple GPUs and nodes for efficient computation.

Additional Resources: Includes utilities for language pretraining, such as fastBPE for BPE codes and the Moses tokenizer.

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Lets web applications use machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Enhances human-computer interaction with more intuitive AI-generated responses.

XLM Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

XLM Pricing Type

- Freemium

ggml.ai Pricing Type

- Freemium