Last updated 02-12-2024

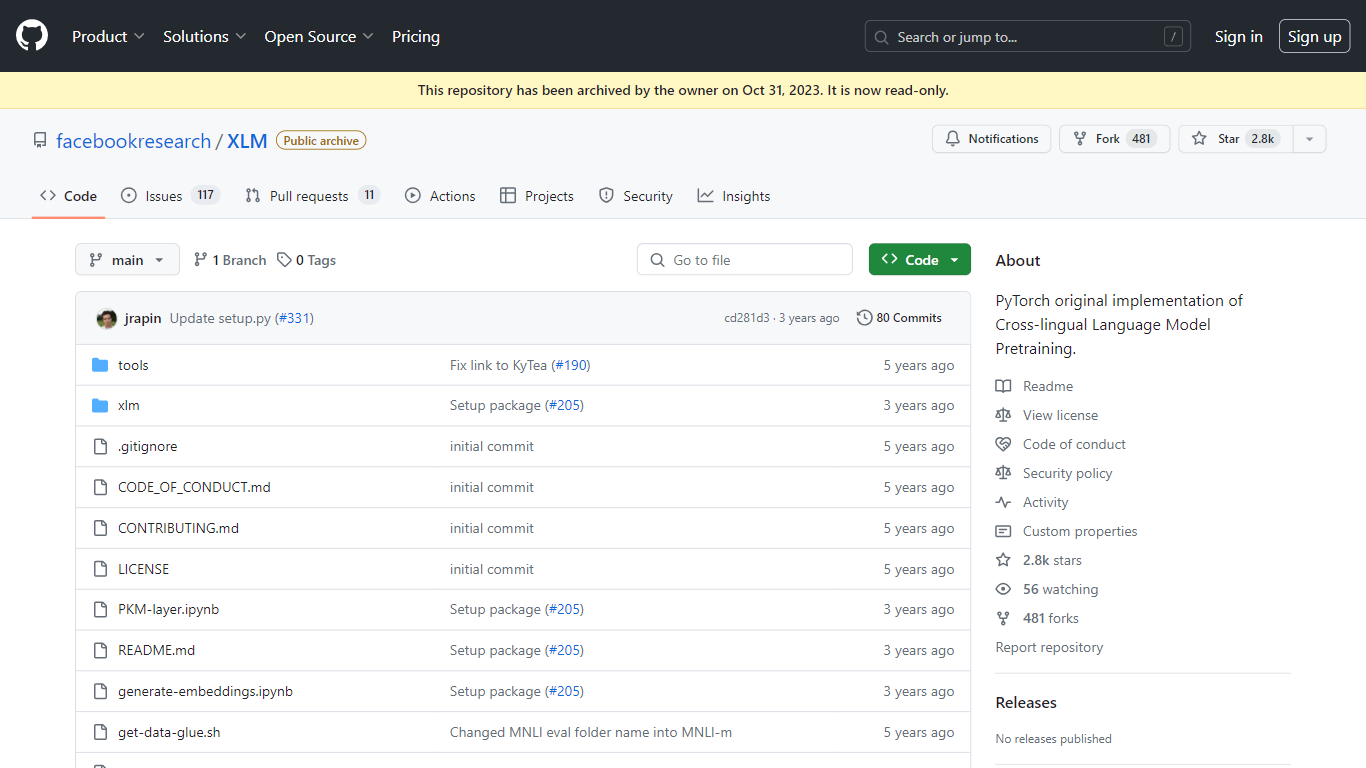

XLM

Discover the power of cross-lingual language modeling with XLM, the original PyTorch implementation of Cross-lingual Language Model Pretraining. The repository provides the foundation for building multilingual NLP systems with two pretraining objectives: Masked Language Modeling (MLM), which trains a model to predict tokens hidden from a monolingual text stream, and Translation Language Modeling (TLM), which applies the same objective to pairs of parallel sentences so the model can draw on context from both languages. These objectives produce language representations that transfer across linguistic tasks and across languages.
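To make the MLM objective concrete, here is a minimal sketch in plain Python rather than the repository's own code. It assumes the BERT-style corruption scheme (select about 15% of tokens; of those, replace 80% with a mask symbol, 10% with a random token, and leave 10% unchanged). The function name `mask_tokens`, the `[MASK]` symbol, and the toy vocabulary are illustrative assumptions.

```python
import random

MASK = "[MASK]"  # assumed mask symbol; a real vocabulary may use a different one


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Corrupt a token sequence for MLM training.

    Returns (corrupted, targets). targets[i] is the original token at each
    position the model must predict, and None everywhere else.
    """
    rng = rng or random.Random()
    corrupted, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            targets.append(tok)  # the model is trained to recover this token
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: mask symbol
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: random token
            else:
                corrupted.append(tok)                # 10%: left unchanged
        else:
            corrupted.append(tok)
            targets.append(None)  # position is not part of the loss
    return corrupted, targets


sentence = "the cat sat on the mat".split()
vocab = sorted(set(sentence))
corrupted, targets = mask_tokens(sentence, vocab, rng=random.Random(0))
print(corrupted)
print(targets)
```

Only positions with a non-`None` target contribute to the training loss; the rest of the sequence serves as context.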

With XLM, researchers and developers can apply transfer learning to languages with limited training data by leveraging existing data from high-resource languages. The repository was archived on October 31, 2023, and is now read-only, so it serves mainly as a reference for studying cross-lingual language model pretraining.

Cross-lingual Model Pretraining: Implementations of the Masked Language Modeling and Translation Language Modeling pretraining objectives for multilingual NLP.

Machine Translation: Tools and scripts to train Supervised and Unsupervised Machine Translation models.

Fine-tuning Capabilities: Support for fine-tuning pretrained models on the GLUE and XNLI benchmarks.

Multi-GPU and Multi-node Training: Capable of scaling up the training process across multiple GPUs and nodes for efficient computation.

Additional Resources: Includes preprocessing utilities such as fastBPE, for learning and applying BPE codes, and the Moses tokenizer.
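For readers unfamiliar with BPE codes, the sketch below shows the general idea of applying a ranked list of merges to split a word into subword units. It is a toy illustration in plain Python, not fastBPE's implementation or interface: the function `apply_bpe` and the three-entry merge list are hypothetical, and the end-of-word marker used by real BPE tools is omitted for brevity.

```python
def apply_bpe(word, merges):
    """Split a word into subword units by applying ranked merges.

    merges is an ordered list of symbol pairs; earlier pairs have priority.
    """
    ranks = {pair: i for i, pair in enumerate(merges)}
    symbols = list(word)  # start from individual characters
    while len(symbols) > 1:
        pairs = [(symbols[i], symbols[i + 1]) for i in range(len(symbols) - 1)]
        # Pick the adjacent pair with the best (lowest) merge rank.
        best = min(pairs, key=lambda p: ranks.get(p, float("inf")))
        if best not in ranks:
            break  # no learned merge applies any more
        merged, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


# Hypothetical merge list, as if learned from a tiny corpus.
merges = [("l", "o"), ("lo", "w"), ("e", "r")]
print(apply_bpe("lower", merges))  # ['low', 'er']
print(apply_bpe("slow", merges))   # ['s', 'low']
```

Because one set of BPE codes can be learned over text from several languages, related words in different languages can end up sharing subword units, which is part of what makes a joint multilingual vocabulary practical.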

1) What is the main purpose of the XLM repository?

The main purpose of the XLM repository is to provide the original PyTorch implementation of Cross-lingual Language Model Pretraining, which trains language models to understand and process text in multiple languages.

2) Can I still use the XLM repository for my projects?

Yes, as a reference. The repository has been archived and read-only since October 31, 2023, so you can still read and study the code, but you cannot push changes or contribute to it.

3) What techniques are included in XLM for language model pretraining?

XLM covers Monolingual Language Model Pretraining (BERT) and Cross-lingual Language Model Pretraining (XLM), along with applications to Supervised and Unsupervised Machine Translation.
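The cross-lingual objective, Translation Language Modeling (TLM), builds each training example from a pair of parallel sentences: the two sentences are concatenated, position indices restart at the beginning of the target sentence, and every token carries a language label. The sketch below shows that input construction in plain Python. The function `build_tlm_input` and the use of `</s>` as the delimiter on both sides of each sentence are assumptions made for illustration, not the repository's interface.

```python
def build_tlm_input(src_tokens, tgt_tokens, src_lang, tgt_lang, sep="</s>"):
    """Build the token, position, and language sequences for one TLM example."""
    src = [sep] + list(src_tokens) + [sep]
    tgt = [sep] + list(tgt_tokens) + [sep]
    tokens = src + tgt
    # Positions restart at 0 for the target sentence.
    positions = list(range(len(src))) + list(range(len(tgt)))
    # One language label per token, later mapped to a language embedding.
    langs = [src_lang] * len(src) + [tgt_lang] * len(tgt)
    return tokens, positions, langs


tokens, positions, langs = build_tlm_input(
    ["the", "cat"], ["le", "chat"], "en", "fr"
)
print(tokens)
print(positions)
print(langs)
```

Masking is then applied across the whole concatenated sequence, so a model predicting a masked English word can attend to the French translation, and vice versa.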

4) Does XLM support multi-GPU training?

Yes, XLM supports multi-GPU and multi-node training, which lets you scale training across more hardware for larger models.

5) What benchmarks can XLM's pre-trained models be fine-tuned on?

XLM's pre-trained models can be fine-tuned on benchmarks like General Language Understanding Evaluation (GLUE) and Cross-lingual Natural Language Inference (XNLI).