Last updated 02-10-2024

PaLM-E

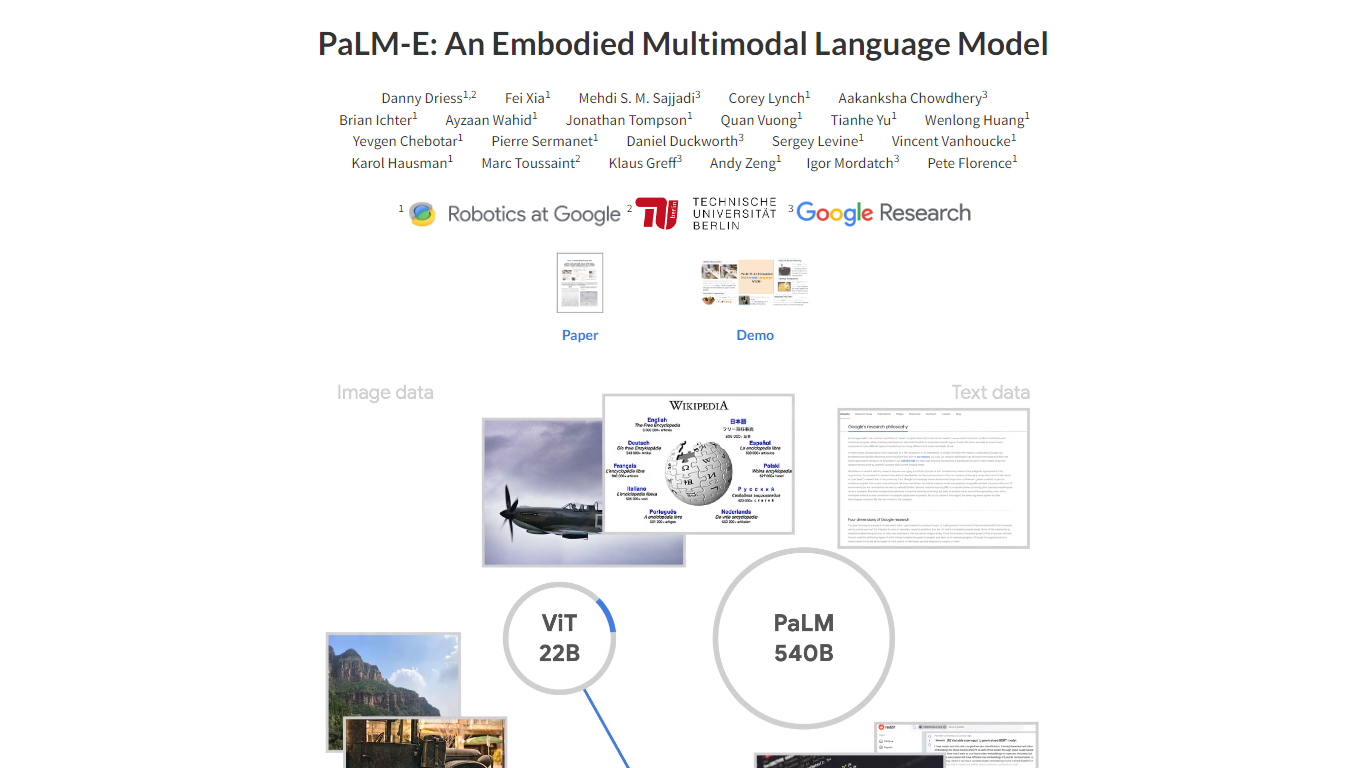

The PaLM-E project introduces an embodied multimodal language model that connects real-world sensor data to a large language model for advanced robotic tasks. PaLM-E, short for "PaLM-Embodied" (PaLM being Google's Pathways Language Model), feeds continuous sensory information, such as images and state estimates, into the language model alongside text, so that a single model can reason about the physical world and plan actions in it.
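In PaLM-E, continuous observations are encoded into vectors that live in the same embedding space as the language model's word tokens, then interleaved with text to form "multimodal sentences" that the model reads like ordinary input. The toy Python sketch below illustrates that idea under stated assumptions: a tiny hypothetical vocabulary and embedding table (`EMBED`), a hypothetical `<obs>` placeholder token, and a single hand-set affine map (`project_state`) standing in for a learned encoder. PaLM-E itself uses learned neural encoders (for example, a ViT for images), so this is an illustration of the concept, not the model's implementation.

```python
# Toy sketch of a PaLM-E-style "multimodal sentence" (illustrative only).
# Hypothetical pieces: the EMBED table, the OBS_TOKEN placeholder, and the
# single affine map standing in for a learned observation encoder.
from typing import Dict, List, Sequence

Vector = List[float]

EMBED_DIM = 4        # toy embedding size; real language models use thousands
OBS_TOKEN = "<obs>"  # hypothetical placeholder marking where an observation goes

# Hypothetical word-embedding table standing in for the pre-trained LM's own.
EMBED: Dict[str, Vector] = {
    "given": [0.3, 0.0, 0.0, 0.1],
    "what": [0.1, 0.0, 0.0, 0.0],
    "is": [0.0, 0.1, 0.0, 0.0],
    "in": [0.0, 0.0, 0.1, 0.0],
    "the": [0.0, 0.0, 0.0, 0.1],
    "gripper": [0.2, 0.2, 0.0, 0.0],
    "?": [0.0, 0.0, 0.2, 0.2],
}


def project_state(state: Sequence[float],
                  W: Sequence[Sequence[float]],
                  b: Sequence[float]) -> Vector:
    """Affine map W @ state + b; W has one row per embedding dimension."""
    return [sum(w * s for w, s in zip(row, state)) + bias
            for row, bias in zip(W, b)]


def build_multimodal_sentence(tokens: Sequence[str],
                              observations: Sequence[Vector]) -> List[Vector]:
    """Embed text tokens via EMBED and replace each OBS_TOKEN with the next
    projected observation, giving one vector sequence for the language model."""
    obs_iter = iter(observations)
    sequence: List[Vector] = []
    for tok in tokens:
        if tok == OBS_TOKEN:
            vec = next(obs_iter, None)
            if vec is None:
                raise ValueError("more placeholders than observations")
            if len(vec) != EMBED_DIM:
                raise ValueError("observation vector has the wrong dimension")
            sequence.append(vec)
        else:
            sequence.append(EMBED[tok])
    return sequence


if __name__ == "__main__":
    # Hypothetical 3-D state estimate, e.g. an object's (x, y, z) position.
    state = [0.5, -0.2, 1.0]
    W = [[1.0, 0.0, 0.0],
         [0.0, 1.0, 0.0],
         [0.0, 0.0, 1.0],
         [1.0, 1.0, 1.0]]
    b = [0.0] * EMBED_DIM
    obs = project_state(state, W, b)
    tokens = ["given", OBS_TOKEN, "what", "is", "in", "the", "gripper", "?"]
    seq = build_multimodal_sentence(tokens, [obs])
    print(len(seq), seq[1])
```

Because the projected observation has the same dimension as a word embedding, the language model can process the combined sequence without architectural changes. In PaLM-E the encoder weights are learned end-to-end rather than fixed by hand as they are in this sketch.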

Designed for tasks such as robotic manipulation planning, visual question answering, and captioning, PaLM-E shows the potential of large multimodal language models trained on varied tasks across domains. Its largest version, PaLM-E-562B, has 562 billion parameters; in addition to handling robotic tasks, it achieves state-of-the-art performance on the OK-VQA visual question answering benchmark while retaining strong general language skills.

End-to-End Training: Encodes sensor observations and interleaves them with text in "multimodal sentences," training these encodings end-to-end in conjunction with a pre-trained large language model.

Embodied Multimodal Capabilities: Addresses various real-world tasks, combining vision, language, and state estimation.

Variety of Observation Modalities: Works with different types of sensor input, adapting to multiple robotic embodiments.

Positive Transfer Learning: Benefits from training across diverse language and visual-language datasets.

Scalability and Generalization: The largest model, PaLM-E-562B, is a visual-language generalist, and PaLM-E retains more of its general language capabilities as model scale increases.

1) What is the goal of the PaLM-E project?

The PaLM-E project aims to enable robots to understand and perform complex tasks by integrating real-world continuous sensor modalities with language models.

2) What has the PaLM-E-562B model achieved?

The PaLM-E-562B model, with 562 billion parameters, demonstrates state-of-the-art performance on visual-language tasks like OK-VQA while retaining versatile language abilities.

3) What does PaLM-E stand for?

PaLM-E stands for PaLM-Embodied, where PaLM (Pathways Language Model) is the pre-trained large language model on which it is built.

4) Does PaLM-E benefit from transfer learning?

Yes. PaLM-E exhibits positive transfer: it benefits from joint training across diverse internet-scale language, vision, and visual-language domains.

5) What tasks has PaLM-E been trained to perform?

Robotic manipulation planning, visual question answering, and captioning are some of the tasks that PaLM-E has been trained for.