Last updated 10-23-2025

RLAMA

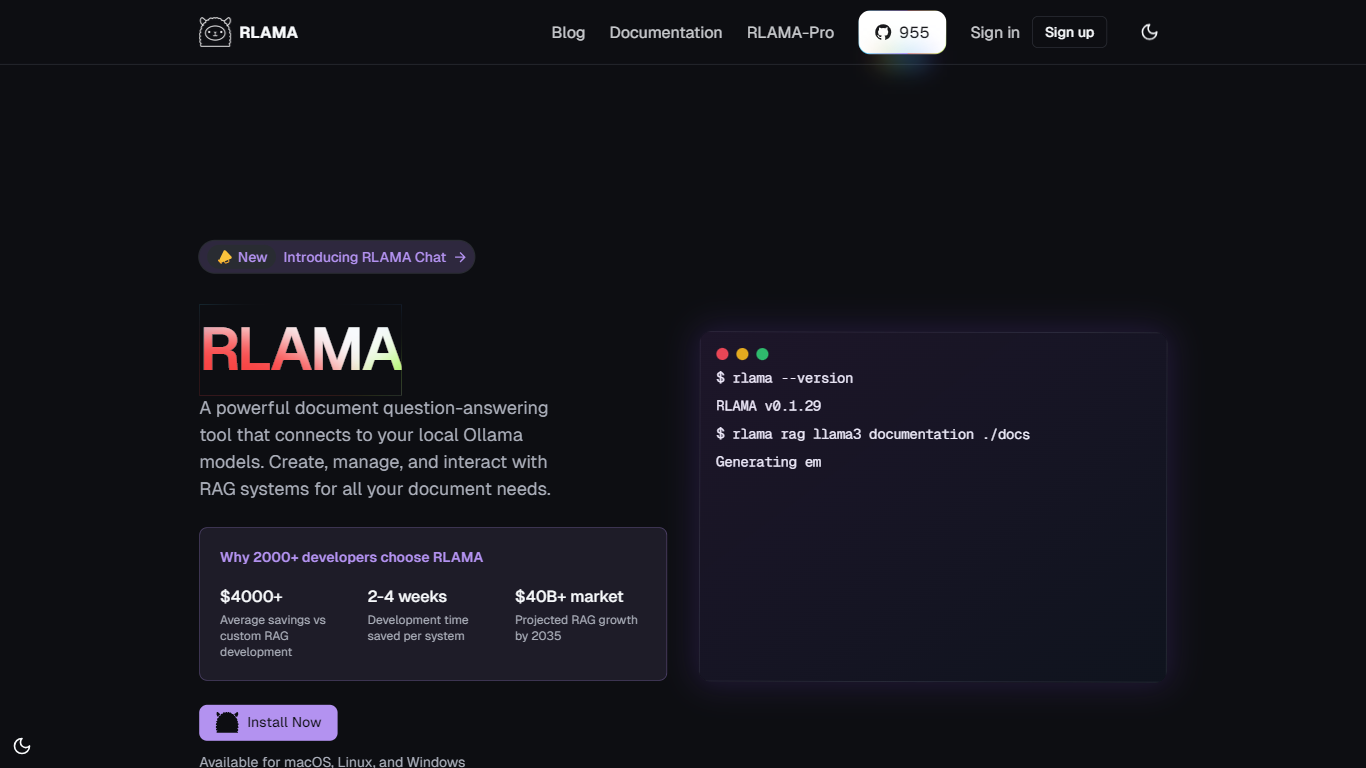

RLAMA is a document question-answering tool that connects to local Ollama models. It lets users create, manage, and query Retrieval-Augmented Generation (RAG) systems built around their own documentation. Beyond basic RAG, it offers features such as intelligent chunking and a visual RAG builder, so documents can be brought into existing workflows with little effort. This makes it a good fit for developers and organizations that want to improve how they manage and search their documents.
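To make the retrieve-then-generate idea concrete, here is a minimal sketch of a RAG query against a local Ollama server. It is not RLAMA's implementation: the helper names are hypothetical, the keyword-overlap scoring is a deliberately simple stand-in for embedding-based retrieval, and the model name `llama3` is an assumption (use whichever model is pulled locally). The endpoint shown is Ollama's default local REST address.

```python
import json
import urllib.request

# Ollama's default local REST endpoint for text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def score(question: str, chunk: str) -> int:
    """Count words shared by question and chunk (stand-in for embedding similarity)."""
    return len(set(question.lower().split()) & set(chunk.lower().split()))


def retrieve(question: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks that share the most words with the question."""
    return sorted(chunks, key=lambda c: score(question, c), reverse=True)[:top_k]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def ask_ollama(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to a locally running Ollama server (model name is an assumption)."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With Ollama running locally, a call would look like `ask_ollama(build_prompt(question, retrieve(question, chunks)))`. Everything stays on the local machine, which is the property the offline-first design relies on.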

RLAMA is aimed at developers, researchers, and organizations that need efficient document handling and question answering. More than 2,000 developers have already chosen it. Because RLAMA is open source, users can customize and adapt it to their own requirements without the high cost of custom RAG development.

A key differentiator is RLAMA's offline-first approach: all processing happens locally, and no data is sent to external servers. This protects privacy and can reduce latency, since no network round trips are involved. RLAMA supports multiple document formats, including PDF, Markdown, and plain-text files, which makes it suitable for a range of use cases. Its intelligent chunking feature segments documents automatically so that retrieval returns the most relevant context.
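As an illustration of what chunking means in a RAG pipeline, the sketch below splits a document into overlapping word windows. It is a generic fixed-size strategy under assumed defaults, not RLAMA's own chunking algorithm, which this page does not describe; the function name and parameters are hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 100, overlap: int = 20) -> list[str]:
    """Split text into chunks of up to chunk_size words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk. Smaller chunks give more precise matches, while larger ones carry more surrounding context.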

RLAMA is available for macOS, Linux, and Windows. It also includes a visual RAG builder that lets users create RAG systems in minutes without writing code, which makes RAG creation accessible regardless of technical background. The aim is to save development time and cost when building document-based question-answering systems.

Simple Setup: Create and configure RAG systems with just a few commands, so that anyone can get started quickly.

Multiple Document Formats: Supports various formats like PDFs, Markdown, and text files, allowing users to work with their preferred document types.

Offline First: Ensures 100% local processing with no data sent to external servers, enhancing privacy and security for sensitive information.

Intelligent Chunking: Automatically segments documents for optimal context retrieval, helping users find the most relevant answers efficiently.

Visual RAG Builder: Create powerful RAG systems visually in just 2 minutes without writing any code, making it accessible to all users.

What is RLAMA?

RLAMA is a document question-answering tool that connects to local Ollama models, allowing users to create and manage Retrieval-Augmented Generation systems for their documentation.

What platforms is RLAMA available on?

RLAMA is available for macOS, Linux, and Windows, making it accessible to a wide range of users.

Can I use RLAMA offline?

Yes, RLAMA processes all data locally, ensuring that no information is sent to external servers.

What document formats does RLAMA support?

RLAMA supports multiple document formats, including PDFs, Markdown, and text files, allowing users to work with various types of documents.

Is RLAMA free to use?

Yes, RLAMA is completely free and open source, allowing users to create powerful RAG systems without any cost.

How long does it take to set up a RAG system with RLAMA?

Setting up a RAG system with RLAMA takes only a few commands and minimal configuration. With the visual RAG builder, a system can be created in about 2 minutes.

What is the visual RAG builder?

The visual RAG builder is a feature that allows users to create RAG systems without coding, making it accessible to everyone.