Last updated 02-10-2024


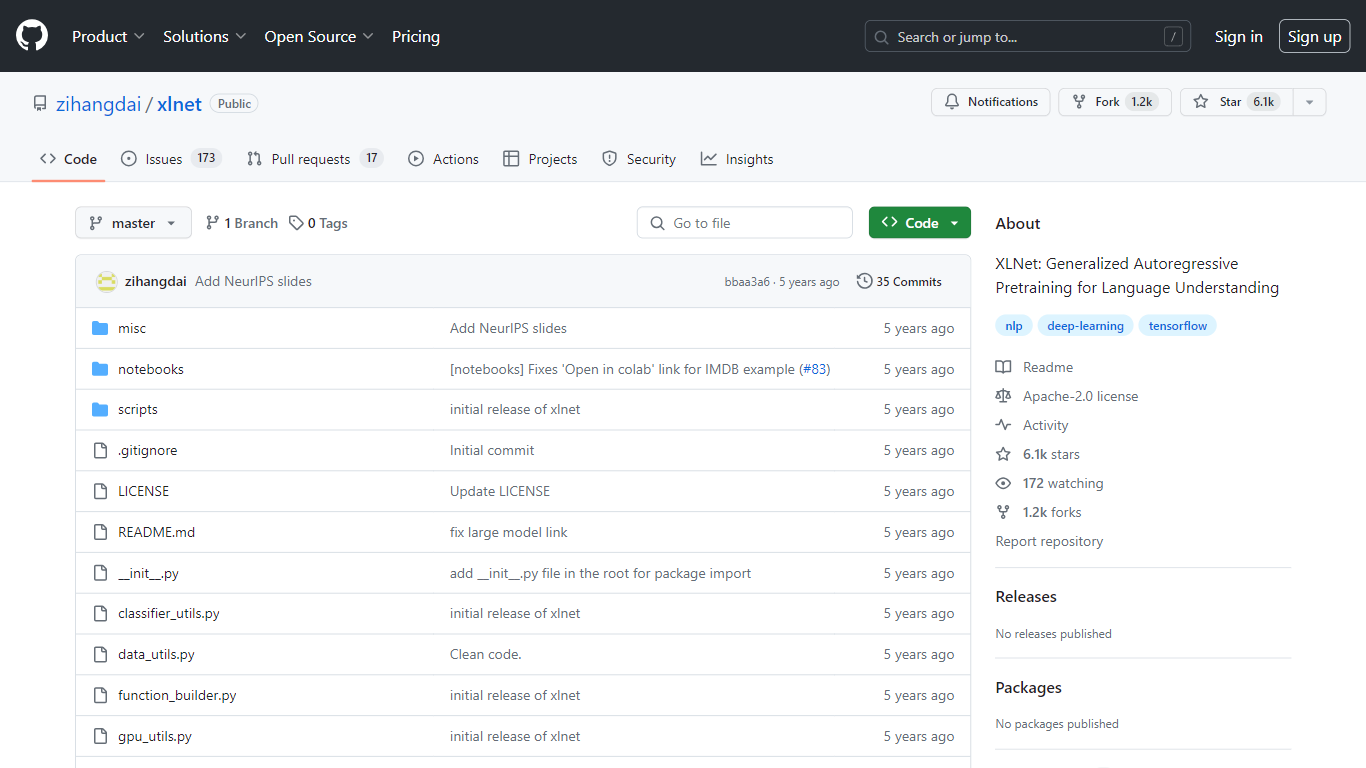

XLNet

XLNet is an unsupervised language pretraining approach developed by researchers including Zhilin Yang and Zihang Dai. It introduces a generalized autoregressive pretraining method that achieved state-of-the-art performance on a range of language understanding tasks when it was released. XLNet was designed to address limitations of BERT's masked-language-model pretraining, such as the mismatch between artificial [MASK] tokens seen in pretraining but not in fine-tuning, and the assumption that masked tokens are predicted independently of one another. It is built on the Transformer-XL architecture, which is well suited to capturing long-range dependencies in text. The official repository, hosted on GitHub under Zihang Dai's account (zihangdai), provides the XLNet code and documentation for researchers and practitioners who want to use the model or build on it.
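For reference, the permutation language modeling objective behind "generalized autoregressive pretraining" can be written as below, following the XLNet paper as we understand it. Here $\mathcal{Z}_T$ is the set of all permutations of the indices $[1, \dots, T]$, $z_t$ is the $t$-th element of a permutation $\mathbf{z}$, and $\mathbf{z}_{<t}$ denotes its first $t-1$ elements:

$$
\max_{\theta}\; \mathbb{E}_{\mathbf{z} \sim \mathcal{Z}_T}\left[\sum_{t=1}^{T} \log p_{\theta}\left(x_{z_t} \mid \mathbf{x}_{\mathbf{z}_{<t}}\right)\right]
$$

Each term is an ordinary autoregressive prediction, but because the parameters $\theta$ are shared across all factorization orders, every position learns, in expectation, to use context from both its left and its right. Only the factorization order is permuted; the tokens keep their original positions in the input.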

Generalized Autoregressive Pretraining: Learns bidirectional context by maximizing the expected log-likelihood over permutations of the factorization order, rather than by masking input tokens.

Transformer-XL Backbone: Uses Transformer-XL's segment-level recurrence and relative positional encoding to handle long-context tasks.

State-of-the-Art Results: Achieved leading performance across numerous language understanding benchmarks at the time of its release.

Versatile Application: Applicable to tasks including question answering, natural language inference, sentiment analysis, and document ranking.

Open-Source Repository: The code is publicly available on GitHub, where the community can use it and contribute improvements.
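The permutation objective does not physically shuffle the input; it is implemented with attention masks. The sketch below samples one factorization order and builds two masks in the spirit of the two-stream self-attention described in the XLNet paper: a content stream, where a position may see itself and everything earlier in the order, and a query stream, where it may see only strictly earlier positions, so it cannot peek at the token it is predicting. The function name `permutation_masks` and its parameters are hypothetical, and the sketch omits the Transformer-XL memory that the full model also attends to.

```python
import random
from typing import List, Tuple


def permutation_masks(
    seq_len: int, seed: int = 0
) -> Tuple[List[int], List[List[bool]], List[List[bool]]]:
    """Sample a factorization order and build XLNet-style attention masks.

    Hypothetical helper for illustration. mask[i][j] is True when
    position i may attend to position j. Tokens keep their original
    positions; only the masks encode the sampled order.
    """
    rng = random.Random(seed)
    order = list(range(seq_len))
    rng.shuffle(order)  # the sampled factorization order z

    # rank[p] = step at which position p appears in the factorization order
    rank = [0] * seq_len
    for step, pos in enumerate(order):
        rank[pos] = step

    # Content stream: see yourself and every position earlier in the order.
    content = [[rank[j] <= rank[i] for j in range(seq_len)] for i in range(seq_len)]
    # Query stream: see only strictly earlier positions, never your own token.
    query = [[rank[j] < rank[i] for j in range(seq_len)] for i in range(seq_len)]
    return order, content, query


if __name__ == "__main__":
    order, content, query = permutation_masks(4, seed=0)
    print("factorization order:", order)
    for pos in range(4):
        visible = [j for j in range(4) if query[pos][j]]
        print(f"position {pos} predicts its token from positions {visible}")
```

The first position in the sampled order has an empty query-stream row, and the last one sees every other position, which is how a single forward pass realizes one term of the expectation over orders.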

1) What is XLNet?

XLNet is an unsupervised language representation learning method based on a generalized permutation language modeling objective. It outperforms BERT on a wide range of benchmarks.
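One way to see the difference from BERT is to compare what each objective conditions on when predicting the same two tokens. The example below is adapted from the "New York is a city" comparison in the XLNet paper: BERT masks both "New" and "York" and predicts each from the unmasked words alone, while XLNet, under the factorization order [is, a, city, New, York], lets the prediction of "York" also condition on "New". The helper names are hypothetical, and the sketch assumes tokens are unique so they can serve as dictionary keys.

```python
from typing import Dict, List, Set


def bert_contexts(tokens: List[str], targets: Set[int]) -> Dict[str, Set[str]]:
    """Masked-LM view: every target is predicted from the unmasked tokens only."""
    visible = {tok for i, tok in enumerate(tokens) if i not in targets}
    return {tokens[t]: set(visible) for t in sorted(targets)}


def xlnet_contexts(
    tokens: List[str], order: List[int], targets: Set[int]
) -> Dict[str, Set[str]]:
    """Permutation-LM view: every target is predicted from tokens earlier in the order."""
    contexts: Dict[str, Set[str]] = {}
    for step, pos in enumerate(order):
        if pos in targets:
            contexts[tokens[pos]] = {tokens[p] for p in order[:step]}
    return contexts


tokens = ["New", "York", "is", "a", "city"]
targets = {0, 1}          # predict "New" and "York"
order = [2, 3, 4, 0, 1]   # factorization order: is, a, city, New, York

print("BERT: ", bert_contexts(tokens, targets))
print("XLNet:", xlnet_contexts(tokens, order, targets))
```

Both objectives predict "New" from {is, a, city}, but only XLNet's prediction of "York" also sees "New", so the dependency between the two target tokens is captured.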

2) What technology does XLNet use in its architecture?

XLNet employs the Transformer-XL as its underlying architecture, making it effective in tasks that involve long-range context.
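Transformer-XL handles long inputs by processing them in fixed-length segments and caching the most recent states from the previous segment as "memory" that the next segment can attend to. The toy sketch below tracks only which positions fall inside each segment's attention span; in the real model the memory holds cached hidden states per layer (with no gradient flowing into them), not raw tokens, and relative positional encoding is also required, which this sketch leaves out. The function `segment_contexts` and its `seg_len` and `mem_len` parameters are hypothetical names chosen for illustration.

```python
from typing import List, Tuple


def segment_contexts(
    tokens: List[str], seg_len: int, mem_len: int
) -> List[Tuple[List[str], List[str]]]:
    """Return (memory, segment) pairs for a long sequence.

    Hypothetical helper. Each segment attends to its cached memory plus
    itself; afterwards the last mem_len positions become the new memory.
    """
    memory: List[str] = []
    result = []
    for start in range(0, len(tokens), seg_len):
        segment = tokens[start:start + seg_len]
        result.append((list(memory), segment))
        # Keep only the most recent mem_len positions (guard: [-0:] would keep all).
        memory = (memory + segment)[-mem_len:] if mem_len > 0 else []
    return result


tokens = [f"t{i}" for i in range(10)]
for mem, seg in segment_contexts(tokens, seg_len=4, mem_len=4):
    print("memory:", mem, "| segment:", seg)
```

With 10 tokens, `seg_len=4` and `mem_len=4`, the third segment [t8, t9] attends to itself plus the cached [t4, t5, t6, t7], context that a model limited to a single fixed-length segment would lose.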

3) Where can I find more information on the technical details of XLNet?

The technical details are described in the paper 'XLNet: Generalized Autoregressive Pretraining for Language Understanding' by Zhilin Yang, Zihang Dai, et al. (NeurIPS 2019).

4) Is there a GitHub repository available for XLNet?

Yes. The repository maintained by zihangdai on GitHub includes code for pretraining and fine-tuning XLNet, along with pretrained model checkpoints, and is open to contributions.

5) How does XLNet compare with BERT in terms of performance?

At the time of its release, XLNet outperformed BERT on 20 tasks under comparable experimental settings and achieved state-of-the-art results on 18 tasks.