Last updated 10-23-2025

Chain of Thought Prompting

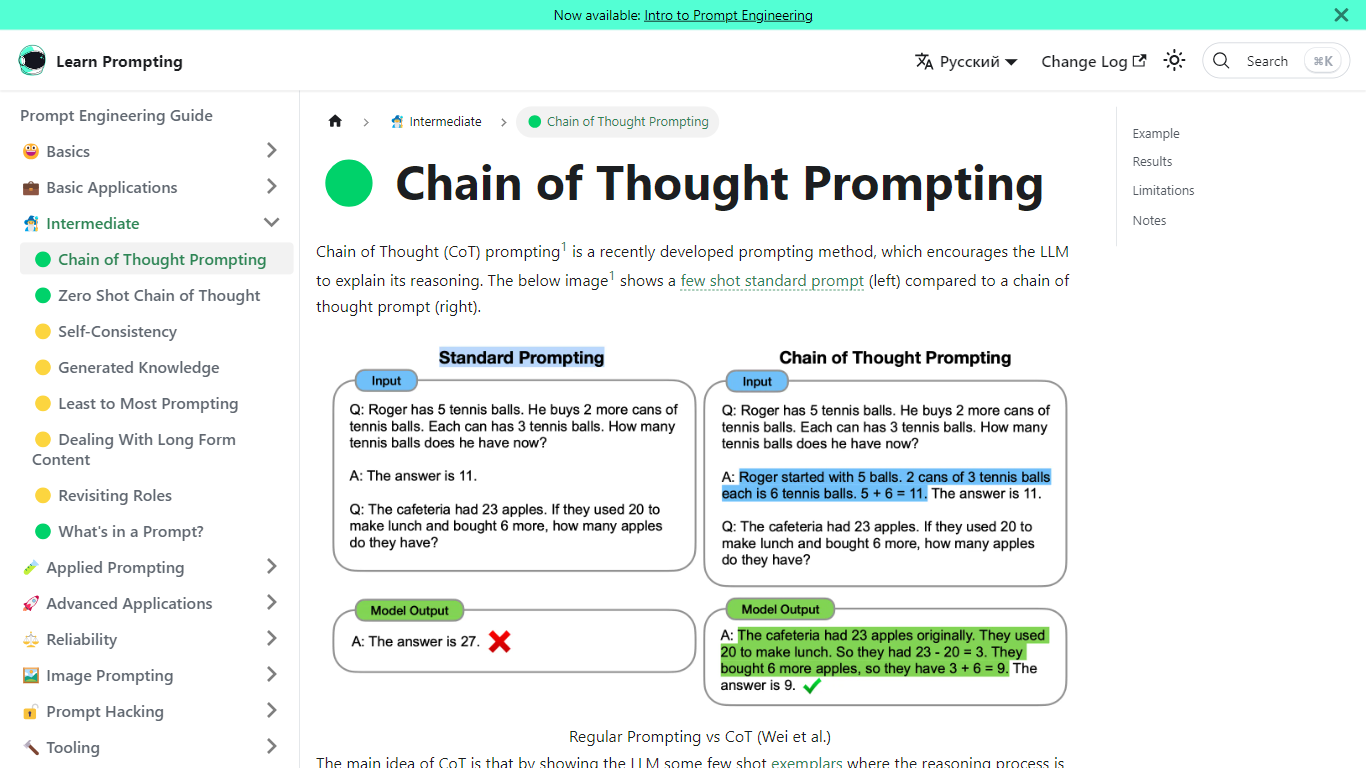

Chain of Thought (CoT) Prompting is a technique for prompting Large Language Models (LLMs) to write out the intermediate reasoning steps that lead to an answer. The method, introduced by Wei et al., can substantially improve accuracy on arithmetic, commonsense, and symbolic reasoning tasks. Through examples and comparative benchmark results, this article shows where the approach helps most: in models with roughly 100 billion parameters or more. Smaller models benefit much less and tend to produce illogical chains of thought. The article also covers the technique's details and its limitations, for anyone getting started with AI and Prompt Engineering.

Improved Accuracy: Chain of Thought Prompting can improve accuracy on reasoning tasks.

Explanation of Reasoning: Encourages LLMs to detail their thought process.

Effective for Large Models: Performance gains appear mainly in models of roughly 100B parameters or more.

Comparative Analysis: Benchmark results, including performance on the GSM8K math word problem benchmark.

Practical Examples: Demonstrations of CoT prompting with GPT-3.
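Benchmark comparisons such as GSM8K are usually scored by pulling the final answer out of the model's generated reasoning and comparing it with the reference answer. The Python sketch below assumes each chain of thought ends with a sentence of the form "The answer is N." (the phrasing used in Wei et al.'s exemplars). The function names `extract_answer` and `accuracy` are hypothetical, and real evaluation harnesses may normalize answers differently.

```python
import re
from typing import List, Optional

# Assumption: every chain of thought ends with "The answer is <number>."
# Captures an optionally negative number with optional thousands separators
# and an optional decimal part; a leading "$" is skipped.
ANSWER_PATTERN = re.compile(
    r"the answer is\s*\$?(-?\d[\d,]*(?:\.\d+)?)", re.IGNORECASE
)


def extract_answer(completion: str) -> Optional[str]:
    """Return the number after the last 'The answer is', commas removed, or None."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return None
    return matches[-1].replace(",", "")


def accuracy(completions: List[str], references: List[str]) -> float:
    """Fraction of completions whose extracted answer exactly matches the reference."""
    if len(completions) != len(references) or not references:
        raise ValueError("need equally sized, non-empty lists")
    correct = sum(
        extract_answer(c) == r for c, r in zip(completions, references)
    )
    return correct / len(references)


if __name__ == "__main__":
    outputs = [
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11. The answer is 11.",
        "The cafeteria had 23 apples originally. They used 20 to make lunch. "
        "So they had 23 - 20 = 3. They bought 6 more apples, so they have "
        "3 + 6 = 9. The answer is 9.",
    ]
    print(accuracy(outputs, ["11", "9"]))  # 1.0
```

Exact string matching is a deliberately simple choice here; comparing numeric values (so that "11" and "11.0" count as equal) would be a reasonable refinement.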

What is Chain of Thought Prompting?

Chain of Thought Prompting is a method that prompts AI models to explain their reasoning step by step, which often leads to more accurate results on tasks such as arithmetic and commonsense reasoning.

Which models benefit most from Chain of Thought Prompting?

It is most effective with large language models of roughly 100 billion parameters or more, as shown by PaLM 540B prompted with chain of thought.

How does Chain of Thought Prompting work?

It encourages the model to show its reasoning step by step by including few-shot exemplars in the prompt, each pairing a question with a worked, step-by-step explanation that ends in the answer.
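To make the few-shot setup concrete, here is a minimal Python sketch that assembles a chain-of-thought prompt from exemplars and, for comparison, a standard prompt that gives only the final answers. The exemplar text is the tennis-ball example from Wei et al.; the `Exemplar` class, the `build_prompt` function, and the "Q:/A:" layout are illustrative assumptions. Sending the prompt to a model is left out because it depends on the API in use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    question: str
    reasoning: str  # worked, step-by-step explanation written by the prompt author
    answer: str


# Tennis-ball exemplar from Wei et al.; real prompts typically use several.
EXEMPLARS = [
    Exemplar(
        question=(
            "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
            "Each can has 3 tennis balls. How many tennis balls does he have now?"
        ),
        reasoning=(
            "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
            "6 tennis balls. 5 + 6 = 11."
        ),
        answer="11",
    ),
]


def build_prompt(
    exemplars: List[Exemplar], question: str, chain_of_thought: bool = True
) -> str:
    """Assemble a few-shot Q/A prompt ending with the new, unanswered question.

    With chain_of_thought=False the reasoning is dropped, giving a
    standard few-shot prompt for comparison.
    """
    blocks = []
    for ex in exemplars:
        answer = f"The answer is {ex.answer}."
        if chain_of_thought:
            # Prepend the worked reasoning so the model sees steps before the answer.
            answer = f"{ex.reasoning} {answer}"
        blocks.append(f"Q: {ex.question}\nA: {answer}")
    # The model is expected to continue after "A:" with its own answer.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    print(build_prompt(
        EXEMPLARS,
        "The cafeteria had 23 apples. If they used 20 to make lunch and "
        "bought 6 more, how many apples do they have?",
    ))
```

Given the chain-of-thought version, a sufficiently large model tends to imitate the exemplar and write out its intermediate steps before the final answer, while the standard version invites it to answer directly.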

Are there any limitations to Chain of Thought Prompting?

According to Wei et al., smaller models tend to generate illogical chains of thought, which can lead to worse performance than standard prompting.

Are there any courses available to learn about Prompt Engineering?

Yes, you can join the Intro to Prompt Engineering and the Advanced Prompt Engineering courses to learn more about crafting efficient prompts.