phi-2 vs ggml.ai

Explore the showdown between phi-2 and ggml.ai and find out which AI Large Language Model (LLM) tool wins. We analyze upvotes, features, reviews, pricing, alternatives, and more.

In a face-off between phi-2 and ggml.ai, which one takes the crown?

Placing phi-2 and ggml.ai side by side, both of which are AI-powered large language model (LLM) tools, reveals several key similarities and differences. The upvote count is a draw, with both tools earning the same number of upvotes. Every vote counts! Cast yours and help decide the winner.

phi-2

What is phi-2?

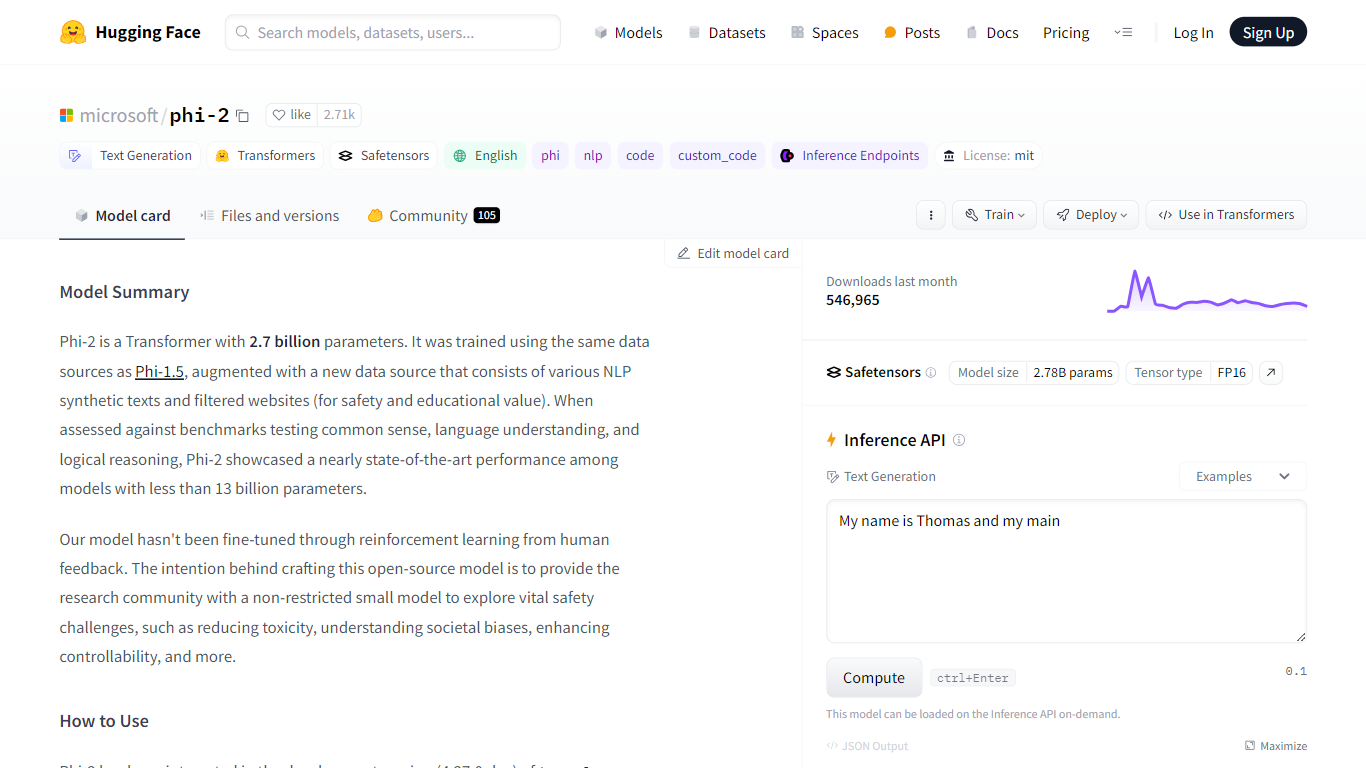

Microsoft's Phi-2, hosted on Hugging Face, is a Transformer-based language model with 2.7 billion parameters. It was trained on a diverse dataset combining synthetic NLP texts with web sources filtered for safety and educational value. Phi-2 performs well on benchmarks for common sense, language understanding, and logical reasoning, setting a high standard for models in its class.

This cutting-edge tool is designed primarily for text generation in English, providing a potent resource for NLP and coding tasks. Despite its capabilities, Phi-2 is recommended as a base for further development rather than a turnkey solution, and users are encouraged to be vigilant for potential biases and to verify outputs for accuracy. The model is available for integration with the latest transformers library and carries the permissive MIT license, promoting open science and democratization of AI.

ggml.ai

What is ggml.ai?

ggml.ai brings machine learning capabilities directly to the edge with its tensor library. Built for large model support and high performance on common hardware platforms, ggml.ai enables developers to implement advanced AI algorithms without the need for specialized equipment. The library, written in C, offers 16-bit float support and integer quantization, along with automatic differentiation and built-in optimization algorithms such as Adam and L-BFGS. It is optimized for Apple Silicon and leverages AVX/AVX2 intrinsics on x86 architectures. Web-based applications can also use it via WebAssembly and WASM SIMD support. With zero runtime memory allocations and no third-party dependencies, ggml.ai presents a minimal and efficient solution for on-device inference.
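As a rough illustration of the integer quantization idea mentioned above, the Python sketch below quantizes one block of floating-point weights to signed 8-bit integers with a single shared scale, then restores them. It is not ggml's code or storage format; the function names `quantize_block` and `dequantize_block` and the sample weights are hypothetical and chosen only for this example.

```python
def quantize_block(values, bits=8):
    """Symmetric integer quantization of one block of floats (illustrative only)."""
    qmax = 2 ** (bits - 1) - 1                 # largest positive level, 127 for 8 bits
    amax = max(abs(v) for v in values)         # largest magnitude in the block
    scale = amax / qmax if amax > 0 else 1.0   # one float scale shared by the block
    quants = [round(v / scale) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Approximate the original floats from the integers and the shared scale."""
    return [q * scale for q in quants]


# Hypothetical sample weights, not taken from any real model.
weights = [0.12, -0.50, 0.33, 0.0, 0.91, -0.77, 0.05, -0.02]
scale, quants = quantize_block(weights)
restored = dequantize_block(scale, quants)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quants, max_error)
```

Each weight is stored as a small integer plus a share of one float scale, which is where the memory saving comes from. The rounding error per weight stays within half of the scale.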

Projects like whisper.cpp and llama.cpp demonstrate the high-performance inference capabilities of ggml.ai, with whisper.cpp providing speech-to-text solutions and llama.cpp focusing on efficient inference of Meta's LLaMA large language model. Moreover, the company welcomes contributions to its codebase and supports an open-core development model through the MIT license. As ggml.ai continues to expand, it seeks talented full-time developers with a shared vision for on-device inference to join its team.

Designed to push the envelope of AI at the edge, ggml.ai is a testament to the spirit of play and innovation in the AI community.

phi-2 Top Features

Model Architecture: Phi-2 is a Transformer-based model with 2.7 billion parameters, known for its performance in language understanding and logical reasoning.

How to Use: Users can integrate Phi-2 with the development version of the transformers library by ensuring `trust_remote_code=True` is used and checking for the correct transformers version.

Intended Uses: Ideal for QA, chat, and code formats, Phi-2 is versatile for various prompts, although its outputs should be seen as starting points for user refinement.

Limitations and Caution: While powerful, Phi-2 has limitations that users should keep in mind, including potentially inaccurate code or facts, societal biases, and verbosity.

Training and Dataset: The model was trained on a 250B-token dataset using 96 A100-80G GPUs over two weeks.
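The "How to Use" and "Intended Uses" entries above can be sketched in Python roughly as follows. This is a minimal sketch, assuming the `transformers` library (with a PyTorch backend) is installed and that the model is published under the Hugging Face ID `microsoft/phi-2`. The helper names `build_qa_prompt` and `generate` are hypothetical, and the "Instruct: ... Output:" layout follows the QA format described on the Phi-2 model card as we understand it.

```python
def build_qa_prompt(question: str) -> str:
    """Format a question in the QA style ("Instruct: ... Output:")."""
    return f"Instruct: {question.strip()}\nOutput:"


def generate(question: str, max_new_tokens: int = 100) -> str:
    """Load Phi-2 and generate a completion (downloads several GB on first call)."""
    # Imported lazily so build_qa_prompt works without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = "microsoft/phi-2"  # assumed Hugging Face model ID
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype="auto",
        trust_remote_code=True,  # the flag called out under "How to Use"
    )
    inputs = tokenizer(build_qa_prompt(question), return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(outputs[0], skip_special_tokens=True)


if __name__ == "__main__":
    # Only the prompt is printed here; call generate(...) to run the model.
    print(build_qa_prompt("Explain what a tensor library does."))
```

As noted under "Limitations and Caution", anything returned by `generate` should be treated as a starting point and checked for accuracy.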

ggml.ai Top Features

Written in C: Ensures high performance and compatibility across a range of platforms.

Optimization for Apple Silicon: Delivers efficient processing and lower latency on Apple devices.

Support for WebAssembly and WASM SIMD: Facilitates web applications to utilize machine learning capabilities.

No Third-Party Dependencies: Makes for an uncluttered codebase and convenient deployment.

Guided Language Output Support: Enhances human-computer interaction with more intuitive AI-generated responses.

phi-2 Category

- Large Language Model (LLM)

ggml.ai Category

- Large Language Model (LLM)

phi-2 Pricing Type

- Freemium

ggml.ai Pricing Type

- Freemium