Last updated 10-23-2025

LiteLLM

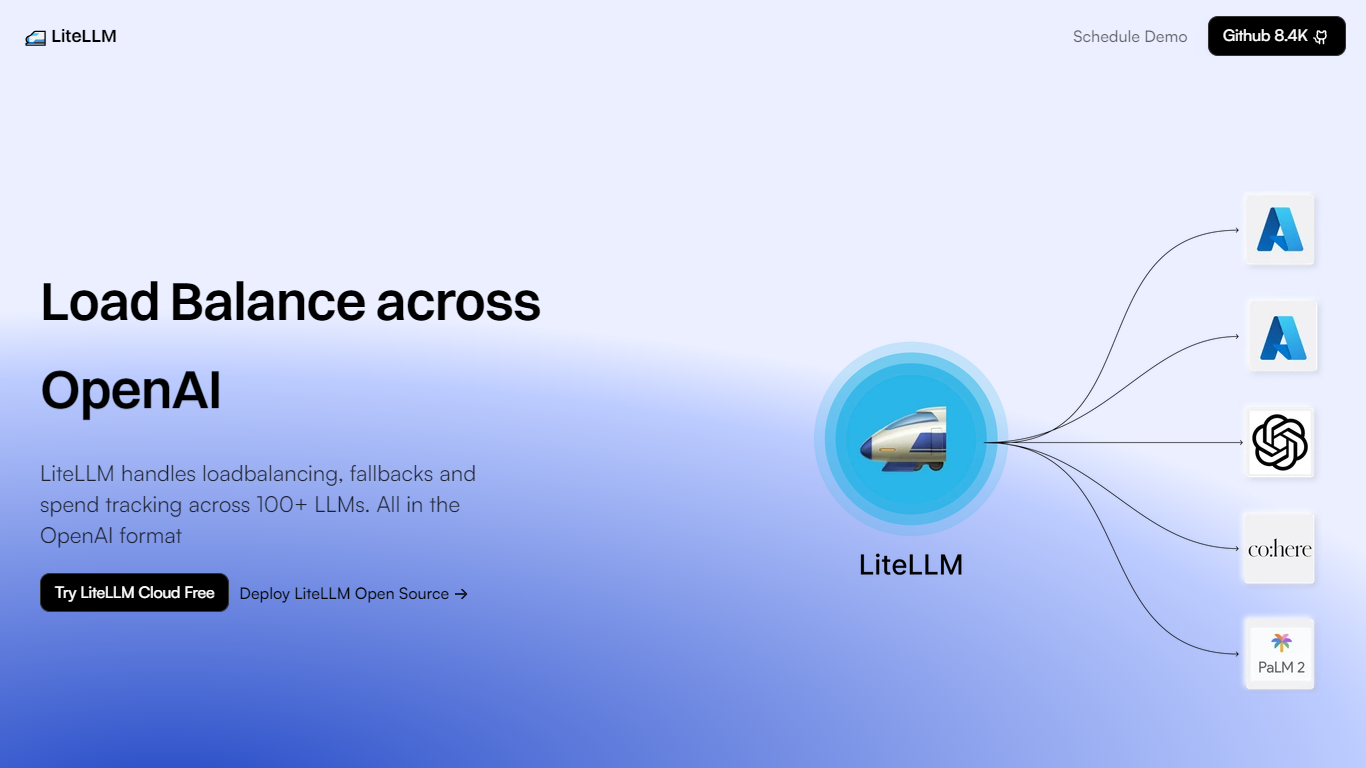

LiteLLM is a platform for managing large language models (LLMs) for businesses and developers. It handles the tedious parts of working with many models by providing load balancing, fallbacks, and spend tracking across more than 100 LLMs, all exposed through the standard OpenAI API format.
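To make "OpenAI format compatibility" concrete: callers always build the same OpenAI-style chat request, and a translation layer reshapes it for each provider. The Python sketch below converts such a request for a provider that expects the system prompt as a separate top-level field, which is how Anthropic's Messages API is structured. The function name, the model string, and the default `max_tokens` are assumptions for illustration; this is not LiteLLM's actual translation code.

```python
from typing import Any, Dict, List

# An OpenAI-style chat request: a model name plus a list of role/content messages.
openai_request: Dict[str, Any] = {
    "model": "some-model",  # hypothetical model name
    "messages": [
        {"role": "system", "content": "You are terse."},
        {"role": "user", "content": "Summarize LiteLLM in one line."},
    ],
    "max_tokens": 64,
}


def to_system_field_format(request: Dict[str, Any], default_max_tokens: int = 1024) -> Dict[str, Any]:
    """Reshape an OpenAI-style request for a provider that takes the system
    prompt as a separate top-level field. Illustrative sketch only."""
    system_parts: List[str] = []
    messages: List[Dict[str, str]] = []
    for msg in request["messages"]:
        if msg["role"] == "system":
            # Pull system messages out of the conversation list.
            system_parts.append(msg["content"])
        else:
            messages.append({"role": msg["role"], "content": msg["content"]})
    payload: Dict[str, Any] = {
        "model": request["model"],
        "messages": messages,
        # Assumed default for providers that require an explicit output limit.
        "max_tokens": request.get("max_tokens", default_max_tokens),
    }
    if system_parts:
        payload["system"] = "\n".join(system_parts)
    return payload


if __name__ == "__main__":
    print(to_system_field_format(openai_request))
```

The caller never changes its request shape; only the translation function differs per provider, which is what lets one codebase switch between models.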

By putting many models behind one interface, LiteLLM aims to make running multiple LLMs more efficient, reliable, and cost-effective. Its features include adding models, balancing load across compute resources, creating keys for access control, and tracking spend to keep budgets under control. Users can try the hosted cloud service for free or deploy the open-source version themselves.
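Key-based access control and spend tracking can be pictured as a running cost total per key that is checked before each request. The sketch below is a minimal, hypothetical model of that idea: `VirtualKey`, `PRICE_PER_1K`, and the prices in it are invented for illustration and do not reflect LiteLLM's schema or any provider's real pricing.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1K tokens; real provider prices differ and change often.
PRICE_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


class BudgetExceeded(Exception):
    """Raised when a key that has used up its budget tries to make a request."""


@dataclass
class VirtualKey:
    """An access key with a spending cap (hypothetical, for illustration)."""
    key: str
    max_budget: float  # USD
    spend: float = 0.0

    def check(self) -> None:
        # Called before forwarding a request: block keys that are out of budget.
        if self.spend >= self.max_budget:
            raise BudgetExceeded(f"{self.key} spent ${self.spend:.4f} of ${self.max_budget:.2f}")

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        # Called after the response arrives, when token counts are known.
        price = PRICE_PER_1K[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        self.spend += cost
        return cost


if __name__ == "__main__":
    key = VirtualKey("team-a", max_budget=0.05)
    key.check()
    key.record("large-model", 1000, 1000)  # costs about $0.04
    key.check()                            # still under budget
    key.record("large-model", 1000, 1000)  # total is now about $0.08
    try:
        key.check()
    except BudgetExceeded as err:
        print("blocked:", err)
```

Cost is recorded after a response because output token counts are only known then, so the budget check blocks the next request rather than the one that crosses the limit.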

The platform is backed by an active community. Its GitHub repository has about 8.4k stars, and the project reports over 40,000 Docker pulls and over 20 million requests served with 99% uptime. More than 150 contributors keep LiteLLM evolving to meet the needs of users across many sectors who want to put LLMs to work.

Load Balancing: Distributes LLM requests across deployments on platforms including Azure, Vertex AI, and Bedrock.

Fallback Solutions: Keeps the service running by routing requests to backup models when a call fails.

Spend Tracking: Monitors and manages spending on LLM operations.

OpenAI Format Compatibility: Maintains standard OpenAI format for seamless integration.

Community Support: Backed by more than 150 contributors, along with resources and documentation.
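The load-balancing and fallback behavior listed above can be sketched as follows, assuming each deployment is just a callable that either returns text or raises an error. `SimpleRouter`, `fake_deployment`, and the group names are hypothetical stand-ins for real provider calls; LiteLLM's own routing logic is not reproduced here.

```python
import itertools
from typing import Callable, Dict, Iterator, List

Deployment = Callable[[str], str]  # hypothetical: any prompt -> text callable


class AllDeploymentsFailed(Exception):
    """Raised when no deployment in a group (or its fallbacks) succeeds."""


class SimpleRouter:
    """Round-robin load balancing with ordered fallback groups (illustrative only)."""

    def __init__(self, groups: Dict[str, List[Deployment]], fallbacks: Dict[str, List[str]]) -> None:
        self.groups = groups        # model alias -> interchangeable deployments
        self.fallbacks = fallbacks  # model alias -> aliases to try, in order, if it fails
        self._cycles: Dict[str, Iterator[Deployment]] = {
            name: itertools.cycle(deps) for name, deps in groups.items()
        }

    def _try_group(self, name: str, prompt: str) -> str:
        # Load balancing: start at the next deployment in round-robin order
        # and try each deployment in the group at most once.
        errors: List[Exception] = []
        for _ in range(len(self.groups[name])):
            deployment = next(self._cycles[name])
            try:
                return deployment(prompt)
            except Exception as exc:  # a real router would distinguish error types
                errors.append(exc)
        raise AllDeploymentsFailed(f"{name}: {errors}")

    def complete(self, model: str, prompt: str) -> str:
        # Fallbacks: if every deployment in the primary group fails, try the next alias.
        for name in [model, *self.fallbacks.get(model, [])]:
            try:
                return self._try_group(name, prompt)
            except AllDeploymentsFailed:
                continue
        raise AllDeploymentsFailed(f"no deployment succeeded for {model!r}")


def fake_deployment(label: str, fail: bool = False) -> Deployment:
    """Stand-in for a real provider call; optionally always fails."""
    def call(prompt: str) -> str:
        if fail:
            raise ConnectionError(f"{label} unavailable")
        return f"{label}: {prompt}"
    return call


if __name__ == "__main__":
    router = SimpleRouter(
        groups={
            "chat": [fake_deployment("azure-east"), fake_deployment("azure-west")],
            "chat-backup": [fake_deployment("bedrock")],
        },
        fallbacks={"chat": ["chat-backup"]},
    )
    print(router.complete("chat", "hi"))  # azure-east: hi
    print(router.complete("chat", "hi"))  # azure-west: hi
```

Round-robin spreads traffic evenly across interchangeable deployments, and the fallback list only comes into play once every deployment in the primary group has failed.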

What does LiteLLM specialize in?

LiteLLM specializes in load balancing, fallback solutions, and spend tracking for over 100 large language models.

Does LiteLLM offer a free trial or an open-source solution?

Yes. LiteLLM offers a free trial of its cloud service, and the open-source version can be deployed on your own infrastructure.

How reliable is LiteLLM in terms of uptime?

LiteLLM reports 99% uptime for its service.

How can I get started with LiteLLM or understand more about its community?

You can contact the LiteLLM team for a demo, or get the open-source code from GitHub, where the repository has over 8.4k stars, a sign of strong community involvement.

How many requests has LiteLLM served?

LiteLLM has served over 20 million requests, which reflects its capacity for handling production LLM workloads.